There are alien minds among us. Not the little green men of science fiction, but the alien minds that power the facial recognition in your smartphone, determine your creditworthiness and write poetry and computer code. These alien minds are artificial intelligence systems, the ghost in the machine that you encounter daily.

But AI systems have a significant limitation: Many of their inner workings are impenetrable, making them fundamentally unexplainable and unpredictable. Furthermore, constructing AI systems that behave in ways that people expect is a significant challenge.

If you fundamentally don’t understand something as unpredictable as AI, how can you trust it?

Why AI is unpredictable

Trust is grounded in predictability. It depends on your ability to anticipate the behavior of others. If you trust someone and they don’t do what you expect, then your perception of their trustworthiness diminishes.

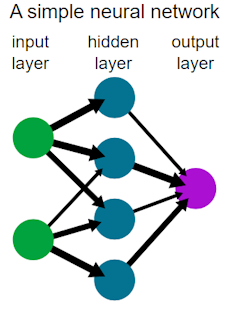

In neural networks, the strength of the connections between ‘neurons’ is adjusted during training, as data passes from the input layer through hidden layers to the output layer, enabling the network to ‘learn’ patterns.

Wiso via Wikimedia Commons

Many AI systems are built on deep learning neural networks, which in some ways emulate the human brain. These networks contain interconnected “neurons” with variables or “parameters” that affect the strength of connections between the neurons. As a naïve network is presented with training data, it “learns” how to classify the data by adjusting these parameters. In this way, the AI system learns to classify data it hasn’t seen before. It doesn’t memorize what each data point is, but instead predicts what a data point might be.
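To make the idea of "adjusting parameters" concrete, here is a minimal sketch of a single artificial neuron, far simpler than the deep networks described above. Everything in it is an illustrative assumption rather than part of any real AI system: the function names `predict` and `train_neuron`, the six made-up training points, and the learning rate. The neuron starts with near-random parameters, nudges them a little each time it sees a labeled example, and is then asked about two points it never saw during training.

```python
import math
import random


def predict(weights, bias, features):
    """Return the neuron's estimate (between 0 and 1) that a point belongs to class 1."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))  # sigmoid squashes z into the range (0, 1)


def train_neuron(samples, labels, epochs=200, learning_rate=0.5, seed=0):
    """Adjust the parameters (weights and bias) a little after each example."""
    rng = random.Random(seed)
    # A "naive" neuron: small random weights, no knowledge of the data yet.
    weights = [rng.uniform(-0.1, 0.1) for _ in samples[0]]
    bias = 0.0
    for _ in range(epochs):
        for features, label in zip(samples, labels):
            error = predict(weights, bias, features) - label
            # Nudge each parameter in the direction that shrinks the error.
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


# Hypothetical training data: two features per point, label 0 or 1.
samples = [(0.1, 0.2), (0.3, 0.1), (0.2, 0.4),
           (0.9, 0.8), (0.7, 0.9), (0.8, 0.6)]
labels = [0, 0, 0, 1, 1, 1]

weights, bias = train_neuron(samples, labels)

# Points the neuron has never seen: it predicts a class rather than recalling one.
for unseen in [(0.05, 0.1), (0.95, 0.9)]:
    print(unseen, round(predict(weights, bias, unseen), 3))
```

Even in this toy case, the "knowledge" the neuron ends up with is nothing more than three numbers: two weights and a bias. With only three, a person can still read them and reason about what the neuron will do. That stops being practical as the number of parameters grows.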

Many of the most powerful AI systems contain trillions of parameters. Because of this, the reasons AI systems make the decisions that they do are often opaque. This is the AI explainability problem – the impenetrable black box of AI decision-making.

Consider a variation of the “Trolley Problem.” Imagine that you are a passenger in a self-driving vehicle, controlled by an AI. A small child runs into the road, and the AI must now decide: run over the child or swerve and crash, potentially injuring its passengers. This choice would be difficult for a human to make, but a human has the benefit of being able to explain their decision. Their rationalization – shaped by ethical norms, the perceptions of others and expected behavior – supports trust.

In contrast, an AI can’t rationalize its decision-making. You can’t look under the hood of the self-driving vehicle at its trillions of parameters to explain why it made the decision that it did. AI fails the predictive requirement for trust.

AI behavior and human expectations

Trust relies not only on predictability, but also on normative or ethical motivations. You typically expect people to act not only as you assume they will, but also as they should. Human…