Conventional silicon architecture has taken computer vision a long way, but Purdue University researchers are developing an alternative path—taking a cue from nature—that they say is the foundation of an artificial retina. Like our own visual system, the device is geared to sense change, making it more efficient in principle than the computationally demanding digital camera systems used in applications like self-driving cars and autonomous robots.

“Computer vision systems use a huge amount of energy, and that’s a bottleneck to using them widely. Our long-term goal is to use biomimicry to tackle the challenge of dynamic imaging with less data processing,” said Jianguo Mei, the Richard and Judith Wien Professor of Chemistry at Purdue’s College of Science. “By mimicking our retina in terms of light perception, our system can be potentially much less data-intensive, though there is a long way ahead to integrate hardware with software to make it become a reality.”

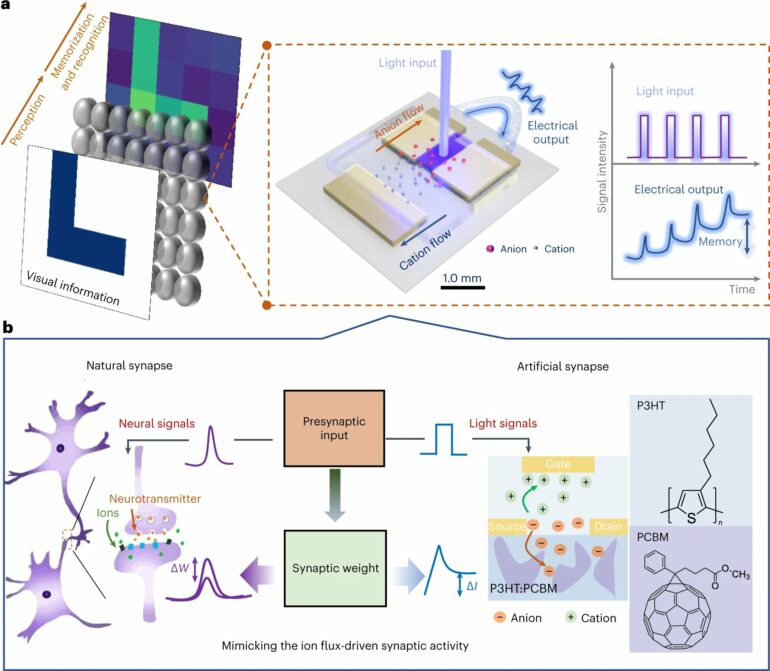

Mei and his team drew their inspiration from light perception in retinal cells. As in nature, light triggers an electrochemical reaction in the prototype device they have built. The reaction strengthens steadily and incrementally with repeated exposure to light and dissipates slowly when light is withdrawn, creating what is effectively a memory of the light information the device received.

That memory could potentially be used to reduce the amount of data that must be processed to understand a moving scene, an approach that is more energy- and computationally efficient, and more error-tolerant, than conventional computer vision.

The team calls its device an organic electrochemical photonic synapse and says that it mimics the workings of the human visual system more closely than conventional camera-based systems do, giving it greater potential as the foundation of a device for human-machine interfaces. The design may also be helpful for neuromorphic computing applications, which operate on principles similar to those of the human brain, said Ke Chen, a graduate student in Mei’s lab and lead author of a Nature Photonics paper that tested the device on facial recognition.

“In a normal computer vision system, you create a signal, then you have to transfer the data from memory to processing and back to memory; it takes a lot of time and energy to do that,” Chen said. “Our device has integrated functions of light perception, light-to-electric signal transformation, and on-site memory and data processing.”

Currently, robotic or autonomous devices rely on the familiar digital camera as the foundation of computer vision. Inside the camera, light-sensitive areas of crystalline silicon, called photosites, absorb photons and release electrons, converting light to an electrical signal that can be processed with increasingly sophisticated image recognition programs. A typical smartphone camera uses upwards of 10 million photosites, each only a few microns (a micron is one-millionth of a meter) on a side, capturing images at far higher resolution than our own eyes can achieve.

But for many of the tasks that use computer vision, all that data isn’t necessary: a conventional system has to analyze all available light information whether or not the scene has changed.

By contrast, Mei’s solution, like human vision, is relatively low resolution but is well suited to sensing movement. Human eyes have a resolution in the neighborhood of 15 microns. The prototype device, which houses 18,000 transistors on a 10-centimeter square chip, has a resolution of a few hundred microns, and Mei said the technology could be improved by refining that resolution to about 10 microns.

“Our eye and brain aren’t as high resolution as silicon computing, but the way we process the data makes our eye better than most of the imaging systems we have right now when it comes to dealing with data,” Mei said. “Computer vision systems deal with a humongous amount of data because the digital camera doesn’t differentiate between what is static and what is dynamic; it just captures everything.”
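To make the static-versus-dynamic point concrete, the toy Python sketch below counts the values a frame-based camera reports for a simple scene and compares them with the events a hypothetical change-based sensor would report if it transmitted only pixels whose intensity changed beyond a threshold. This mirrors the general idea behind event-based vision sensors; it is not a model of the Purdue device, and the function names, the one-dimensional scene, and the threshold are all illustrative assumptions.

```python
# Toy comparison: values reported by a frame-based camera versus events
# reported by a hypothetical change-based sensor. Illustrative only; this is
# NOT a model of the Purdue photonic synapse.


def frame_based_values(frames):
    """A conventional camera reports every pixel of every frame."""
    return sum(len(frame) for frame in frames)


def change_based_events(frames, threshold=0.1):
    """Report (frame_index, pixel_index, new_value) only where a pixel changed
    by more than `threshold` since the previous frame. The first frame is
    sent in full as a reference image (an assumption of this sketch)."""
    events = [(0, i, v) for i, v in enumerate(frames[0])]
    for t in range(1, len(frames)):
        prev, curr = frames[t - 1], frames[t]
        for i, (a, b) in enumerate(zip(prev, curr)):
            if abs(b - a) > threshold:
                events.append((t, i, b))
    return events


def moving_spot(n_pixels=100, n_frames=50):
    """A dark 1-D scene with one bright pixel that moves one step per frame."""
    frames = []
    for t in range(n_frames):
        frame = [0.0] * n_pixels
        frame[t % n_pixels] = 1.0
        frames.append(frame)
    return frames


if __name__ == "__main__":
    frames = moving_spot()
    print(f"frame-based values:  {frame_based_values(frames)}")
    print(f"change-based events: {len(change_based_events(frames))}")
```

With the defaults, the frame-based count is 5000 values while the change-based count is 198 events: a 100-value reference frame plus two changed pixels (the spot switching off in one place and on in the next) in each of the remaining 49 frames. A completely static scene would produce nothing beyond the reference frame.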

Rather than going straight from light to an electrical signal, Mei and his team first convert light to a flow of charged atoms called ions, a mechanism similar to the one retinal cells use to transmit light inputs to the brain. They do this with a small square of a light-sensitive polymer embedded in an electrolyte gel. Light striking a spot on the polymer square attracts positively charged ions in the gel to that spot (and repels negatively charged ions), creating a charge imbalance in the gel.

Repeated exposure to light increases the charge imbalance in the gel, a feature that can be used to differentiate between the consistent light of a static scene and the dynamic light of a changing scene. When the light is removed, the ions remain in their charged configuration for a short period of time in what can be considered a temporary memory of light, gradually returning to a neutral configuration.
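The accumulate-then-fade behavior can be pictured with a leaky integrator. The Python sketch below is a phenomenological toy under stated assumptions, not the device physics from the paper: the charge imbalance `q` is a unitless number (0 means neutral), each step of light fills a fixed fraction `gain` of the remaining headroom (a saturating rule chosen here for illustration), and in the dark `q` relaxes exponentially with a hypothetical time constant `tau`.

```python
# Toy leaky-integrator model of the "temporary memory of light".
# The update rule and every parameter value are illustrative assumptions,
# not equations or measurements from the Nature Photonics paper.
import math


def simulate(light, gain=0.2, tau=10.0, dt=1.0):
    """Return the charge imbalance q (unitless, 0 = neutral) after each step.

    light: light intensity per time step, in [0, 1]
    gain:  hypothetical fraction of the remaining headroom (1 - q)
           that one step of full-intensity light fills
    tau:   hypothetical relaxation time constant, same units as dt
    """
    decay = math.exp(-dt / tau)  # fraction of q that survives one step
    q = 0.0
    trace = []
    for intensity in light:
        q *= decay                         # ions drift back toward neutral
        q += gain * intensity * (1.0 - q)  # light draws more ions to the spot
        trace.append(q)
    return trace


if __name__ == "__main__":
    # Five steps of light followed by ten steps of darkness.
    pulses = [1.0] * 5 + [0.0] * 10
    for step, q in enumerate(simulate(pulses)):
        state = "light" if pulses[step] else "dark"
        print(f"step {step:2d} ({state}): q = {q:.3f}")
```

Under these assumptions, `q` climbs step by step toward a saturation level while the light is on, then shrinks by the same factor each dark step, falling to about 37% of its peak after `tau` time units. A pixel that was lit recently therefore still reads above zero for a while after the light is gone, which is the kind of short-lived memory the paragraph above describes.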

The positively charged spot serves as the gate of a transistor, allowing a small electric current to flow between a source and a drain in the presence of light. Much as in a conventional photodetector, the electric current indicates light intensity and wavelength and is passed to a computer for image recognition. But although the output is the same kind of electric current, it is the intermediate step of converting light to an electrochemical signal that provides the motion-sensing and memory capabilities.

Mei’s electrochemical transistor is one of an emerging class of optoelectronic devices that seek to integrate light perception and memory. The team says its device outperforms others in that class because the charge imbalance increases in smooth, steady increments with repeated exposure to light and decays more slowly than in competing designs. Because future iterations are planned to be made of a flexible material, the researchers may also be able to produce a version that is wearable and even biocompatible.
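A back-of-the-envelope estimate shows why slower decay matters for the claim that the device’s charge imbalance decays more slowly than competing designs. Assume, purely for illustration (the article does not give the decay law), that once the light is removed the charge imbalance relaxes exponentially from a starting level q_0 with time constant tau, and that a downstream readout can still detect it while it stays above a hypothetical threshold q_th. The retention time then follows directly:

```latex
q(t) = q_0 \, e^{-t/\tau}, \qquad
q(t_{\mathrm{ret}}) = q_{\mathrm{th}}
\;\Longrightarrow\;
t_{\mathrm{ret}} = \tau \ln\frac{q_0}{q_{\mathrm{th}}}
```

Under these assumptions the retention time scales linearly with tau: for the same starting level and threshold, a device whose charge imbalance decays twice as slowly holds a detectable memory of light twice as long.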

More information:

Ke Chen et al, Organic optoelectronic synapse based on photon-modulated electrochemical doping, Nature Photonics (2023). DOI: 10.1038/s41566-023-01232-x

Citation:

Researchers look to the human eye to boost computer vision efficiency (2023, November 29)