Wildfires are some of the most destructive natural disasters in the country, threatening lives, destroying homes and infrastructure, and creating air pollution. To forecast and manage wildfires properly, managers need to understand wildfire risk and allocate resources accordingly. A new study contributes scientific expertise to this effort.

In the study, published in the November issue of the journal Earth’s Future, researchers from DRI, Argonne National Laboratory, and the University of Wisconsin-Madison teamed up to assess future fire risk.

They looked at the four fire danger indices used across North America to predict and manage the risk of wildfire, and tested how well that risk correlated with observed wildfire size between 1984 and 2019. Then, they examined how wildfire risk changes under the projected future climate, finding that both higher fire potential and a longer wildfire season are likely under climate change.

“We use several of these fire danger indices to evaluate fire risk in the contiguous U.S.,” said Guo Yu, Ph.D., assistant research professor at DRI and lead author of the study. “But previous studies have only looked at how climate change will alter wildfire risk using one of them, and only a few studies have looked at how fire risk has translated to the size or characteristics of actual wildfires. We wanted to rigorously assess both in this paper.”

Fire danger indices use information about weather conditions and fuel moisture, or how dry the vegetation on the ground is. The most common fire danger indices used in North America are the USGS Fire Potential Index, the Canadian Forest Fire Weather Index, and the Energy Release Component and the Burning Index, both from the National Fire Danger Rating System.

First, the scientists used satellite remote sensing data from 1984 to 2019 to see how potential fire risk correlated with ultimate wildfire size for more than 13,000 wildfires, excluding controlled burns. They found that when wildfire risk was higher, wildfire size tended to be larger, and this relationship was stronger over larger areas.
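As a rough illustration of what checking this kind of relationship can look like, the sketch below computes a Spearman rank correlation between a fire danger index value and the final burned area for a handful of fires. The article does not say which statistic the researchers used, so the choice of Spearman correlation is an assumption, and the variable names and numbers are invented for the example.

```python
from math import sqrt


def ranks(values):
    """Return 1-based ranks; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over any run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = mean_rank
        i = j + 1
    return result


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


# Hypothetical data, NOT from the study: an index value on the day each
# fire started, and the area that fire eventually burned.
index_at_ignition = [12.0, 35.0, 48.0, 60.0, 71.0, 83.0]
burned_area_km2 = [0.4, 2.1, 1.8, 15.0, 40.0, 120.0]

rho = spearman(index_at_ignition, burned_area_km2)
print(f"Spearman rank correlation: {rho:.3f}")
```

A value near 1 would mean that fires starting on higher-danger days tended to grow larger, which is the pattern the study reports.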

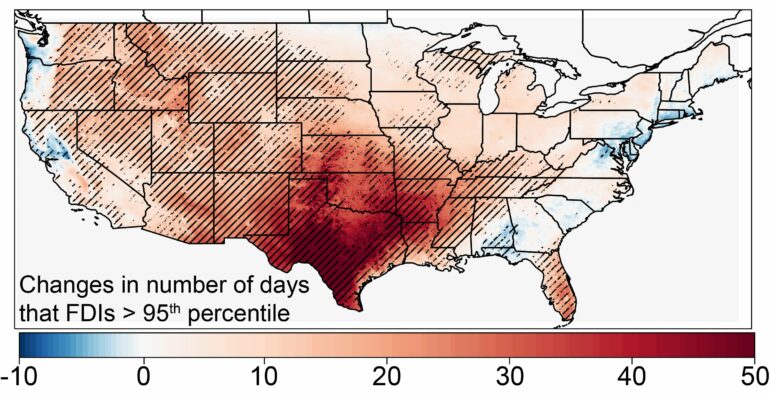

By calculating the fire danger indices from future climate projections, the study found that the number of days of extreme wildfire risk will increase by an average of 10 per year across the continental U.S. by the end of the century, driven largely by increased temperatures.
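One common way to count "extreme" days is to fix a threshold from the historical record and then count how many days exceed it in each period. The sketch below follows that approach. The article does not say how the study defined extreme risk, so the 95th-percentile threshold is an assumption, and the daily index values are synthetic placeholders rather than real projections.

```python
def percentile(values, q):
    """Percentile with linear interpolation, for q between 0 and 100."""
    ordered = sorted(values)
    position = (len(ordered) - 1) * q / 100
    lower = int(position)
    upper = min(lower + 1, len(ordered) - 1)
    fraction = position - lower
    return ordered[lower] + (ordered[upper] - ordered[lower]) * fraction


def extreme_days(daily_index, threshold):
    """Count the days on which the index exceeds the threshold."""
    return sum(1 for value in daily_index if value > threshold)


# Synthetic one-year series, NOT real data: a repeating 0-99 pattern for
# the historical period, shifted upward by 5 to mimic a warmer climate.
historical = [float(day % 100) for day in range(365)]
future = [value + 5 for value in historical]

# Assumed definition of "extreme": above the historical 95th percentile.
threshold = percentile(historical, 95)

extra = extreme_days(future, threshold) - extreme_days(historical, threshold)
print(f"Threshold: {threshold}, additional extreme days per year: {extra}")
```

Keeping the threshold fixed at its historical value is what makes the two counts comparable: the same index value counts as extreme in both periods.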

Certain regions, like the southern Great Plains (including Kansas, Oklahoma, Arkansas, and Texas), are projected to have more than 40 additional days per year of extreme wildfire danger. A few small regions, including the Pacific Northwest coast and the mid-Atlantic coast, are projected to see a shorter annual wildfire risk season due to higher rainfall and humidity.

In the Southwest, the extreme wildfire season is projected to lengthen by more than 20 days per year, with most of the added days falling in the spring and summer months. Longer fire seasons extending into the winter months are also projected, particularly for the Texas-Louisiana coastal plain.

“Under a warmer future climate, we can see that the fire danger will even be higher in the winter,” Yu said. “This surprised me because it feels counterintuitive, but climate change will alter the landscape in so many ways.”

The authors hope the study will help fire managers understand the size of potential wildfires so they can prepare accordingly, and understand how fire seasonality will shift and extend under a changing climate.

More information:

Guo Yu et al., Performance of Fire Danger Indices and Their Utility in Predicting Future Wildfire Danger Over the Conterminous United States, Earth’s Future (2023). DOI: 10.1029/2023EF003823

Provided by

Desert Research Institute

Citation:

Climate change will increase wildfire risk and lengthen fire seasons, study confirms (2023, December 8)