Researchers have developed the first LiDAR-based augmented reality head-up display for use in vehicles. Tests on a prototype version of the technology suggest that it could improve road safety by ‘seeing through’ objects to alert the driver to potential hazards without distracting them.

The technology, developed by researchers from the University of Cambridge, the University of Oxford and University College London (UCL), is based on LiDAR (light detection and ranging). It uses LiDAR data to create ultra-high-definition holographic representations of road objects, which are beamed directly to the driver’s eyes instead of appearing as the 2D windscreen projections used in most head-up displays.

While the technology has not yet been tested in a car, early tests based on data collected from a busy street in central London showed that the holographic images appear in the driver’s field of view according to their actual position, creating an augmented reality. This could be particularly useful where objects such as road signs are hidden by large trees or trucks, allowing the driver to ‘see through’ visual obstructions. The results are reported in the journal Optics Express.

“Head-up displays are being incorporated into connected vehicles, and usually project information such as speed or fuel levels directly onto the windscreen in front of the driver, who must keep their eyes on the road,” said lead author Jana Skirnewskaja, a Ph.D. candidate from Cambridge’s Department of Engineering. “However, we wanted to go a step further by representing real objects as panoramic 3D projections.”

Skirnewskaja and her colleagues based their system on LiDAR, a remote sensing method which works by sending out a laser pulse to measure the distance between the scanner and an object. LiDAR is commonly used in agriculture, archaeology and geography, but it is also being trialled in autonomous vehicles for obstacle detection.
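The article does not say how the scanner turns a pulse into a distance. A common approach is direct time of flight: the pulse travels to the object and back, so the range is half the round-trip path, d = c·t/2. The Python sketch below assumes exactly that, ignores the small correction for the refractive index of air, and uses a hypothetical helper name; some terrestrial scanners use phase-shift ranging instead.

```python
# Speed of light in vacuum, in metres per second (exact by definition of the metre).
SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_from_round_trip(round_trip_s: float) -> float:
    """Hypothetical helper: distance (m) to a target whose echo returns after
    round_trip_s seconds, assuming direct time-of-flight ranging in vacuum.

    The pulse covers the scanner-to-object distance twice, so the one-way
    range is half of the total path c * t.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


if __name__ == "__main__":
    # An echo arriving 200 nanoseconds after emission puts the object about 30 m away.
    print(f"{range_from_round_trip(200e-9):.2f} m")
```

Because light covers roughly 30 cm per nanosecond, timing precision translates directly into range precision, which is why LiDAR receivers need very fast electronics.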

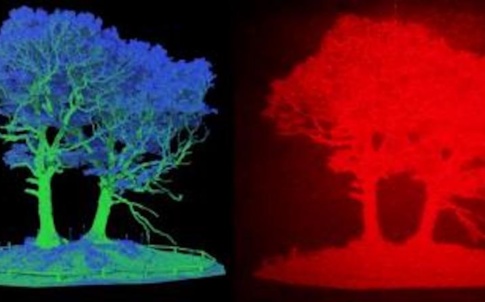

Using LiDAR, the researchers scanned Malet Street, a busy street on the UCL campus in central London. Co-author Phil Wilkes, a geographer who normally uses LiDAR to scan tropical forests, scanned the whole street using a technique called terrestrial laser scanning. Millions of pulses were sent out from multiple positions along Malet Street. The LiDAR data was then combined with point cloud data, building up a 3D model.

“This way, we can stitch the scans together, building a whole scene, which doesn’t only capture trees, but cars, trucks, people, signs, and everything else you would see on a typical city street,” said Wilkes. “Although the data we captured was from a stationary platform, it’s similar to the sensors that will be in the next generation of autonomous or semi-autonomous vehicles.”
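Stitching scans amounts to expressing every scan's points in one shared street coordinate frame. The sketch below assumes each scanner position and heading are already known (in practice they are estimated during registration, for example from survey targets or by iterative closest point alignment) and that the scanner was levelled, so only a rotation about the vertical axis is needed. The function names are illustrative, not the team's software.

```python
import math
from typing import Iterable, List, Tuple

Point = Tuple[float, float, float]
# (points in the scanner's own frame, heading in radians, scanner position in street frame)
Scan = Tuple[List[Point], float, Point]


def to_street_frame(points: List[Point], yaw_rad: float, origin: Point) -> List[Point]:
    """Rotate scanner-frame points about the vertical (z) axis by yaw_rad,
    then translate them by the scanner's position in the shared street frame."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    ox, oy, oz = origin
    return [(c * x - s * y + ox, s * x + c * y + oy, z + oz) for x, y, z in points]


def stitch(scans: Iterable[Scan]) -> List[Point]:
    """Merge several scans into one point cloud in the street frame."""
    merged: List[Point] = []
    for points, yaw_rad, origin in scans:
        merged.extend(to_street_frame(points, yaw_rad, origin))
    return merged


if __name__ == "__main__":
    # Two scanner positions 10 m apart, facing each other, both see the same
    # point on a tree trunk 5 m ahead and 1 m up.
    scan_a = ([(5.0, 0.0, 1.0)], 0.0, (0.0, 0.0, 0.0))
    scan_b = ([(5.0, 0.0, 1.0)], math.pi, (10.0, 0.0, 0.0))
    for x, y, z in stitch([scan_a, scan_b]):
        print(round(x, 6), round(y, 6), round(z, 6))
```

Both observations land on the same street coordinates, which is what lets scans from many positions build up a single consistent model.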

Once the 3D model of Malet Street was complete, the researchers transformed various objects on the street into holographic projections. The LiDAR data, in the form of point clouds, was processed by separation algorithms to identify and extract the target objects. Another algorithm converted the target objects into computer-generated diffraction patterns, which were then fed into the optical setup to project 3D holographic objects into the driver’s field of view.
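The article does not name the separation or hologram algorithms. As an illustration only, the sketch below swaps in two textbook techniques: a crude extraction step that keeps the points inside a bounding box around the target, and the point-source method for computer-generated holograms, which sums a spherical wave from every object point at each pixel of the hologram plane and keeps only the phase, the form a phase-only spatial light modulator can display. The function names, the box, the 8 µm pixel pitch and the 532 nm wavelength are assumptions, and the object is assumed to be already scaled into display coordinates in front of the hologram plane (z > 0).

```python
import cmath
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def extract_in_box(cloud: List[Point], lo: Point, hi: Point) -> List[Point]:
    """Keep only the points inside the axis-aligned box [lo, hi]: a deliberately
    simple, hypothetical stand-in for a real segmentation algorithm."""
    return [p for p in cloud
            if all(low <= coord <= high for coord, low, high in zip(p, lo, hi))]


def point_source_hologram(points: List[Point], n: int, pitch_m: float,
                          wavelength_m: float) -> List[List[float]]:
    """Phase-only hologram of n x n pixels on the plane z = 0.

    Each pixel receives the sum of spherical waves exp(i k r) / r from every
    object point; only the phase of that complex sum is kept.
    """
    if any(z <= 0 for _, _, z in points):
        raise ValueError("object points must lie in front of the hologram (z > 0)")
    k = 2 * math.pi / wavelength_m  # wavenumber
    half = (n - 1) / 2              # centre the pixel grid on the optical axis
    phases: List[List[float]] = []
    for row in range(n):
        y = (row - half) * pitch_m
        line: List[float] = []
        for col in range(n):
            x = (col - half) * pitch_m
            field = 0j
            for px, py, pz in points:
                r = math.sqrt((x - px) ** 2 + (y - py) ** 2 + pz ** 2)
                field += cmath.exp(1j * k * r) / r
            line.append(cmath.phase(field))  # value in [-pi, pi]
        phases.append(line)
    return phases


if __name__ == "__main__":
    # Two points of a (scaled-down) target object plus one unrelated point.
    cloud = [(0.001, 0.0, 0.2), (-0.001, 0.0, 0.2), (0.5, 0.5, 0.9)]
    target = extract_in_box(cloud, (-0.01, -0.01, 0.1), (0.01, 0.01, 0.3))
    holo = point_source_hologram(target, n=16, pitch_m=8e-6, wavelength_m=532e-9)
    print(len(target), "points ->", len(holo), "x", len(holo[0]), "phase map")
```

The triple loop makes the cost proportional to pixels × points, which is why practical systems rely on faster methods (FFT-based propagation, layer-based approaches, GPUs) rather than this brute-force sum.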

The optical setup is capable of projecting multiple layers of holograms with the help of advanced algorithms. The holographic projection can appear at different sizes and is aligned with the position of the represented real object on the street. For example, a hidden street sign would appear as a holographic projection relative to its actual position behind the obstruction, acting as an alert mechanism.
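The article does not describe the display geometry, but the alignment it mentions can be illustrated with similar triangles. Assuming the driver's eye is at the origin, z points forward along the road, and the hologram forms on a virtual image plane a fixed distance D ahead, a marker drawn at (x·D/z, y·D/z) on that plane lies on the line of sight to an object at (x, y, z), even if the object itself is hidden behind a truck. The function name and the 2.5 m plane distance are hypothetical.

```python
from typing import Tuple


def align_to_line_of_sight(obj_xyz: Tuple[float, float, float],
                           plane_distance_m: float) -> Tuple[float, float]:
    """Hypothetical helper: position (u, v), in metres, on a virtual image plane
    plane_distance_m ahead of the eye, such that the marker sits on the straight
    line from the eye to the real object (similar triangles)."""
    x, y, z = obj_xyz
    if z <= 0:
        raise ValueError("object must be in front of the driver (z > 0)")
    scale = plane_distance_m / z
    return (x * scale, y * scale)


if __name__ == "__main__":
    # A street sign 3 m to the right, 1.5 m above eye level and 30 m ahead.
    u, v = align_to_line_of_sight((3.0, 1.5, 30.0), plane_distance_m=2.5)
    print(f"draw marker at u={u:.3f} m, v={v:.3f} m")
```

A real system would also need the driver's actual eye position, which varies with seat and posture; this sketch fixes it at the origin.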

In future, the researchers hope to refine their system by personalising the layout of the head-up display. They have already created an algorithm capable of projecting several layers of different objects, and these layered holograms can be freely arranged in the driver’s vision space. For example, in the first layer, a traffic sign at a greater distance can be projected at a smaller size. In the second layer, a warning sign at a closer distance can be displayed at a larger size.
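The size rule in this example follows from perspective: an object of height h at distance d subtends the same angle as a marker of height h·D/d on an image plane at distance D, so farther objects get smaller markers. The sketch below applies that rule to a list of layers and orders them from farthest to nearest. The Layer type, the 2.5 m plane distance, the sign sizes and the user_scale preference factor are all illustrative assumptions, not details from the study.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Layer:
    label: str
    distance_m: float  # distance from the driver's eye to the real object
    height_m: float    # real-world height of the object


def layout_layers(layers: List[Layer], plane_distance_m: float,
                  user_scale: float = 1.0) -> List[Tuple[str, float]]:
    """Return (label, displayed height in metres) ordered farthest to nearest.

    A height of h * D / d keeps each marker's angular size equal to that of the
    real object; user_scale is a hypothetical per-driver preference multiplier.
    """
    if any(layer.distance_m <= 0 for layer in layers):
        raise ValueError("distances must be positive")
    ordered = sorted(layers, key=lambda layer: layer.distance_m, reverse=True)
    return [(layer.label, layer.height_m * plane_distance_m / layer.distance_m * user_scale)
            for layer in ordered]


if __name__ == "__main__":
    scene = [Layer("warning sign", 15.0, 0.9), Layer("traffic sign", 60.0, 0.9)]
    for label, height in layout_layers(scene, plane_distance_m=2.5):
        print(f"{label}: {height * 100:.1f} cm")
```

Ordering far to near is one plausible choice, letting nearer, more urgent markers be drawn over distant ones; the user_scale parameter hints at how a personalised layout could enlarge or shrink every layer at once.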

“This layering technique provides an augmented reality experience and alerts the driver in a natural way,” said Skirnewskaja. “Every individual may have different preferences for their display options. For instance, the driver’s vital health signs could be projected in a desired location of the head-up display.

“Panoramic holographic projections could be a valuable addition to existing safety measures by showing road objects in real time. Holograms act to alert the driver but are not a distraction.”

The researchers are now working to miniaturise the optical components used in their holographic setup so they can fit into a car. Once the setup is complete, vehicle tests on public roads in Cambridge will be carried out.


More information:

Jana Skirnewskaja et al, LiDAR-derived digital holograms for automotive head-up displays, Optics Express (2021). DOI: 10.1364/oe.420740

Provided by University of Cambridge

Citation: 3D holographic head-up display could improve road safety (2021, April 26) retrieved 26 April 2021 from https://phys.org/news/2021-04-3d-holographic-head-up-road-safety.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.