A new method of analyzing the complex data from massive astronomical events could help gravitational wave astronomers avoid a looming computational crunch.

Researchers from the University of Glasgow have used machine learning to develop a new system for processing the data collected from detectors like the Laser Interferometer Gravitational-Wave Observatory (LIGO).

The system, which they call VItamin, is capable of fully analyzing the data from a single signal collected by gravitational wave detectors in less than a second, a significant improvement on current analysis techniques.

Since the historic first detection of the ripples in spacetime caused by colliding black holes in 2015, gravitational wave astronomers have relied on an array of powerful computers to analyze detected signals using a process known as Bayesian inference.

A full analysis of each signal, which provides valuable information about the masses and spins of the bodies involved, the polarization of the signal and the inclination of the orbit, can currently take days to complete.
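
In Bayesian inference, the goal is a posterior probability for each candidate value of the source parameters, given the noisy data. A minimal sketch, assuming a deliberately toy model (a single unknown amplitude of a fixed-frequency sinusoid in white Gaussian noise of known level, with a flat prior), is shown below. The function names `simulate`, `log_likelihood` and `grid_posterior` are hypothetical illustrations, not part of any LIGO software or of VItamin.

```python
import math
import random

def simulate(amplitude, freq=5.0, n=200, sigma=1.0, seed=0):
    """Toy 'detector' data: a sinusoid of the given amplitude plus Gaussian white noise."""
    rng = random.Random(seed)
    return [amplitude * math.sin(2 * math.pi * freq * i / n) + rng.gauss(0.0, sigma)
            for i in range(n)]

def log_likelihood(data, amplitude, freq=5.0, sigma=1.0):
    """Gaussian log-likelihood of the data for a template amplitude (constant terms dropped)."""
    n = len(data)
    resid2 = 0.0
    for i, d in enumerate(data):
        model = amplitude * math.sin(2 * math.pi * freq * i / n)
        resid2 += (d - model) ** 2
    return -0.5 * resid2 / sigma ** 2

def grid_posterior(data, amplitudes):
    """Normalised posterior over a grid of amplitudes, assuming a flat prior."""
    logs = [log_likelihood(data, a) for a in amplitudes]
    peak = max(logs)  # subtract the maximum before exponentiating, for numerical stability
    weights = [math.exp(l - peak) for l in logs]
    total = sum(weights)
    return [w / total for w in weights]

amplitudes = [i * 0.01 for i in range(301)]  # candidate amplitudes from 0.0 to 3.0
data = simulate(amplitude=1.5)
post = grid_posterior(data, amplitudes)
mean = sum(a * p for a, p in zip(amplitudes, post))
print(f"posterior mean amplitude: {mean:.2f}")
```

A grid works for one parameter, but the number of grid points grows exponentially with the number of parameters, and a real analysis must also evaluate an expensive waveform model for every likelihood call. This helps explain why standard analyses rely on stochastic samplers and can take days per signal.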

Since that first detection, gravitational wave detectors like LIGO in the U.S. and Virgo in Italy have been upgraded to become more sensitive to weaker signals, and other detectors like KAGRA in Japan have come online.

As a result, gravitational wave signals are being detected with increasing regularity, and the current computing infrastructure is under growing strain to analyze each detection. As detector performance continues to improve with upgrades between observing runs, there is a risk that the rising number of signals will overwhelm the system's processing capacity.

VItamin was developed by researchers from the University of Glasgow’s School of Physics & Astronomy in collaboration with colleagues from the School of Computing Science.

In a new paper published today in the journal Nature Physics, they describe how they ‘trained’ VItamin to recognize gravitational wave signals from binary black holes using a machine learning technique called a conditional variational autoencoder, or CVAE.
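
To give a flavour of what training a CVAE involves, here is a minimal sketch of a generic CVAE training objective (the negative evidence lower bound), assuming diagonal Gaussian distributions everywhere. That assumption is a simplification for illustration and is not VItamin's exact architecture. Here `x` stands for the source parameters and `y` for the noisy signal; the means and log-variances would come from neural networks in practice but are plain lists here, and all function names are hypothetical.

```python
import math

def gaussian_kl(mu_q, logvar_q, mu_p, logvar_p):
    """KL(q || p) between two diagonal Gaussians, summed over latent dimensions."""
    kl = 0.0
    for mq, lq, mp, lp in zip(mu_q, logvar_q, mu_p, logvar_p):
        kl += 0.5 * (lp - lq + (math.exp(lq) + (mq - mp) ** 2) / math.exp(lp) - 1.0)
    return kl

def gaussian_nll(x, mu, logvar):
    """Negative log-likelihood of the parameters x under a diagonal Gaussian."""
    nll = 0.0
    for xi, m, lv in zip(x, mu, logvar):
        nll += 0.5 * (math.log(2 * math.pi) + lv + (xi - m) ** 2 / math.exp(lv))
    return nll

def cvae_loss(x, decoder_out, recog_out, prior_out):
    """Negative ELBO for one training pair (x = source parameters, y = noisy signal).

    recog_out   : (mu, logvar) of q(z | x, y), the recognition encoder, used only in training
    prior_out   : (mu, logvar) of r(z | y), the encoder that sees only the data
    decoder_out : (mu, logvar) of r(x | y, z), evaluated at a z drawn from q
    """
    reconstruction = gaussian_nll(x, *decoder_out)
    kl = gaussian_kl(*recog_out, *prior_out)
    return reconstruction + kl
```

Minimizing this loss teaches the data-only encoder to mimic the encoder that also sees the true parameters. Once trained, posterior samples for a new signal come from repeatedly drawing `z` from `r(z | y)` and then `x` from `r(x | y, z)`, which is a handful of network evaluations rather than a long sampling run.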

The team created a series of simulated gravitational wave signals and overlaid them with noise to mimic the background noise from which detectors must pick out each real signal. They then passed these simulations through the machine learning system around 10 million times.
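
Building such a training set can be sketched as follows: draw source parameters at random, generate the clean signal, then add noise. In this sketch `toy_waveform` is a hypothetical stand-in for a real waveform model (a sinusoid whose frequency rises linearly, loosely chirp-like), only two parameters are used instead of 15, and the noise is assumed white and Gaussian, whereas real detector noise is coloured and non-stationary.

```python
import math
import random

def toy_waveform(amplitude, f0, n=256, duration=1.0):
    """Hypothetical stand-in for a waveform model: a sinusoid with linearly rising frequency."""
    out = []
    for i in range(n):
        t = duration * i / n
        phase = 2 * math.pi * (f0 * t + 0.5 * f0 * t * t)  # instantaneous frequency f0 * (1 + t)
        out.append(amplitude * math.sin(phase))
    return out

def make_training_set(size, sigma=1.0, seed=42):
    """Draw random source parameters, build the clean signal, then overlay Gaussian noise."""
    rng = random.Random(seed)
    pairs = []
    for _ in range(size):
        params = (rng.uniform(0.5, 2.0), rng.uniform(10.0, 30.0))  # (amplitude, f0) prior draws
        clean = toy_waveform(*params)
        noisy = [s + rng.gauss(0.0, sigma) for s in clean]
        pairs.append((params, noisy))
    return pairs

training_pairs = make_training_set(1000)
```

Each `(params, noisy)` pair plays the role of one `(x, y)` example for the network. Because simulation is cheap, a generator like this could be called repeatedly so that the network sees fresh noise realizations as training proceeds.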

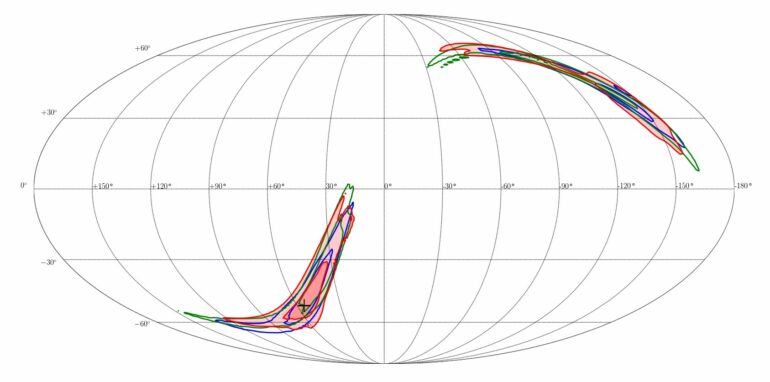

Over the course of the process, VItamin improved its ability to pick out signals and analyze 15 parameters until it was capable of providing accurate results in less than a second.

Hunter Gabbard, of the University of Glasgow’s School of Physics and Astronomy, is the lead author of the paper. He said: “Gravitational wave astronomy has provided us with an entirely new way to listen to the universe, and the pace of developments since the first detection in 2015 has been remarkable.”

“As detector technology improves further still, and new detectors start listening too, we expect to be picking up hundreds of signals a year in the near future. Harnessing the power of machine learning will be vital to helping us keep pace with detector developments, and VItamin is an exciting development towards that goal.

“We’re keen to work closely with colleagues in our global collaboration to integrate VItamin into the standard toolkit for detecting and responding to gravitational wave signals.”

VItamin's ability to rapidly analyze signal parameters could also help astronomers around the world respond more quickly to gravitational wave events that are also likely to be visible to optical or radio telescopes.

Dr. Chris Messenger of the School of Physics and Astronomy, a co-author of the paper, added: “Events like the collisions of black holes are invisible to electromagnetic telescopes, which is why we didn’t have direct evidence of their existence until the first gravitational wave signal from a black hole merger was detected.

“But events like the collision of two neutron stars do have a visible component. In 2017, we detected the first gravitational wave signal from a neutron star merger and we were able to help our collaborators in electromagnetic astronomy turn their telescopes to the point in the sky where they could see the afterglow of the event.

“We could do that thanks to gravitational wave detectors’ early-warning systems, which give us an initial readout of data like the location of events. Every extra second that it takes to point telescopes at the sky is a missed opportunity to collect valuable information. VItamin could help us provide much more detailed information to our colleagues, enabling much more rapid response and allowing the collection of a broader spectrum of data.”

Professor Roderick Murray-Smith and Dr. Francesco Tonolini of the School of Computing Science are co-authors of the paper.

Professor Murray-Smith said: “The scientific domain of gravitational wave astronomy was a new area for us, and it gave us the opportunity to design new models, specifically tailored for this application, which brought understanding of the physics together with leading edge machine learning methods. The University of Glasgow-led QuantIC project, which funded our work, has been a great opportunity to bring machine learning together with science, especially physics.”

Dr. Tonolini added: “In the past, these latent variable models have been typically developed and optimized to capture distributions of images, text and other common signals. However, the data and distributions encountered in the gravitational wave domain are really quite unique and required us to re-invent components of these models to achieve the success demonstrated.”

The team’s paper, titled “Bayesian parameter estimation using conditional variational autoencoders for gravitational-wave astronomy,” is published in Nature Physics.

More information:

Hunter Gabbard et al, Bayesian parameter estimation using conditional variational autoencoders for gravitational-wave astronomy, Nature Physics (2021). DOI: 10.1038/s41567-021-01425-7

Provided by University of Glasgow

Citation: Turbocharged data analysis could prevent gravitational wave computing crunch (2021, December 21)