A collaboration between Lawrence Berkeley National Laboratory’s (Berkeley Lab’s) Applied Mathematics and Computational Research Division (AMCRD) and Physics Division has yielded a new approach to error mitigation that could help make quantum computing’s theoretical potential a reality.

The research team describes this work in a paper published in Physical Review Letters, “Mitigating Depolarizing Noise on Quantum Computers with Noise-Estimation Circuits.”

“Quantum computers have the potential to solve more complex problems way faster than classical computers,” said Bert de Jong, one of the lead authors of the study and the director of the AIDE-QC and QAT4Chem quantum computing projects. De Jong also leads the AMCRD’s Applied Computing for Scientific Discovery Group. “But the real challenge is quantum computers are relatively new. And there’s still a lot of work that has to be done to make them reliable.”

For now, one of the main problems is that quantum computers are still too error-prone to be consistently useful. This is due in large part to what is known as “noise,” the unwanted disturbances that introduce errors into a computation.

There are different types of noise, including readout noise and gate noise. Readout noise affects reading out the result of a run on a quantum computer: the more noise, the higher the chance that a qubit (the quantum equivalent of a bit on a classical computer) will be measured in the wrong state. Gate noise affects the operations themselves; here, noise means the probability of applying the wrong operation. Because noise accumulates with every operation, the more operations a computation requires, the harder it becomes to tease out the right answer, which severely limits the usability of quantum computers as they are scaled up.
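A back-of-the-envelope sketch shows why the number of operations matters so much. It rests on a simplifying assumption that does not come from the study: every CNOT gate independently fails with the same probability `p`, so a circuit with `n` CNOTs runs without a single gate error with probability `(1 - p) ** n`. The rate `p = 0.01` below is a hypothetical value chosen only for illustration.

```python
def error_free_probability(p: float, n_gates: int) -> float:
    """Probability that none of n_gates gates fails.

    Hypothetical model: each gate fails independently with the same
    probability p. Real devices have gate-dependent, correlated errors.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability in [0, 1]")
    if n_gates < 0:
        raise ValueError("n_gates must be non-negative")
    return (1.0 - p) ** n_gates


if __name__ == "__main__":
    p = 0.01  # hypothetical per-CNOT error rate
    for n in (10, 50, 100, 200):
        print(f"{n:4d} CNOTs -> {error_free_probability(p, n):.3f} chance of no gate error")
```

Under these assumed numbers, about 90 percent of runs of a 10-CNOT circuit are free of gate errors, but only about 13 percent of runs of a 200-CNOT circuit are, which is why circuits with hundreds of CNOTs are so hard to run reliably without mitigation.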

“So noise here just basically means: It’s stuff you don’t want, and it obscures the result you do want,” said Ben Nachman, a Berkeley Lab physicist and co-author on the study who also leads the cross-cutting Machine Learning for Fundamental Physics group.

And while error correction—which is routine in classical computers—would be ideal, it is not yet feasible on current quantum computers due to the number of qubits needed. The next best thing: error mitigation—methods and software to reduce noise and minimize errors in the science outcomes of quantum simulations. “On average, we want to be able to say what the right answer should be,” Nachman said.

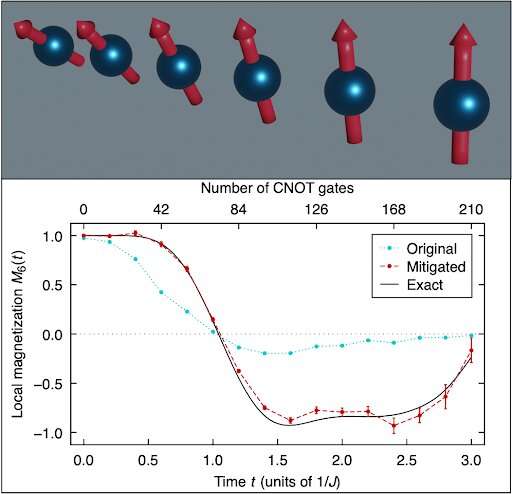

To get there, the Berkeley Lab researchers developed a novel approach they call noise estimation circuits. A circuit is a series of operations, or a program, executed on a quantum computer to calculate the answer to a scientific problem. The team created a modified version of the circuit whose answer is predictable (0 or 1) and used the difference between the measured and predicted answers to correct the measured output of the real circuit.
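The idea behind noise estimation circuits can be sketched in a few lines of Python. The sketch assumes a simple global depolarizing noise model (the paper’s title refers to depolarizing noise, but the details here are illustrative, not the team’s implementation): with probability `p` the qubits’ state is scrambled into a completely random one, which shrinks a measured expectation value by a factor `(1 - p)`. Results are expressed as the average of +1 for each measured 0 and -1 for each measured 1. Because the modified circuit is assumed to suffer the same `p` but has a known answer (bit 0, i.e. an average of +1), its measured result reveals `(1 - p)`, and dividing the real circuit’s result by it undoes the shrinkage. The function names and the values `p = 0.7` and `ideal = 0.6` are hypothetical.

```python
import numpy as np


def sample_z_expectation(ideal_z: float, p: float, shots: int,
                         rng: np.random.Generator) -> float:
    """Estimate <Z> on one qubit from `shots` simulated measurements.

    Assumed noise model: global depolarizing noise with probability p,
    which replaces the state by the maximally mixed state (whose <Z> is 0),
    so the noisy expectation value is (1 - p) * ideal_z. A measured bit 0
    counts as +1 and a measured bit 1 counts as -1.
    """
    noisy_z = (1.0 - p) * ideal_z
    prob_zero = (1.0 + noisy_z) / 2.0
    zeros = rng.binomial(shots, prob_zero)
    return (2 * zeros - shots) / shots


def mitigate(target_z: float, estimation_z: float,
             estimation_ideal: float = 1.0) -> float:
    """Correct the target result using a noise-estimation circuit.

    The estimation circuit is assumed to suffer the same depolarizing
    probability p as the target circuit but to have a known ideal answer,
    so estimation_z / estimation_ideal estimates the surviving fraction (1 - p).
    """
    surviving_fraction = estimation_z / estimation_ideal
    if surviving_fraction <= 0:
        raise ValueError("noise too strong: no signal left to rescale")
    return target_z / surviving_fraction


if __name__ == "__main__":
    rng = np.random.default_rng(seed=7)
    p = 0.7        # hypothetical depolarizing probability
    ideal = 0.6    # hypothetical ideal <Z> of the target circuit
    target = sample_z_expectation(ideal, p, 100_000, rng)
    estimation = sample_z_expectation(1.0, p, 100_000, rng)
    print(f"raw: {target:.3f}  mitigated: {mitigate(target, estimation):.3f}")
```

Dividing by the estimated surviving fraction also magnifies statistical fluctuations, so when `(1 - p)` is small the correction needs correspondingly more measurements.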

The noise estimation circuit approach corrects some errors, but not all. The Berkeley Lab team therefore combined it with three other error mitigation techniques: readout error correction using “iterative Bayesian unfolding,” a technique commonly used in high-energy physics; a homegrown version of randomized compiling; and error extrapolation. By putting all these pieces together, they were able to obtain reliable results from an IBM quantum computer.
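Iterative Bayesian unfolding, in the form commonly used in high-energy physics, describes readout noise with a response matrix that gives the probability of measuring each outcome when the qubits are truly in a given state, then repeatedly applies Bayes’ theorem to infer the true distribution from the measured one. The sketch below is a generic textbook version, not the team’s code; the function name and the single-qubit error rates are hypothetical.

```python
import numpy as np


def iterative_bayesian_unfolding(response, measured, n_iterations=10, prior=None):
    """Estimate the true outcome distribution from noisy readout.

    response[i, j] is P(measure outcome i | true outcome j); columns sum to 1.
    measured[i] is the observed fraction (or count) of outcome i.
    Each iteration applies Bayes' theorem with the current estimate as prior.
    """
    response = np.asarray(response, dtype=float)
    measured = np.asarray(measured, dtype=float)
    n_true = response.shape[1]
    # Start from a uniform prior unless one is supplied.
    truth = np.full(n_true, 1.0 / n_true) if prior is None else np.asarray(prior, dtype=float)
    for _ in range(n_iterations):
        # Measured distribution predicted by the current estimate.
        folded = response @ truth
        # posterior[i, j] = P(true j | measured i) under the current prior.
        joint = response * truth[np.newaxis, :]
        posterior = np.divide(joint, folded[:, np.newaxis],
                              out=np.zeros_like(joint),
                              where=folded[:, np.newaxis] > 0)
        # Redistribute the measured data over the true outcomes.
        truth = posterior.T @ measured
    return truth


if __name__ == "__main__":
    # Hypothetical single-qubit readout: 5% of true 0s read as 1,
    # 8% of true 1s read as 0.
    response = np.array([[0.95, 0.08],
                         [0.05, 0.92]])
    true_dist = np.array([0.3, 0.7])
    measured = response @ true_dist  # what a noisy device would report
    print(iterative_bayesian_unfolding(response, measured, n_iterations=100))
```

In practice the response matrix would be calibrated by preparing each basis state and recording how often each outcome is read out, and stopping after a modest number of iterations is a common way to keep statistical fluctuations in the data from being amplified.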

Making bigger simulations possible

This work could have far-reaching implications for the field of quantum computing. The new error mitigation strategy allows researchers to tease the right answer out of simulations that require a large number of operations, “way more than what people generally have been able to do,” de Jong said.

Instead of tens of entangling operations known as controlled-NOT (CNOT) gates, the new technique allows researchers to run hundreds of them and still get reliable results, he explained. “So we can actually do bigger simulations that could not be done before.”

What’s more, the Berkeley Lab group was able to use these techniques effectively on a quantum computer that’s not necessarily optimally tuned to reduce gate noise, de Jong said. That helps broaden the appeal of the novel error mitigation approach.

“It is a good thing because if you can do it on those kinds of platforms, we can probably do it even better on ones that are less noisy,” he said. “So it’s a very general approach that we can use on many different platforms.”

For researchers, the new error mitigation approach means potentially being able to tackle bigger, more complex problems with quantum computers. For instance, scientists will be able to perform chemistry simulations with a lot more operations than before, said de Jong, a computational chemist by trade.

“My interest is trying to solve problems that are relevant to carbon capture, to battery research, to catalysis research,” he said. “And so my portfolio has always been: I do the science, but I also develop the tools that enable me to do the science.”

Advances in quantum computing have the potential to lead to breakthroughs in a number of areas, from energy production, de-carbonization, and cleaner industrial processes to drug development and artificial intelligence. At CERN’s Large Hadron Collider (LHC), where researchers send particles crashing into each other at incredibly high speeds to investigate how the universe works and what it’s made of, quantum computing could help find hidden patterns in LHC data.

To move quantum computing forward in the near term, error mitigation will be key.

“The better the error mitigation, the more operations we can apply to our quantum computers, which means someday, hopefully soon, we’ll be able to make calculations on a quantum computer that we couldn’t make now,” said Nachman, who is especially interested in the potential for quantum computing in high-energy physics, such as further investigating the strong force that is responsible for binding nuclei together.

A cross-division team effort

The study, which started in late 2020, is the latest in a series of collaborations between Berkeley Lab’s Physics and Computational Research divisions. That kind of cross-division work is especially important in the research and development of quantum computing, Nachman said. A few years ago, a U.S. Department of Energy (DOE) funding call, part of a pilot program to see whether researchers could find ways of using quantum computing for high-energy physics, prompted Nachman and his colleague Christian Bauer, a Berkeley Lab theoretical physicist, to approach de Jong.

“We said, ‘We have this idea. We’re doing these calculations. What do you think?’” Nachman said. “We put together a proposal. It was funded. And now it’s a huge fraction of what we do.”

A lot of people across the board are interested in this technology, according to Nachman. “We have benefited greatly from collaboration with [de Jong’s] group, and I think it goes both ways,” he said.

De Jong agreed. “It has been fun learning each other’s physics languages and seeing that at the core we have similar requirements and algorithmic needs when it comes to quantum computing,” he said.

More information:

Miroslav Urbanek et al, Mitigating Depolarizing Noise on Quantum Computers with Noise-Estimation Circuits, Physical Review Letters (2021). DOI: 10.1103/PhysRevLett.127.270502

Provided by

Lawrence Berkeley National Laboratory

Citation:

Cutting through the noise to increase error mitigation in quantum computers (2022, February 24)