For nearly a decade, researchers at the Ludwig-Maximilians-Universität (LMU) München and the Technical University of Munich (TUM) have fostered a healthy collaboration between geophysicists and computer scientists to try to solve one of humanity’s most terrifying problems. Despite advances in recent decades, researchers are still largely unable to forecast when and where earthquakes will strike.

Under the right circumstances, a violent few minutes of shaking can portend an even greater threat: certain kinds of earthquakes under the ocean floor can rapidly displace massive amounts of water, creating colossal tsunamis that, in some cases, arrive only minutes after the shaking has stopped.

Extremely violent earthquakes do not always cause tsunamis, though, and relatively mild earthquakes can still trigger dangerous tsunami conditions. LMU geophysicists are determined to help protect vulnerable coastal populations by better understanding the fundamental dynamics that lead to these events, but they recognize that data from ocean, land and atmospheric sensors are insufficient to paint the whole picture. In 2014, the team therefore turned to modeling and simulation, using high-performance computing (HPC) resources at the Leibniz Supercomputing Centre (LRZ), one of the three centers that make up the Gauss Centre for Supercomputing (GCS).

“The growth of HPC hardware made this work possible in the first place,” said Prof. Dr. Alice-Agnes Gabriel, Professor at LMU and researcher on the project. “We need to understand the fundamentals of how megathrust fault systems work, because it will help us assess subduction zone hazards. It is unclear which geological faults can actually produce magnitude 8 and above earthquakes, and also which have the greatest risk for producing a tsunami.”

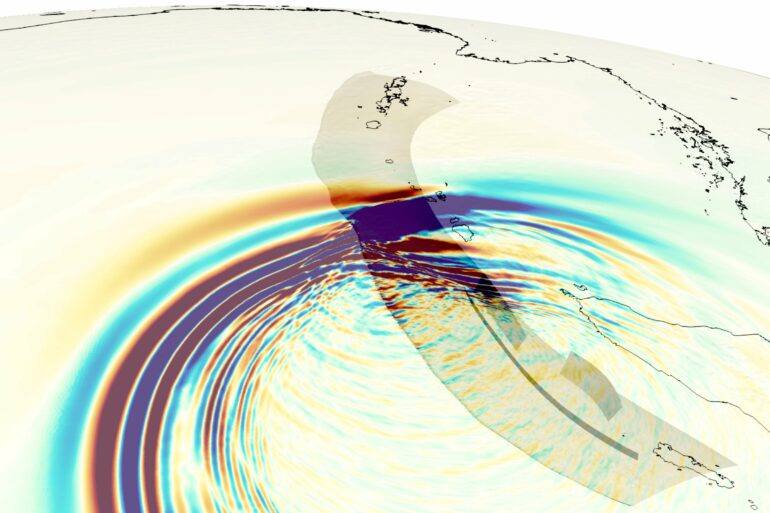

Through years of computational work at LRZ, the team has developed high-resolution simulations of past violent earthquake-tsunami events. By integrating many different kinds of observational data, LMU researchers have now identified three major characteristics that play a significant role in determining an earthquake’s potential to stoke a tsunami: stress along the fault, rock rigidity and the strength of sediment layers. The LMU team recently published its results in Nature Geoscience.

Lessons from the past

The team’s prior work modeled past earthquake-tsunami events to test whether simulations can recreate conditions that actually occurred. The team has devoted considerable effort to modeling the 2004 Sumatra-Andaman earthquake, one of the most violent natural disasters ever recorded, which combined a magnitude 9 earthquake with tsunami waves more than 30 meters high. The disaster killed almost a quarter of a million people and caused billions in economic damage.

Simulating such a fast-moving, complex event requires massive computational muscle. Researchers must divide the study area into a fine-grained computational grid, solve equations that determine the physical behavior of the water or ground (or both) in each grid cell, and then advance the calculation in very small time steps so they can observe how and when changes occur.
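To illustrate why fine grids make these simulations so expensive, here is a minimal sketch of explicit time-stepping on a grid. It is a toy example, not the team’s code: it solves the one-dimensional wave equation with an assumed domain length, wave speed and starting pulse (all arbitrary), and the helper names `second_difference` and `simulate_wave_1d` are hypothetical. The point it demonstrates is the stability (CFL) condition: the largest usable time step shrinks with the cell size, so refining the grid multiplies both the number of cells and the number of steps.

```python
import math


def second_difference(u, r2):
    """Return r2 * (u[i-1] - 2*u[i] + u[i+1]) for each cell, with fixed (zero) ends."""
    n = len(u)
    out = []
    for i in range(n):
        left = u[i - 1] if i > 0 else 0.0
        right = u[i + 1] if i < n - 1 else 0.0
        out.append(r2 * (left - 2.0 * u[i] + right))
    return out


def simulate_wave_1d(length=1000.0, n_cells=200, wave_speed=3000.0,
                     cfl=0.9, t_end=0.2, pulse_width=25.0):
    """Advance u_tt = c^2 u_xx on a uniform grid with an explicit leapfrog scheme.

    Returns (final field, number of time steps, time step). All parameter
    values are illustrative assumptions, not values from the study.
    """
    dx = length / n_cells
    # CFL condition: a wave may not cross more than one cell per step,
    # so the stable time step is proportional to the cell size.
    dt = cfl * dx / wave_speed
    n_steps = math.ceil(t_end / dt)
    r2 = (wave_speed * dt / dx) ** 2

    # Initial state: a Gaussian bump at rest in the middle of the domain.
    center = length / 2
    u_old = [math.exp(-(((i + 0.5) * dx - center) / pulse_width) ** 2)
             for i in range(n_cells)]
    # First step for zero initial velocity (second-order Taylor expansion).
    lap = second_difference(u_old, r2)
    u = [u_old[i] + 0.5 * lap[i] for i in range(n_cells)]

    # Leapfrog update: u_new = 2u - u_old + r2 * (discrete second derivative of u).
    for _ in range(n_steps - 1):
        lap = second_difference(u, r2)
        u_new = [2.0 * u[i] - u_old[i] + lap[i] for i in range(n_cells)]
        u_old, u = u, u_new
    return u, n_steps, dt


coarse_field, coarse_steps, coarse_dt = simulate_wave_1d(n_cells=200)
fine_field, fine_steps, fine_dt = simulate_wave_1d(n_cells=400)
print(coarse_steps, fine_steps)  # 134 267
```

Doubling the number of cells halves the stable time step, so the step count roughly doubles and the total work roughly quadruples in one dimension. In three dimensions, halving the cell size multiplies the cell count by eight and the step count by two, about a sixteen-fold increase in work. The production codes behind this research are far more sophisticated, but they face the same basic trade-off.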

Even at the cutting edge of computational modeling, the team needed the vast majority of SuperMUC Phase 2, LRZ’s flagship supercomputer at the time, to run a single high-resolution earthquake simulation in 2017. During this period, the group’s collaboration with computer scientists at TUM led to a “local time-stepping” method, which essentially lets the researchers concentrate the time-intensive calculations (many small time steps) on the regions that demand them, while advancing the rest of the domain with fewer, larger steps. With local time-stepping, the team ran its Sumatra-Andaman simulation in 14 hours rather than the 8 days it had taken before.
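The payoff of local time-stepping can be illustrated with a simple cost model. This is a hypothetical sketch, not the team’s implementation: it assumes each grid element has a known largest stable time step (small elements, such as those near the fault, need small steps), and it uses a clustered scheme in which each element advances with the largest power-of-two multiple of the smallest step that is still stable for it. The mesh, time units and function names are made up for illustration; the functions count element updates with and without local time-stepping.

```python
import math


def cluster_time_step(dt_stable, dt_min, rate=2):
    """Largest dt_min * rate**k that does not exceed an element's stable step."""
    cluster_dt = dt_min
    while cluster_dt * rate <= dt_stable:
        cluster_dt *= rate
    return cluster_dt


def global_updates(groups, t_end):
    """Element updates when every element advances with the smallest stable step."""
    dt_min = min(dt for dt, _ in groups)
    n_elements = sum(count for _, count in groups)
    return n_elements * math.ceil(t_end / dt_min)


def clustered_lts_updates(groups, t_end, rate=2):
    """Element updates when each element advances with its cluster's time step."""
    dt_min = min(dt for dt, _ in groups)
    total = 0
    for dt_stable, count in groups:
        cluster_dt = cluster_time_step(dt_stable, dt_min, rate)
        total += count * math.ceil(t_end / cluster_dt)
    return total


# Hypothetical mesh: (largest stable time step, number of elements), arbitrary time units.
# A few small elements force small steps; the many large ones could take bigger steps.
mesh = [(0.25, 1_000), (1.1, 10_000), (4.5, 100_000)]
t_end = 64.0

print(global_updates(mesh, t_end))         # 28416000
print(clustered_lts_updates(mesh, t_end))  # 2496000
```

With these made-up numbers, the clustered scheme needs roughly 11 times fewer element updates than forcing the whole mesh onto the smallest step. Rounding each element’s step down to a power-of-two multiple keeps every element stable and lets neighboring clusters synchronize at regular intervals; this cost model ignores that synchronization overhead, which real implementations must pay.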

The team continued to refine its code to run more efficiently, improving input/output methods and inter-node communication. Meanwhile, in 2018 LRZ installed its next-generation SuperMUC-NG system, significantly more powerful than its predecessor. The result? The team was able not only to combine the earthquake simulation itself with tectonic plate movements and the physical laws of how rocks break and slide, but also to realistically simulate tsunami wave growth and propagation. Gabriel pointed out that none of these simulations would be possible without access to HPC resources like those at LRZ.

“It is really hardware-aware optimization we are utilizing,” she said. “The computer science achievements are essential for us to advance computational geophysics and earthquake science, which is increasingly data-rich but remains model-poor. With further optimization and hardware advancements, we can perform as many of these scenarios to allow sensitivity analysis to figure out which initial conditions are most meaningful to understand large earthquakes.”

With its simulation data in hand, the researchers set out to understand which characteristics played the largest role in making this earthquake so destructive. By identifying stress, rock rigidity and sediment strength as the key factors determining both an earthquake’s strength and its propensity to cause a large tsunami, the team has helped bring HPC into the playbook that scientists and government officials use to track, mitigate and prepare for earthquake and tsunami disasters.

Urgent computing in the HPC era

Gabriel indicated that the team’s computational advances fall squarely in line with an emerging view within the HPC community that these world-class resources need to be available in a “rapid response” fashion during disasters or emergencies. Thanks to its long-running collaboration with LRZ, the team was able to quickly model the 2018 Palu earthquake and tsunami on the Indonesian island of Sulawesi, which caused more than 2,000 fatalities, and to provide insights into what happened.

“We need to understand the fundamentals of how submerged fault systems work, as it will help us assess their earthquake as well as cascading secondary hazards. Specifically, the deadly consequences of the Palu earthquake came as a complete surprise to scientists,” Gabriel said. “We have to have physics-based HPC models for rapid response computing, so we can quickly respond after hazardous events. When we modeled the Palu earthquake, we had the first data-fused models ready to try and explain what happened in a couple of weeks. If scientists know which geological structures may cause geohazards, we could trust some of these models to inform hazard assessment and operational hazard mitigation.”

Beyond running many permutations of the same scenario with slightly different inputs, the team is also exploring new artificial intelligence and machine learning methods to comb through the massive amounts of data its simulations generate and filter out less relevant, potentially distracting data.
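Running many permutations of a scenario with slightly different inputs is what makes the sensitivity analysis Gabriel describes possible. One of the simplest variants is a one-at-a-time analysis, sketched below. This is a generic technique shown for illustration, not the team’s method: `toy_model` and its inputs `x1`, `x2` and `x3` are hypothetical placeholders standing in for an expensive simulation and its initial conditions, not quantities from the study.

```python
def one_at_a_time_sensitivity(model, baseline, rel_step=0.1):
    """Estimate each input's normalized effect on a model output.

    Each input is nudged up and down by rel_step (relative) while the others
    stay at their baseline values. The central difference of the output,
    divided by the baseline output, approximates d(ln f)/d(ln x).
    Results are sorted from most to least influential.
    """
    base_out = model(baseline)
    effects = {}
    for name, value in baseline.items():
        up = {**baseline, name: value * (1 + rel_step)}
        down = {**baseline, name: value * (1 - rel_step)}
        effects[name] = (model(up) - model(down)) / (2 * rel_step * base_out)
    return dict(sorted(effects.items(), key=lambda kv: abs(kv[1]), reverse=True))


def toy_model(p):
    """Hypothetical stand-in for the output of an expensive simulation."""
    return p["x1"] ** 2 * p["x2"] + 0.1 * p["x3"]


baseline = {"x1": 1.0, "x2": 2.0, "x3": 3.0}
ranking = one_at_a_time_sensitivity(toy_model, baseline)
print(list(ranking))  # ['x1', 'x2', 'x3']
```

Each input costs two extra model runs, which is cheap for a toy function but means two extra full simulations in practice; that cost is why faster codes and hardware matter for this kind of study. One-at-a-time perturbations also miss interactions between inputs, which more elaborate designs (for example, varying several inputs at once) are meant to capture.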

The team is also participating in the ChEESE project, an initiative aimed at preparing mature HPC codes for exascale systems: next-generation machines capable of one billion billion calculations per second, more than twice the speed of Japan’s Fugaku, the world’s most powerful supercomputer at the time.

More information:

Thomas Ulrich et al., Stress, rigidity and sediment strength control megathrust earthquake and tsunami dynamics, Nature Geoscience (2022). DOI: 10.1038/s41561-021-00863-5

Provided by

Gauss Centre for Supercomputing

Citation:

Collaboration helps geophysicists better understand severe earthquake-tsunami risks (2022, April 7)