It doesn’t take much to get ChatGPT to make a factual mistake. My son is doing a report on U.S. presidents, so I figured I’d help him out by looking up a few biographies. I tried asking for a list of books about Abraham Lincoln and it did a pretty good job:

A reasonable list of books about Lincoln.

Screen capture by Jonathan May, CC BY-ND

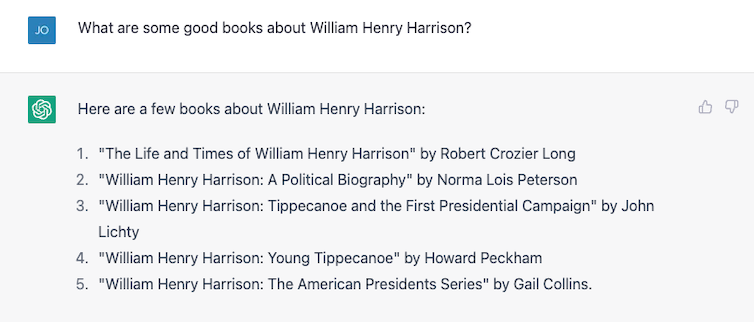

Number 4 isn’t right. Garry Wills famously wrote “Lincoln at Gettysburg,” and Lincoln himself wrote the Emancipation Proclamation, of course, but it’s not a bad start. Then I tried something harder, asking instead about the much more obscure William Henry Harrison, and it gamely provided a list, nearly all of which was wrong.

Books about Harrison, fewer than half of which are correct.

Screen capture by Jonathan May, CC BY-ND

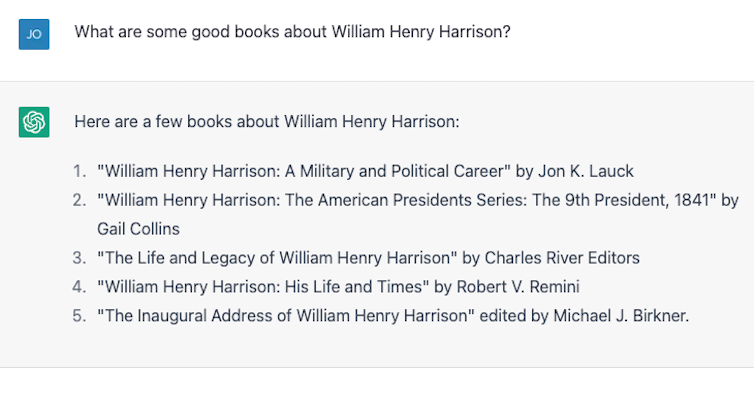

Numbers 4 and 5 are correct; the rest don’t exist or are not authored by those people. I repeated the exact same exercise and got slightly different results:

More books about Harrison, still mostly nonexistent.

Screen capture by Jonathan May, CC BY-ND

This time numbers 2 and 3 are correct, and the other three are not actual books or were not written by those authors. Number 4, “William Henry Harrison: His Life and Times,” is a real book, but it’s by James A. Green, not by Robert Remini, a well-known historian of the Jacksonian age.

I called out the error, and ChatGPT eagerly corrected itself, then confidently told me the book was in fact written by Gail Collins (who wrote a different Harrison biography), and went on to say more about the book and about her. I finally revealed the truth and the machine was happy to run with my correction. Then I lied absurdly, saying that during their first hundred days presidents have to write a biography of some former president, and ChatGPT called me out on it. I then lied subtly, incorrectly attributing authorship of the Harrison biography to historian and writer Paul C. Nagel, and it bought my lie.

When I asked ChatGPT if it was sure I was not lying, it claimed that it’s just an “AI language model” and doesn’t have the ability to verify accuracy. However, it modified that claim by saying, “I can only provide information based on the training data I have been provided, and it appears that the book ‘William Henry Harrison: His Life and Times’ was written by Paul C. Nagel and published in 1977.”

This is not true.

Words, not facts

It may seem from this interaction that ChatGPT was given a library of facts, including incorrect claims about authors and books. After all, ChatGPT’s maker, OpenAI, claims it trained the chatbot on “vast amounts of data from the internet written by humans.”

However, it was almost certainly not given the names of a bunch of made-up books about one of the most mediocre presidents. In a way, though, this false information is indeed based on its training…