February 18, 2021


Over the past few years, computer scientists have created numerous computational techniques that can automatically generate text, images and other types of data. These models are particularly useful for producing data or creative works that are demanding and time-consuming for humans to create manually.

Researchers at Dalian University of Technology in China and City University of Hong Kong have recently created an innovative framework that can automatically generate manga comic books, which are typically designed by highly skilled professional artists and require extensive work. Their framework, presented in a paper pre-published on arXiv, creates comic books by extracting data from TV series, movies, animations or other videos.

“We propose a fully automatic system for generating comic books from videos without any human intervention,” the researchers wrote in their paper. “Given an input video along with its subtitles, our approach first extracts informative keyframes by analyzing the subtitles and stylizes keyframes into comic-style images.”
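To make the subtitle-driven step more concrete, here is a minimal sketch of how subtitle timing alone could suggest candidate keyframe positions. It is not the researchers' selection method, which the article does not detail. It assumes SRT-style subtitles and, as a simplifying assumption, takes the midpoint of each subtitle interval as a candidate timestamp. The names `TIMING`, `to_seconds` and `candidate_keyframe_times` are hypothetical.

```python
import re

# Matches an SRT timing line such as "00:01:02,500 --> 00:01:05,000".
TIMING = re.compile(
    r"(\d+):(\d{2}):(\d{2})[,.](\d{3})\s*-->\s*(\d+):(\d{2}):(\d{2})[,.](\d{3})"
)


def to_seconds(hours, minutes, seconds, millis):
    """Convert the four captured timestamp fields to seconds."""
    return int(hours) * 3600 + int(minutes) * 60 + int(seconds) + int(millis) / 1000


def candidate_keyframe_times(srt_text):
    """Return one candidate timestamp (in seconds) per subtitle entry.

    Assumption for illustration only: the midpoint of the interval during
    which a subtitle is shown is a reasonable place to grab a frame.
    """
    times = []
    for match in TIMING.finditer(srt_text):
        fields = match.groups()
        start = to_seconds(*fields[:4])
        end = to_seconds(*fields[4:])
        times.append((start + end) / 2)
    return times


if __name__ == "__main__":
    sample = (
        "1\n00:00:01,000 --> 00:00:03,000\nHello there.\n\n"
        "2\n00:00:10,500 --> 00:00:11,500\nGoodbye.\n"
    )
    print(candidate_keyframe_times(sample))
```

A real system would still need to decode the video at those timestamps and decide which candidates are informative enough to keep.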

After extracting keyframes from videos and turning them into comic-style images, the system devised by the researchers uses a multi-page layout framework to spread the images across several pages and create visually appealing layouts that reflect the relationship between the images.

Rather than always using the same type of speech balloon, as most other comic generation frameworks do, the framework created by the researchers generates different types of balloons that reflect the emotion conveyed by the character’s words. To do this, it first tries to grasp the emotion conveyed by each line of dialogue by analyzing both the video’s audio track and the corresponding subtitles.

The shape of the speech balloons created by the model and the size of the words contained in them vary based on the emotions conveyed by the characters. According to the researchers, this improves the comic reading experience, producing more engaging layouts that reflect the content of the dialogue between characters.
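The idea of tying balloon shape and text size to emotion can be illustrated with a simple lookup. The sketch below is only an illustration: the emotion labels, shape names and font scales are invented for the example and are not taken from the paper, and `BalloonStyle`, `STYLES` and `balloon_for` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BalloonStyle:
    shape: str         # outline used to draw the balloon
    font_scale: float  # multiplier applied to the base lettering size


# Hypothetical mapping from a detected emotion label to a balloon style.
STYLES = {
    "neutral": BalloonStyle("oval", 1.0),
    "angry": BalloonStyle("spiky", 1.4),
    "sad": BalloonStyle("wavy", 0.9),
}


def balloon_for(emotion):
    """Pick a style for the given emotion, falling back to neutral."""
    return STYLES.get(emotion, STYLES["neutral"])


if __name__ == "__main__":
    for label in ("angry", "sad", "confused"):
        print(label, balloon_for(label))
```

Falling back to a neutral style for unrecognized labels keeps the lettering readable even when the emotion classifier is unsure.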

The system places each speech balloon next to the character who is speaking. To do this, the model first detects the speakers in the video, then positions near each one a balloon whose style matches the emotion that speaker expresses.
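As a rough illustration of placing a balloon near a detected speaker, the sketch below positions a balloon relative to a face bounding box inside a panel. The placement rule (try above the face, otherwise go below, and clamp to the panel) is an assumption made for this example rather than the rule used in the paper, and `place_balloon` and its parameters are hypothetical.

```python
def place_balloon(panel_w, panel_h, face, balloon_w, balloon_h, gap=5):
    """Return the (x, y) top-left corner for a balloon near a speaker.

    `face` is (x, y, width, height) with the origin at the panel's
    top-left corner. Assumed rule: center the balloon horizontally on the
    face, prefer the space above it, and fall back to below if it would
    not fit.
    """
    fx, fy, fw, fh = face

    # Center on the face, then clamp so the balloon stays inside the panel.
    x = fx + fw / 2 - balloon_w / 2
    x = max(0, min(x, panel_w - balloon_w))

    # Prefer above the face; if that would leave the panel, place it below.
    y = fy - gap - balloon_h
    if y < 0:
        y = min(fy + fh + gap, panel_h - balloon_h)
    return x, y


if __name__ == "__main__":
    print(place_balloon(200, 100, (80, 50, 40, 40), 60, 30))
    print(place_balloon(200, 100, (0, 0, 40, 40), 60, 30))
```

A production layout engine would also need to avoid covering other characters and to keep balloons in reading order, which this sketch ignores.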

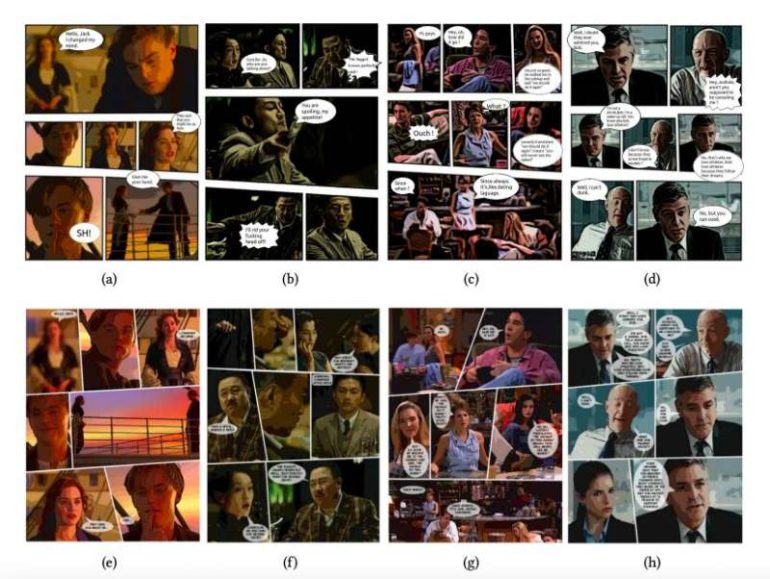

The researchers evaluated their system in a series of experiments, assessing its individual modules and comparing the quality of the comics it produces with those generated by other existing state-of-the-art techniques for translating videos into comic books. The system was used to generate comics based on 16 video clips extracted from four movies and series: “Titanic,” “The Message,” “Friends” and “Up in the Air.” These video clips were between two and six minutes long.

The team asked a group of people to evaluate the overall quality of the comics produced by their model, in comparison with those produced by an alternative comic generation system. The vast majority of users who took part in this study said that they preferred the layouts created by the researchers’ model to those created by the previously developed system.

“Our experiments demonstrate that our system can synthesize more expressive and engaging comics in comparison to a state-of-the-art comic generation system,” the researchers wrote in their paper. “Although our system has been shown to achieve promising results, it is still subject to several limitations. For example, the keyframe selection is not accurate enough. In some cases, the selected keyframes are similar to each other, which would certainly introduce redundancy into generated comics.”

Once it is perfected, the comic generation system developed by this team of researchers could be used to automatically create engaging comic books based on movies, TV series or other video content. In their next studies, the researchers plan to develop an alternative module for keyframe selection, as this could improve the quality of the layouts produced by their system and reduce keyframe redundancy.

“What’s more, inspired by many existing methods that can generate image sequences given a story with multiple sentences, it is possible to produce comic books from textual stories and we are interested to extend our method to leverage textual information to help generate manga,” the researchers conclude in their paper.

More information:

Automatic comic generation with stylistic multi-page layouts and emotion-driven text balloon generation. arXiv:2101.11111 [cs.CV]. arxiv.org/abs/2101.11111

2021 Science X Network

Citation: A system that automatically generates comic books from movies and other videos (2021, February 18) retrieved 19 February 2021 from https://techxplore.com/news/2021-02-automatically-comic-movies-videos.html
