I research the intersection of artificial intelligence, natural language processing and human reasoning as the director of the Advancing Human and Machine Reasoning lab at the University of South Florida. I am also commercializing this research in an AI startup that provides a vulnerability scanner for language models.

From my vantage point, I observed significant developments in AI language models in 2024, in both research and industry.

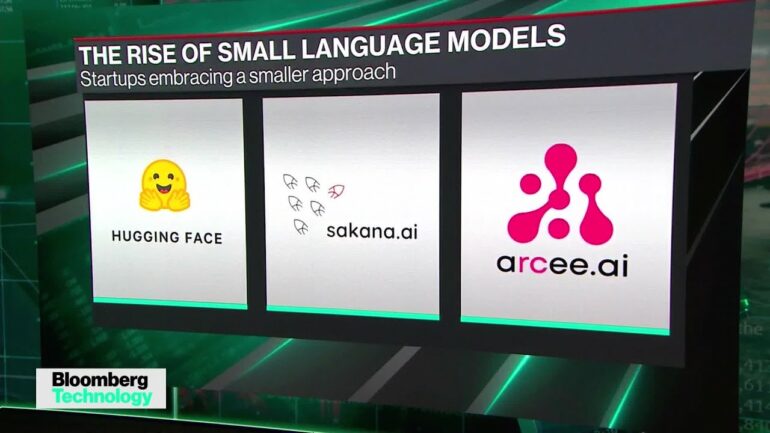

Perhaps the most exciting of these are the capabilities of smaller language models, support for addressing AI hallucination, and frameworks for developing AI agents.

Small AIs make a splash

At the heart of commercially available generative AI products like ChatGPT are large language models, or LLMs, which are trained on vast amounts of text and produce convincing humanlike language. Their size is generally measured in parameters, the numerical values a model derives from its training data. The largest models, like those from the major AI companies, have hundreds of billions of parameters.

There is an iterative interaction between large language models and smaller language models, which seems to have accelerated in 2024.

First, organizations with the most computational resources experiment with and train ever larger and more powerful language models. Those efforts yield new large language model capabilities, benchmarks, training sets and training or prompting tricks. In turn, these are used to make smaller language models – in the range of 3 billion parameters or less – which can run on more affordable computer setups, require less energy and memory to train, and can be fine-tuned with less data.

It's no surprise, then, that developers have released a host of powerful smaller language models, although the definition of small keeps changing. Phi-3 and Phi-4 from Microsoft, Llama-3.2 1B and 3B, and Qwen2-VL-2B are just a few examples.

These smaller language models can be specialized for more specific tasks, such as rapidly summarizing a set of comments or fact-checking text against a specific reference. They can work with their larger cousins to produce increasingly powerful hybrid systems.


Wider access

Increased access to highly capable language models, large and small, can be a mixed blessing. Because 2024 brought many consequential elections around the world, the temptation to misuse language models was high.

Language models can give malicious users the ability to generate social media posts that deceptively influence public opinion, and this threat drew a great deal of concern throughout the election year.

And indeed, a robocall faking President Joe Biden’s voice asked New Hampshire Democratic primary voters to stay home. OpenAI had to intervene to disrupt over 20 operations and…