As the number of devices connected to the internet continues to increase, so does the amount of redundant data transfer between different sensory terminals and computing units. Computing approaches that intervene in the vicinity of or inside sensory networks could help to process this growing amount of data more efficiently, decreasing power consumption and potentially reducing the transfer of redundant data between sensing and processing units.

Researchers at Hong Kong Polytechnic University have recently carried out a study outlining the concept of near-sensor and in-sensor computing. These are two computing approaches that enable the partial transfer of computation tasks to sensory terminals, which could reduce power consumption and increase the performance of algorithms.

“The number of sensory nodes on the Internet of Things continues to increase rapidly,” Yang Chai, one of the researchers who carried out the study, told TechXplore. “By 2032, the number of sensors will be up to 45 trillion, and the generated information from sensory nodes is equivalent to 10^20 bit/second. It is thus becoming necessary to shift part of the computation tasks from cloud computing centers to edge devices in order to reduce energy consumption and time delay, saving communication bandwidth and enhancing data security and privacy.”

Visual sensors can gather large amounts of data and they thus generally require significant computing power. In one of their previous studies, Chai and his colleagues tried to perform information processing at the level of sensory terminals and used an optoelectronic resistive switching memory array to demonstrate that preprocessing images gathered by sensors could improve the performance of computational methods for image recognition.

“After this study, I proposed that in-sensor computing paradigms that require new hardware platforms can achieve new functionalities, high performance and energy efficiency using the same or less power,” Chai said. “With the rapid development in this emerging area, it has become necessary to summarize the existing achievements and provide perspectives for future development. Our recent perspective paper in Nature Electronics offers a timely overview of the challenges and opportunities in this field.”

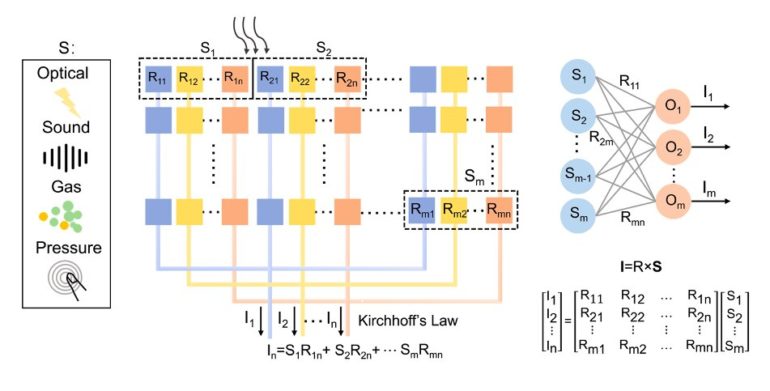

Because sensors and computing units serve different functions, they are generally made of different materials and have distinct device structures, designs and processing systems. In conventional sensory computing architectures, sensors and computing units are physically separated, often by a large distance. In near-sensor and in-sensor computing architectures, the distance between sensory and computing units is significantly reduced or eliminated.

In near-sensor computing systems, processing units or accelerators are placed beside sensors. This means that the processing units or accelerators execute specific operations at sensor endpoints, which can increase the system’s overall performance and minimize the transfer of redundant data.

In in-sensor computing architectures, on the other hand, individual sensors or multiple connected sensors directly process the information they collect. This eliminates the need for a processing unit or accelerator, merging sensing and computing functions together.
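The idea of a processing unit beside the sensor cutting down redundant transfers can be illustrated with a minimal sketch. This is not the researchers' method: the function name `near_sensor_filter`, the change threshold and the sample readings are all assumptions made for illustration. The filter forwards a reading only when it differs noticeably from the last value that was sent.

```python
from typing import Iterable, List, Optional


def near_sensor_filter(readings: Iterable[float], threshold: float = 0.5) -> List[float]:
    """Hypothetical near-sensor step: forward a reading only if it differs
    from the last forwarded value by more than `threshold`."""
    forwarded: List[float] = []
    last: Optional[float] = None
    for value in readings:
        # The first reading is always sent; later ones only on a real change.
        if last is None or abs(value - last) > threshold:
            forwarded.append(value)
            last = value
    return forwarded


# Illustrative raw readings (assumed values, e.g. a slowly varying signal).
raw = [20.0, 20.1, 20.0, 20.2, 23.0, 23.1, 23.0, 19.0]
sent = near_sensor_filter(raw, threshold=0.5)
print(f"raw samples: {len(raw)}, transferred: {len(sent)} -> {sent}")
```

In this toy run only three of the eight samples leave the sensor endpoint. A conventional architecture would transfer all eight and leave the filtering to a remote computing unit.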

“A big challenge for near-sensor computing is the integration between sensory and computing units,” Chai explained. “For example, the computing unit already adopts very advanced technology nodes, while most of the sensory devices can perform their functions well based on large pitch size technology. Although the monolithic 3-D integration provides a way with high density and short distance, its complicated processing and heat dissipation still present great challenges.”

While in-sensor computing architectures have so far proved to be promising approaches for combining computing and sensing capabilities, they are typically only applicable in specific scenarios. In addition, they can only be realized using innovative materials and device structures that are still at early stages of development.

“Near-/in-sensor computing is an interdisciplinary field of research, covering materials, devices, circuits, architectures, algorithms and integration technologies,” Chai said. “These architectures are complex, because they need to handle a large amount and various types of signals in different scenarios. The successful deployment of near-/in-sensor computing requires the co-development and co-optimization of sensors, devices, integration technologies and algorithms.”

In their recent paper published in Nature Electronics, Chai and his colleagues provide clear definitions for near-sensor and in-sensor computing. They also classify sensory computing into low-level processing (i.e., the preliminary and selective extraction of useful data from a large volume of raw data, either by suppressing unwanted noise or distortion or by enhancing features for further processing) and high-level processing (i.e., abstract representation involving the cognitive processes that identify what an input signal is or where it is).
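The split between low-level and high-level processing can be made concrete with a small sketch. It is a toy example, not something taken from the paper: the median filter, the detection threshold, the function names and the one-dimensional "image row" are all assumptions. The low-level step suppresses noise, and the high-level step then answers "what" (is there an object?) and "where" (at which index?).

```python
from typing import List, Tuple


def low_level(signal: List[float]) -> List[float]:
    """Low-level step (assumed): 3-sample median filter that suppresses
    isolated noise spikes; the two edge samples are left unchanged."""
    out = list(signal)
    for i in range(1, len(signal) - 1):
        out[i] = sorted(signal[i - 1:i + 2])[1]
    return out


def high_level(signal: List[float], threshold: float = 0.5) -> Tuple[str, int]:
    """High-level step (assumed): report 'what' (object or empty) and
    'where' (index of the first peak, or -1 if nothing is detected)."""
    if not signal:
        return ("empty", -1)
    peak = max(range(len(signal)), key=lambda i: signal[i])
    if signal[peak] >= threshold:
        return ("object", peak)
    return ("empty", -1)


# Illustrative row of pixel intensities: a noise spike at index 1
# and a genuine bright region around indices 4 to 6.
raw = [0.0, 0.9, 0.0, 0.1, 0.8, 0.9, 0.8, 0.1]
clean = low_level(raw)
print(high_level(raw))    # decision on the unfiltered signal
print(high_level(clean))  # decision after low-level preprocessing
```

Without the low-level step the detector locks onto the isolated spike at index 1. After filtering it reports the start of the real bright region at index 4, which mirrors the article's point that preprocessing at the sensor can improve later recognition.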

In addition to providing a reliable definition of near-sensor and in-sensor computing, the researchers propose possible solutions for the realization of integrated sensing and processing units. In the future, their work could inspire further studies aimed at realizing these architectures or their hardware components using advanced manufacturing technologies.

So far, the work by Chai and his colleagues has primarily focused on vision sensors. However, near-sensor and in-sensor computing approaches could also integrate other types of sensors, such as those that detect acoustic, pressure, strain, chemical or even biological signals.

“We are now interested in extending the strategies we discussed to different application scenarios,” Chai said. “In addition, most existing reports are restricted to a relatively small scale and still far from real applications. In the future, we will explore opportunities to scale up the design by increasing the number of devices and connecting with peripheral circuits to construct a system.”

More information:

Near-sensor and in-sensor computing. Nature Electronics (2020). DOI: 10.1038/s41928-020-00501-9

Optoelectronic resistive random access memory for neuromorphic vision sensors. Nature Nanotechnology (2019). DOI: 10.1038/s41565-019-0501-3

In-sensor computing for machine vision (2020). DOI: 10.1038/d41586-020-00592-6

© 2020 Science X Network

Citation: Exploring the potential of near-sensor and in-sensor computing systems (2020, December 21), retrieved 21 December 2020 from https://techxplore.com/news/2020-12-exploring-potential-near-sensor-in-sensor.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.