Researchers at the University of Calgary, Adobe Research and the University of Colorado Boulder have recently created an augmented reality (AR) interface that can be used to produce responsive sketches, graphics and visualizations. Their work, initially pre-published on arXiv, won the Best Paper Honorable Mention and Best Demo Honorable Mention awards at the ACM Symposium on User Interface Software and Technology (UIST’20).

“Our project originally started from the simple motivation of enabling users to visualize a real-world phenomenon through interactive sketching in AR,” Ryo Suzuki, one of the researchers who carried out the study, told TechXplore. “This motivation came from our observations of physics classroom education, where experiments conducted by the teacher are often an integral part of learning physics.”

Explaining physical phenomena to students can be quite challenging, because fully understanding these phenomena requires picturing how they happen and what they look like, rather than merely learning the associated theory. Interactive tools that allow physics teachers to explain concepts in a dynamic and engaging way could thus be of great value, as they could improve students' understanding of the topics covered in their lessons.

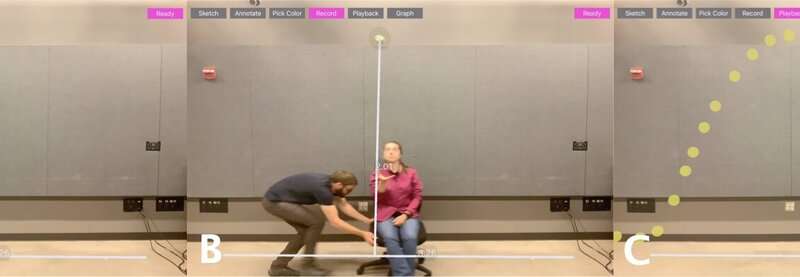

RealitySketch, the platform created by Suzuki and his colleagues, allows users to produce dynamic and responsive graphics that can be visualized on top of a board, wall or other objects in the real world. This allows students to gain a better idea of the physical phenomena that a teacher is trying to explain to them, by directly observing them in motion.

“In the past, creating such visualizations was a time-consuming process, requiring a separate step of video editing or post-production,” Suzuki explained. “In contrast, we wondered: what if users could directly sketch dynamic visualizations onto the real world in real time, so that they can for instance dynamically animate and visualize the movements of physical objects?”

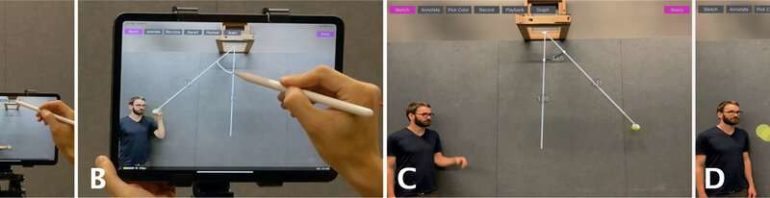

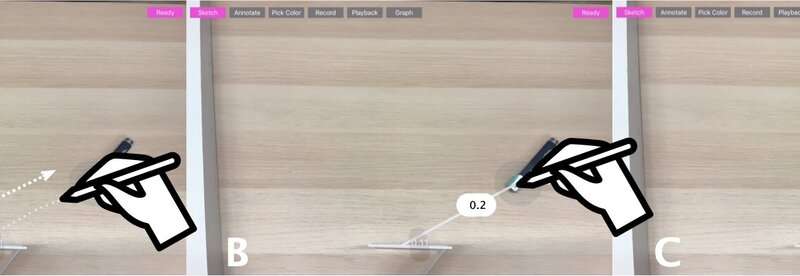

RealitySketch is based on Apple ARKit, a mobile AR platform that allows it to embed dynamic sketches into real-world environments. Initially, users produce simple sketches by drawing on a touchscreen using their fingers or a digital pen. The elements they sketch are then overlaid onto a camera view of the real world.

“The most unique feature of RealitySketch is that it supports real-time animation of sketched elements based on real world motion,” Suzuki said.

While there are countless AR-based sketching interfaces on the market today, most solutions developed so far focus on embedding static graphics into the real world. Examples of these tools include Pronto, SymbiosisSketch, and PintAR, as well as commercial platforms, such as Google’s Just a Line or DoodleLens. Instead of only allowing users to create static sketches, RealitySketch enables the embedding of dynamic and interactive graphics that can bind to real objects in a user’s surroundings and respond to their movements.

“RealitySketch integrates object tracking and AR-based visualization,” Suzuki said. “First, it tracks physical objects with computer vision. When a user taps on an object (e.g., a ball of a pendulum) on the iPad, the system starts tracking the object based on its color. All of the sketched elements are bound to these tracked objects, so that when the physical objects move (e.g., when the user grabs, moves, pushes, throws, etc. an object), the sketched elements also animate based on the motion they are expected to exhibit.”
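The idea Suzuki describes, tracking an object by its color and binding a sketched element to it, can be illustrated with a minimal Python sketch. This is not RealitySketch's code (the system runs on ARKit on an iPad); the function names `track_by_color`, `pendulum_angle` and `make_frame`, the color tolerance, and the tiny synthetic frames are all assumptions made for illustration. A frame here is simply a grid of RGB tuples, the "tapped" color is red, and the bound element is an angle between a fixed pivot and the tracked pendulum bob.

```python
import math


def track_by_color(frame, target_rgb, tolerance=40):
    """Return the (x, y) centroid of pixels close to target_rgb, or None."""
    sum_x = sum_y = count = 0
    for y, row in enumerate(frame):
        for x, (r, g, b) in enumerate(row):
            # Manhattan distance in RGB space as a simple similarity test.
            distance = (abs(r - target_rgb[0])
                        + abs(g - target_rgb[1])
                        + abs(b - target_rgb[2]))
            if distance <= tolerance:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None  # the object is not visible in this frame
    return (sum_x / count, sum_y / count)


def pendulum_angle(pivot, bob):
    """Angle in degrees between the pivot-to-bob line and straight down."""
    dx = bob[0] - pivot[0]
    dy = bob[1] - pivot[1]  # image y grows downward
    return math.degrees(math.atan2(dx, dy))


def make_frame(width, height, bob_center, bob_rgb, background=(255, 255, 255)):
    """Build a small synthetic frame containing a 3x3 colored 'bob'."""
    frame = [[background for _ in range(width)] for _ in range(height)]
    cx, cy = bob_center
    for y in range(cy - 1, cy + 2):
        for x in range(cx - 1, cx + 2):
            frame[y][x] = bob_rgb
    return frame


RED = (220, 30, 30)   # hypothetical color sampled where the user tapped
PIVOT = (10, 0)       # hypothetical fixed point the sketched angle is anchored to

# Two frames: the bob hanging straight down, then swung to the right.
for bob_center in [(10, 10), (15, 5)]:
    frame = make_frame(20, 20, bob_center, RED)
    bob = track_by_color(frame, RED)
    # The "sketched" angle label is recomputed from the tracked position,
    # so it updates whenever the physical object moves.
    print(bob, round(pendulum_angle(PIVOT, bob), 1))
```

Because the angle is recomputed from the tracked position on every frame, the displayed value follows the object as it moves; a real system would apply the same principle to live camera frames and rendered AR graphics.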

Suzuki and his colleagues evaluated the interface they created in a study that involved teachers and students at the University of Calgary. The participants they recruited were very pleased with RealitySketch and seemed particularly excited about its potential for augmenting physics experiments.

Now that so many students are attending lessons online due to the COVID-19 pandemic, the interface created by Suzuki and his colleagues could prove particularly valuable. In fact, RealitySketch could make remote teaching more engaging, for instance by allowing teachers to record videos of dynamic physics experiments and share them with their students, so that they can get a better sense of the concepts and phenomena described during lectures.

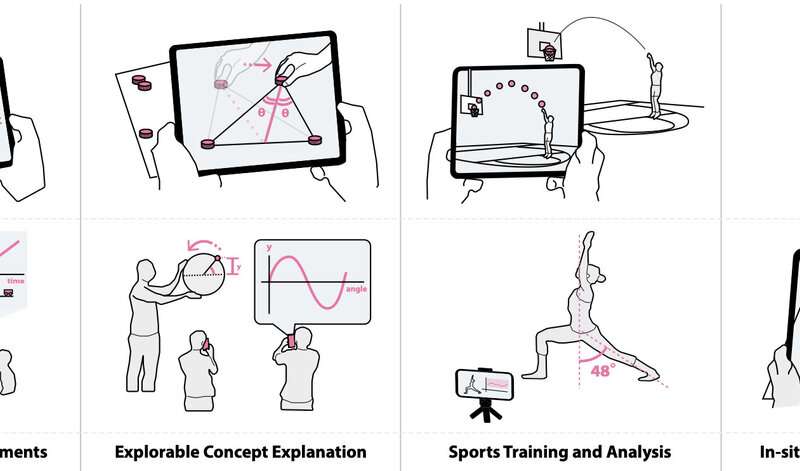

“RealitySketch also shows potential for broader application domains beyond teaching,” Suzuki said. “While we originally focused on math and physics concept explanations, our users were excited about other application scenarios, such as sports training, design explorations, and tangible user interfaces.”

In addition to testing their platform in a classroom setting, Suzuki and his colleagues demonstrated its potential for a wide range of applications unrelated to education. For instance, they showed that sports trainers could use it to produce real-time visualizations and analyses of fitness-related data, such as showing basketball players the ball trajectories that would allow them to score, or to make yoga sessions more engaging by visualizing specific body postures.

“We are interested in further developing this project in the future,” Suzuki said. “Since it is the result of a collaboration between Adobe Research (Rubaiat Habib Kazi, Li-Yi Wei, Stephen DiVerdi, Wilmot Li) and the University of Calgary (me) / University of Colorado Boulder (my Ph.D. advisor Prof. Daniel Leithinger), we believe that this work will inspire more research and products that support immersive creativity, which would fully realize the potential of AR as a dynamic computational medium.”

More information: Ryo Suzuki et al. RealitySketch, Proceedings of the 33rd Annual ACM Symposium on User Interface Software and Technology (2020). DOI: 10.1145/3379337.3415892

© 2020 Science X Network

Citation: RealitySketch: An AR interface to create responsive sketches (2020, December 3), retrieved 5 December 2020 from https://techxplore.com/news/2020-12-realitysketch-ar-interface-responsive.html
