Elon Musk recently announced that the next Neuralink project will be a “Blindsight” cortical implant to restore vision: “Resolution will be low at first, like early Nintendo graphics, but ultimately may exceed normal human vision.”

Unfortunately, this claim rests on the fallacy that neurons in the brain are like pixels on a screen. It’s not surprising that engineers often assume that “more pixels equals better vision.” After all, that is how monitors and phone screens work.

In our newly published research, we created a computational model of human vision to simulate the kind of vision an extremely high-resolution cortical implant might provide. A movie of a cat rendered at 45,000 pixels is sharp and clear. A movie generated by a simplified version of our model, simulating 45,000 cortical electrodes that each stimulate a single neuron, still shows a recognizable cat, but most of the scene's details are lost.

The movie on the left is generated using 45,000 pixels. The one on the right is generated using a simulation of a cortical prosthesis with 45,000 electrodes, each of which stimulates a single neuron.

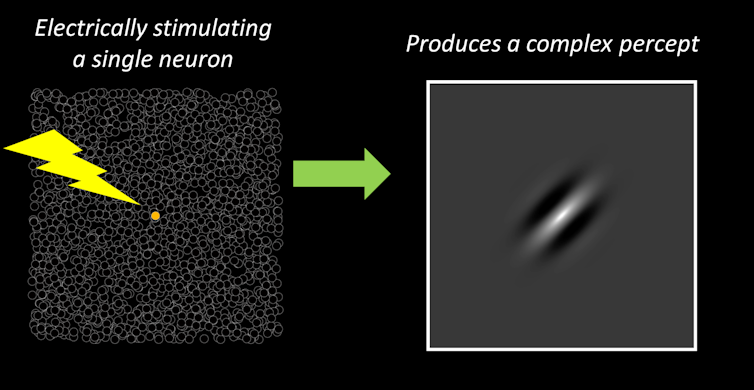
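To make the pixel-versus-electrode comparison concrete, here is a toy one-dimensional sketch. It is not the model used in the research described here: it simply assumes that each electrode sits at one pixel location, is driven by the scene brightness there, and produces a Gaussian blob whose width `sigma` stands in for a receptive field. The function names and parameter values are hypothetical. With exactly as many electrodes as pixels, two nearby bright points stay distinct when the blobs are narrow, but merge into a single blob once the gap between them is smaller than about twice `sigma`.

```python
import math

def gaussian(x: float, center: float, sigma: float) -> float:
    """Unnormalized Gaussian blob: 1.0 at its center, falling off with distance."""
    return math.exp(-((x - center) ** 2) / (2 * sigma ** 2))

def render_pixels(scene: list[float]) -> list[float]:
    """A screen: each pixel shows exactly the scene value at its location."""
    return list(scene)

def render_electrodes(scene: list[float], sigma: float) -> list[float]:
    """Hypothetical prosthesis: one electrode per pixel location, each driven by
    the scene value there and each producing a Gaussian blob of width sigma
    (in pixel units). The percept is the sum of all the blobs."""
    n = len(scene)
    percept = [0.0] * n
    for i, drive in enumerate(scene):
        if drive == 0:
            continue
        for x in range(n):
            percept[x] += drive * gaussian(x, i, sigma)
    return percept

def count_peaks(signal: list[float]) -> int:
    """Count strict local maxima, i.e. how many separate dots are visible."""
    return sum(
        1
        for i in range(1, len(signal) - 1)
        if signal[i] > signal[i - 1] and signal[i] > signal[i + 1]
    )

# Two bright points 4 pixels apart on a 1D strip of 40 pixels.
scene = [0.0] * 40
scene[18] = scene[22] = 1.0

print(count_peaks(render_pixels(scene)))                 # 2: two separate dots
print(count_peaks(render_electrodes(scene, sigma=1.0)))  # 2: narrow blobs stay apart
print(count_peaks(render_electrodes(scene, sigma=4.0)))  # 1: wide blobs merge
```

In this sketch, adding more electrodes would not help: the finest detail that survives is set by `sigma`, the blob size, not by the electrode count.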

The movie generated by electrodes is so blurry because neurons in the human visual cortex do not represent tiny dots or pixels. Instead, each neuron has a particular receptive field: the location and pattern a visual stimulus must have in order to make that neuron fire. Electrically stimulating a single neuron produces a blob whose appearance is determined by that neuron’s receptive field. Even the tiniest electrode, one that stimulates a single neuron, will produce a blob roughly the width of your pinkie held at arm’s length.

The left image represents a single neuron being electrically stimulated. The right side represents what a patient might see. The size and shape of the blob depend on the unique receptive field of that stimulated neuron.

Ione Fine and Geoffrey Boynton/University of Washington

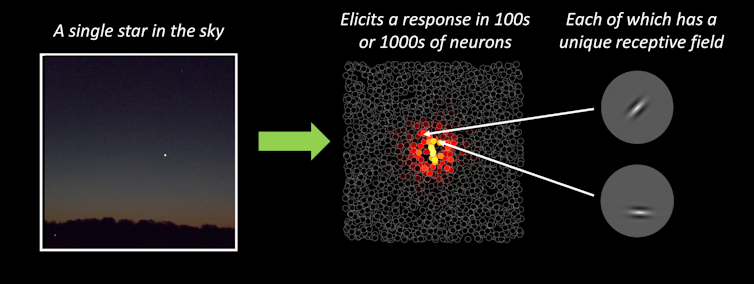
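A pinkie at arm’s length is a common rule of thumb for about one degree of visual angle. The short sketch below does the arithmetic with the standard formula angle = 2 × atan(size / (2 × distance)). The pinkie width (1 cm), arm length (57 cm), phone pixel density (460 pixels per inch) and viewing distance (30 cm) are assumed, typical values, not measurements from the study; the point is only the order of magnitude separating a single-neuron blob from a screen pixel.

```python
import math

def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Visual angle, in degrees, subtended by an object of a given size at a given distance."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))

# Assumed, typical values (not data from the study).
PINKIE_WIDTH_CM = 1.0
ARM_LENGTH_CM = 57.0
PHONE_PPI = 460           # pixels per inch on a high-resolution phone screen
PHONE_DISTANCE_CM = 30.0  # typical phone viewing distance

blob_deg = visual_angle_deg(PINKIE_WIDTH_CM, ARM_LENGTH_CM)
pixel_deg = visual_angle_deg(2.54 / PHONE_PPI, PHONE_DISTANCE_CM)  # 2.54 cm per inch

print(f"single-neuron blob: {blob_deg:.2f} degrees")  # 1.01 degrees
print(f"phone pixel:        {pixel_deg:.4f} degrees")
print(f"the blob is about {blob_deg / pixel_deg:.0f} phone pixels wide")
```

Under these assumptions, one single-neuron blob spans on the order of a hundred phone pixels, which is why counting electrodes as if they were pixels overstates the resolution.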

Consider what happens when you look at a single star in the night sky. Each point in visual space is represented by many thousands of neurons with overlapping receptive fields. A tiny spot of light, such as a star, produces a complex pattern of firing across all of these neurons.

A star in the night sky elicits spikes in hundreds of neurons, each of which has a different receptive field.

Ione Fine and Geoffrey Boynton/University of Washington

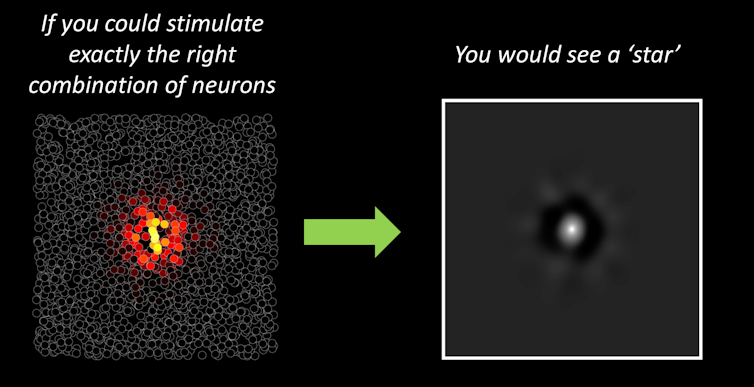
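The population response to a star can be sketched by modeling each neuron’s receptive field as a circular Gaussian, a common simplification assumed here rather than taken from the study. The population below (2,000 neurons scattered over a 10 by 10 degree patch of visual space, each with `sigma` = 0.5 degrees, so about a degree across) is made up for illustration. It shows that a single point of light drives many neurons at graded strengths instead of switching on one “pixel” neuron.

```python
import math
import random

def rf_response(star_x: float, star_y: float, cx: float, cy: float, sigma: float) -> float:
    """Response of a model neuron with a circular Gaussian receptive field centered
    at (cx, cy) to a point of light at (star_x, star_y). 1.0 means maximal firing."""
    d2 = (star_x - cx) ** 2 + (star_y - cy) ** 2
    return math.exp(-d2 / (2 * sigma ** 2))

# Hypothetical population: receptive-field centers scattered uniformly over a
# 10 x 10 degree patch of visual space.
rng = random.Random(0)  # fixed seed so the sketch is reproducible
neurons = [(rng.uniform(0, 10), rng.uniform(0, 10)) for _ in range(2000)]
SIGMA_DEG = 0.5

star = (5.0, 5.0)  # a single point of light in the middle of the patch
responses = [rf_response(*star, cx, cy, SIGMA_DEG) for cx, cy in neurons]

active = [r for r in responses if r > 0.1]  # neurons noticeably driven by the star
print(len(active), "of", len(neurons), "neurons respond")
print("strongest response:", round(max(responses), 3))
```

With these made-up numbers, roughly 70 neurons respond above the 10 percent threshold on average (the exact count depends on the random seed), each at a different strength.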

To generate the visual experience of seeing a single star with cortical stimulation, you would need to reproduce a pattern of neural responses that is similar to the pattern that would be produced by natural vision.
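One way to quantify “similar” is to compare the natural firing pattern with the pattern produced by stimulation. The sketch below uses cosine similarity, an illustrative choice of metric rather than the one used in the research, on a hypothetical one-dimensional row of 101 neurons with Gaussian receptive fields spaced 0.1 degrees apart. Driving only the single neuron centered on the star matches the natural pattern poorly, because most of the natural response is spread across that neuron’s neighbors.

```python
import math

def population_pattern(star_x: float, centers: list[float], sigma: float) -> list[float]:
    """Natural-vision firing pattern: each neuron fires according to how close the
    point of light falls to the center of its Gaussian receptive field."""
    return [math.exp(-((star_x - c) ** 2) / (2 * sigma ** 2)) for c in centers]

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """1.0 means the two firing patterns have identical shape; 0.0 means no overlap."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

# Hypothetical 1D population: receptive-field centers every 0.1 degrees
# from -5 to +5 degrees, each with sigma = 0.5 degrees.
centers = [i / 10 for i in range(-50, 51)]
target = population_pattern(0.0, centers, sigma=0.5)  # a star at 0 degrees

# Single-neuron stimulation: drive only the neuron whose field is centered on the star.
best = max(range(len(centers)), key=lambda i: target[i])
one_neuron = [1.0 if i == best else 0.0 for i in range(len(centers))]

print(round(cosine_similarity(target, one_neuron), 2))  # 0.34
print(round(cosine_similarity(target, target), 2))      # 1.0
```

A score of 1.0 would mean the stimulation reproduces the natural pattern exactly; in this toy setup, stimulating the single best-placed neuron scores only about a third of that.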