Twitter reports that fewer than 5% of accounts are fakes or spammers, commonly referred to as “bots.” Since his offer to buy Twitter was accepted, Elon Musk has repeatedly questioned these estimates, even dismissing Chief Executive Officer Parag Agrawal’s public response.

Later, Musk put the deal on hold and demanded more proof.

So why are people arguing about the percentage of bot accounts on Twitter?

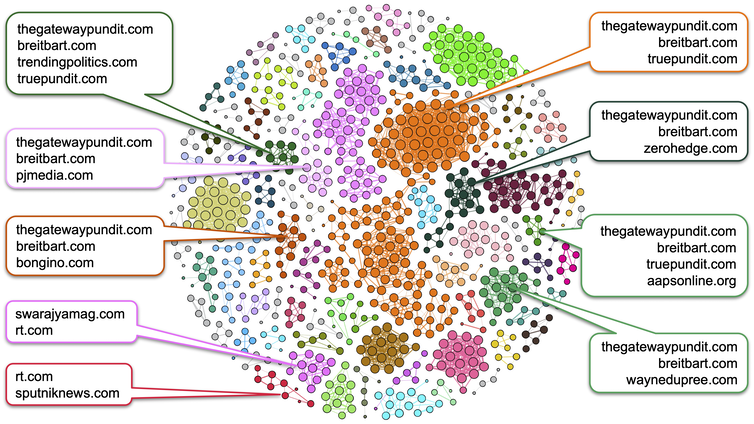

As the creators of Botometer, a widely used bot detection tool, our group at the Indiana University Observatory on Social Media has been studying inauthentic accounts and manipulation on social media for over a decade. We brought the concept of the “social bot” to the foreground and first estimated their prevalence on Twitter in 2017.

Based on our knowledge and experience, we believe that estimating the percentage of bots on Twitter has become a very difficult task, and debating the accuracy of the estimate might be missing the point. Here is why.

What, exactly, is a bot?

To measure the prevalence of problematic accounts on Twitter, a clear definition of the targets is necessary. Common terms such as “fake accounts,” “spam accounts” and “bots” are used interchangeably, but they have different meanings. Fake or false accounts are those that impersonate people. Accounts that mass-produce unsolicited promotional content are defined as spammers. Bots, on the other hand, are accounts controlled in part by software; they may post content or carry out simple interactions, like retweeting, automatically.

These types of accounts often overlap. For instance, you can create a bot that impersonates a human to post spam automatically. Such an account is simultaneously a bot, a spammer and a fake. But not every fake account is a bot or a spammer, and not every bot or spammer is a fake. Coming up with an estimate without a clear definition only yields misleading results.
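To make the point about definitions concrete, here is a minimal sketch in Python. Everything in it is hypothetical: the ten accounts, their labels and the helper names (`Account`, `share`, `prevalence_by_definition`) are made up for illustration and do not come from Twitter or from Botometer. It only shows how the same population yields different percentages depending on which overlapping category is being counted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Account:
    """A toy account with hand-assigned labels (hypothetical data)."""
    name: str
    is_fake: bool      # impersonates a person
    is_spammer: bool   # mass-produces unsolicited promotional content
    is_bot: bool       # controlled in part by software


# Hypothetical sample: four labeled accounts plus six ordinary users.
ACCOUNTS = [
    Account("impersonating_spam_bot", True, True, True),
    Account("weather_alert_bot", False, False, True),
    Account("manual_impersonator", True, False, False),
    Account("manual_spammer", False, True, False),
] + [Account(f"ordinary_user_{i}", False, False, False) for i in range(6)]

# Three possible readings of "problematic account".
DEFINITIONS = {
    "bots only": lambda a: a.is_bot,
    "fake or spam": lambda a: a.is_fake or a.is_spammer,
    "fake, spam or bot": lambda a: a.is_fake or a.is_spammer or a.is_bot,
}


def share(accounts, predicate):
    """Fraction of accounts for which the predicate holds."""
    return sum(1 for a in accounts if predicate(a)) / len(accounts)


def prevalence_by_definition(accounts):
    """Compute the prevalence under each definition in DEFINITIONS."""
    return {label: share(accounts, pred) for label, pred in DEFINITIONS.items()}


if __name__ == "__main__":
    for label, value in prevalence_by_definition(ACCOUNTS).items():
        print(f"{label}: {value:.0%}")
```

On this toy sample the three definitions give 20%, 30% and 40%, even though nothing about the accounts themselves has changed.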

Defining and distinguishing account types can also inform proper interventions. Fake and spam accounts degrade the online environment and violate platform policy. Malicious bots are used to spread misinformation, inflate popularity, exacerbate conflict through negative and inflammatory content, manipulate opinions, influence elections, conduct financial fraud and disrupt communication. However, some bots can be harmless or even useful, for example by helping disseminate news, delivering disaster alerts and conducting research.

Simply banning all bots is not in the best interest of social media users.

For simplicity, researchers use the term “inauthentic accounts” to refer to the collection of fake accounts, spammers and malicious bots. This is also the definition Twitter appears to be using. However, it is unclear what Musk has in mind.

Hard to count

Even when a consensus is reached on a definition, there are still technical challenges to estimating prevalence.