If more people told you something was true, you would think you'd be more inclined to believe it.

Not according to a 2019 Yale University study, which found that people believe a single source of information repeated across many channels (a ‘false consensus’) just as readily as multiple people telling them something based on many independent original sources (a ‘true consensus’).

The finding shows how misinformation can be bolstered, and it has ramifications for important decisions we make based on advice from sources such as governments and the media on matters like vaccination, wearing masks during the pandemic, or even who we vote for in an election.

The 2019 ‘illusion of consensus’ finding has fascinated Saoirse Connor Desai, a postdoctoral research associate in UNSW Science’s School of Psychology, who has tested the finding and found a way to help people avoid being tricked by so-called ‘fake news’ from a single source.

Her team’s study has been published in Cognition.

“We found that illusion can be reduced when we give people information about how the original sources used evidence to arrive at their conclusions,” Dr. Connor Desai says.

She says the finding is particularly relevant for science communication best practice—e.g. how policy makers or media present people with expert scientific evidence or research.

For instance, over 80 percent of climate change denial blogs repeat claims from a single person who claims to be a ‘polar bear expert’.

“You could have a situation where a misleading health proposal is repeated through multiple channels, which could influence people to rely on that information more than they should do, because they think there is evidence for that, or they think there is a consensus,” Dr. Connor Desai says.

“But our finding shows that if you can explain to people where your information comes from, and how the original sources reached their conclusions, that strengthens their ability to identify a ‘true consensus.’”

Dr. Connor Desai says the Yale study finding was surprising to her, “because it seemed to be an indictment of human ability to distinguish between true consensus and false consensus.”

“The original study showed that people are routinely bad at this. There were lots of situations where they are never able to tell the difference between true and false consensus,” she says.

“It’s problematic because if people hear a single person’s false or misleading statements repeated through different channels, they might feel the statement is more valid than it is.”

Dr. Connor Desai says an example of this is multiple independent experts agreeing that ivermectin should not be used to treat COVID-19 (true consensus), versus a single group or individual saying that people should use it as an antiviral drug (false consensus).

How the study was conducted

The aim of the UNSW study was to understand why people believe false information when it’s repeated.

“Our main goal was to establish whether one reason that people are equally convinced by true and false consensus is that they assume that different sources share data or methodologies,” Dr. Connor Desai says.

“Do they understand there is potentially more evidence when you have multiple experts saying the same thing?”

The UNSW researchers conducted several experiments.

The first experiment replicated the 2019 Yale study, in which participants were given a variety of articles about a fictional tax policy, each taking a positive, negative, or neutral stance, and were then asked to what extent they agreed the proposal would improve the economy.

The experiment reproduced the “illusion of consensus”, in which people are as convinced by one piece of evidence as they are by many, but it added a new condition in which the researchers told people who saw a “true consensus” that the sources had used different data and methods to arrive at their conclusions.

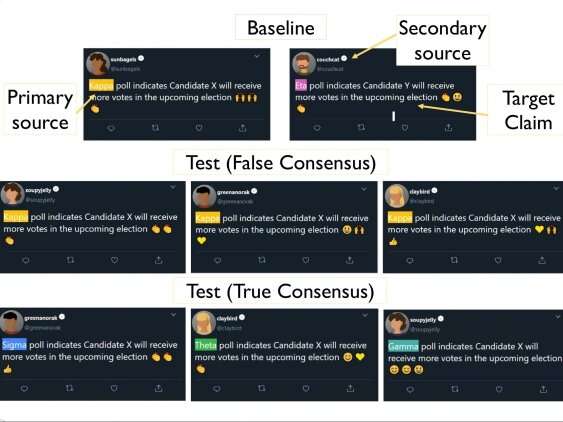

An example of the made-up Twitter posts used in the study.

The result was a reduction in the illusion of consensus.

“People were more convinced by true consensus than false consensus.”

In another experiment, 200 participants were given information about an election in a fictional foreign democratic country.

They were shown fictional Twitter posts from news outlets that said which candidate would get more votes in the election: some cited the same or different pollsters to predict that one candidate would win, while another tweet said a different contender would win.

In the true consensus Twitter posts, however, the researchers gave participants a scenario in which it was clear that different primary sources worked independently and used different data to arrive at their conclusions.

“We expected that many people would be more familiar with such polls and would realize that looking at multiple different polls would be a better way of predicting the election result than just seeing a single poll multiple times,” Dr. Connor Desai says.

After reading the tweets, participants rated which candidate would win based on the polling predictions.

“It seemed that people were more convinced by a true consensus than a false consensus when they understood the pollsters had gathered evidence independently of one another,” says Brett Hayes, Professor of Cognitive Psychology in the School of Psychology.

“Our results suggest that people do see claims endorsed by multiple sources as stronger when they believe these sources really are independent of one another.”

The researchers later replicated and extended the tweet study with 365 more participants.

“This time we had a condition where the tweets came from individual people who showed their endorsement of the polls using emojis,” Dr. Connor Desai says.

“Regardless of whether the tweets came from news outlets, or individual tweeters, people were more convinced by true than false consensus when the relationship between sources was unambiguous.”

False consensus not completely discounted

But the researchers also found the participants didn’t completely discount false consensus.

“There are at least two possible explanations for this effect,” Dr. Connor Desai says.

“The first is that such repetition simply increases the familiarity of the claim—enhancing its memory representation, and this is sufficient to increase confidence.

“The second is that people may make inferences about why a claim is repeated because the source believed it was the most reputable or provided the strongest evidence.

“For instance, if different news channels all cite the same expert you might think that they’re citing the same person because they are the most qualified to talk about whatever it is they’re talking about.”

Dr. Connor Desai next plans to look at why some communication strategies are more effective than others, and whether repeating information is always effective.

“Is there a point where there’s too much consensus, and people become suspicious?” she says.

“Can you correct a ‘false consensus’ by pointing out that it’s often better to get information from multiple independent sources? These are the kinds of strategies we wish to look into.”

More information:

Saoirse Connor Desai et al., Getting to the source of the illusion of consensus, Cognition (2022). DOI: 10.1016/j.cognition.2022.105023

Sami R. Yousif et al., The Illusion of Consensus: A Failure to Distinguish Between True and False Consensus, Psychological Science (2019). DOI: 10.1177/0956797619856844

Provided by

University of New South Wales

Citation:

How can we get better at discerning misinformation from reliable expert consensus? (2022, February 15)