Western researchers have developed a novel mathematical technique for understanding exactly how neural networks make decisions, a process that is widely recognized but still poorly understood in machine learning.

Many of today’s technologies, from digital assistants like Siri and ChatGPT to medical imaging and self-driving cars, are powered by machine learning. However, the neural networks behind these systems (computer models inspired by the human brain) have been difficult to interpret, which is why researchers sometimes call them “black boxes.”

“We create neural networks that can perform specific tasks, while also allowing us to solve the equations that govern the networks’ activity,” said Lyle Muller, mathematics professor and director of Western’s Fields Lab for Network Science, part of the newly created Fields-Western Collaboration Centre. “This mathematical solution lets us ‘open the black box’ to understand precisely how the network does what it does.”

The findings were published in the journal PNAS, in collaboration with international researchers including University of Amsterdam’s machine learning research chair Max Welling.

‘Seeing things’ by segmenting images into parts

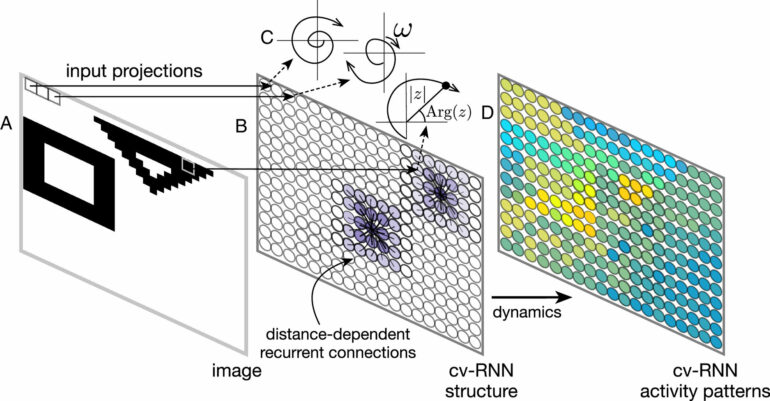

The Western team, which included Muller, post-doctoral scholars Luisa Liboni and Roberto Budzinski, and graduate student Alex Busch, first demonstrated the technique on image segmentation. This is a fundamental task in computer vision in which a machine learning system divides an image into distinct parts, such as separating objects from the background.

Starting with simple geometric shapes like squares and triangles, they created a neural network that could segment these basic images.

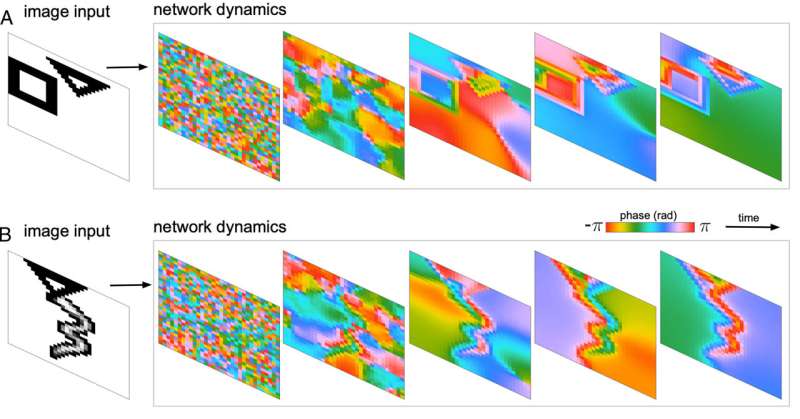

Spatiotemporal dynamics produced by the cv-RNN (complex-valued recurrent neural network). (A) An image drawn from the 2Shapes dataset (see Materials and Methods, Image Inputs and Datasets) is input to the oscillator network by modulating the nodes’ intrinsic frequencies 𝜔. Samples of the phase dynamics in the recurrent layer during the transient period show that the nodes imprint the visual space by generating three distinct spatiotemporal patterns: one for the nodes corresponding to the background, one for the nodes corresponding to the square, and one for the nodes corresponding to the triangle in the input image. (B) An image drawn from the MNIST&Shapes dataset is input into the dynamical system. Three distinct spatiotemporal patterns arise: one for the nodes corresponding to the background, one for the nodes corresponding to the triangle, and one for the nodes corresponding to the handwritten digit three. © Proceedings of the National Academy of Sciences (2025). DOI: 10.1073/pnas.2321319121

Muller and his collaborators next used a mathematical approach, which they previously developed to study other networks, to investigate how the new network performed this segmentation task when analyzing these simple images.
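The article does not give the network’s equations, but the idea of an “exactly solvable” network can be illustrated with a minimal sketch. It assumes a linear complex-valued recurrence, x_{t+1} = M x_t, in which each pixel drives one node and the pixel’s intensity shifts that node’s intrinsic frequency ω, loosely following the figure caption. Because the update is linear, the state at any step has a closed form, x_t = V diag(λ^t) V⁻¹ x_0, obtained from the eigendecomposition of M, so it can be computed directly instead of by running the network step by step. The nearest-neighbour coupling, the frequency mapping, and the names `build_network`, `simulate` and `closed_form` are hypothetical illustrations, not the authors’ model.

```python
import numpy as np


def build_network(image, coupling=0.1, base_freq=0.5):
    """Hypothetical linear complex-valued recurrent network (illustration only).

    One node per pixel; each node's intrinsic frequency is offset by its pixel
    intensity. This mapping is an assumption, not the paper's exact rule.
    """
    h, w = image.shape
    n = h * w
    omega = base_freq + image.ravel()  # intrinsic frequency of each node
    # Self-connection plus nearest-neighbour coupling on the pixel grid.
    A = np.eye(n, dtype=complex)
    for r in range(h):
        for c in range(w):
            i = r * w + c
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                rr, cc = r + dr, c + dc
                if 0 <= rr < h and 0 <= cc < w:
                    A[i, rr * w + cc] = coupling
    # Row-normalise so each node takes a weighted average of its neighbourhood.
    A /= A.sum(axis=1, keepdims=True)
    # Update: mix with neighbours, then rotate each node's phase by its own frequency.
    return np.diag(np.exp(1j * omega)) @ A


def simulate(M, x0, steps):
    """Run the network by iterating x_{t+1} = M x_t."""
    x = x0.copy()
    for _ in range(steps):
        x = M @ x
    return x


def closed_form(M, x0, steps):
    """Exact state at time `steps`: x_t = V diag(lambda**t) V^{-1} x_0."""
    lam, V = np.linalg.eig(M)
    return V @ (lam ** steps * np.linalg.solve(V, x0))
```

For example, with `img = np.random.default_rng(0).random((4, 4))` and `x0 = np.ones(16, dtype=complex)`, `closed_form(build_network(img), x0, 25)` should agree with `simulate(build_network(img), x0, 25)` up to floating-point error. In the published work it is patterns in the node phases (`np.angle(x)` here) that separate image regions; this toy sketch only demonstrates the closed-form solution, not segmentation itself.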

The mathematical approach allowed the team to understand precisely how each step of the computation occurred. Somewhat surprisingly, the team then found that the network could also segment a handful of natural images, such as photographs of a polar bear walking through the snow or a bird in the wild.

“By simplifying the process to gain mathematical insight, we were able to construct a network that was more flexible than previous approaches and also performed well on new inputs it had never seen,” said Muller, a member of the Western Institute for Neuroscience.

“What’s particularly exciting is that this is just the beginning, as we believe this mathematical understanding can be useful far beyond this first example.”


The implications of the work extend beyond image processing.

In a related study published in Communications Physics in 2024, Muller and his team developed a similar “explainable” network that could perform various tasks, from basic logic operations to secure message-passing and memory functions.

In another collaboration, with physiology and pharmacology professor Wataru Inoue and his research team at the Schulich School of Medicine & Dentistry, they successfully connected their network to a living brain cell, creating a hybrid system that bridges artificial and biological neural networks.

“This kind of fundamental understanding is crucial as we continue to develop more sophisticated AI systems that we can trust and rely on,” said Budzinski, a post-doctoral scholar in the Fields Lab for Network Science.

More information:

Luisa H. B. Liboni et al., Image segmentation with traveling waves in an exactly solvable recurrent neural network, Proceedings of the National Academy of Sciences (2025). DOI: 10.1073/pnas.2321319121

Roberto C. Budzinski et al., An exact mathematical description of computation with transient spatiotemporal dynamics in a complex-valued neural network, Communications Physics (2024). DOI: 10.1038/s42005-024-01728-0

Provided by University of Western Ontario

Citation:

Mathematical technique ‘opens the black box’ of AI decision-making (2025, January 13)