At the atomic and subatomic scales, objects behave in ways that challenge the classical worldview based on day-to-day interactions with macroscopic reality. A familiar example is the discovery that electrons can behave as both particles and waves, depending on the experimental context in which they are observed. To explain this and other phenomena that appear contrary to the laws of physics inherited from previous centuries, scientists such as Louis de Broglie (1892-1987), Niels Bohr (1885-1962), Erwin Schrödinger (1887-1961) and David Bohm (1917-1992), among others, have proposed models that are self-consistent but have contradictory interpretations.

However, the great debates that accompanied the formulation of quantum theory, involving especially Einstein and Bohr, did not lead to conclusive results. Most of the next generation of physicists opted to use equations derived from conflicting theoretical frameworks without worrying much about the underlying philosophical concepts. The equations “worked,” and that was apparently sufficient. Various technological artifacts that are now commonplace were based on practical applications of quantum theory.

It is human nature to question everything, and a key question that arose later was why the strange, even counter-intuitive, behavior observed in quantum experiments did not manifest itself in the macroscopic world. To answer this question, or circumvent it, Polish physicist Wojciech Zurek has developed the concept of “quantum Darwinism.”

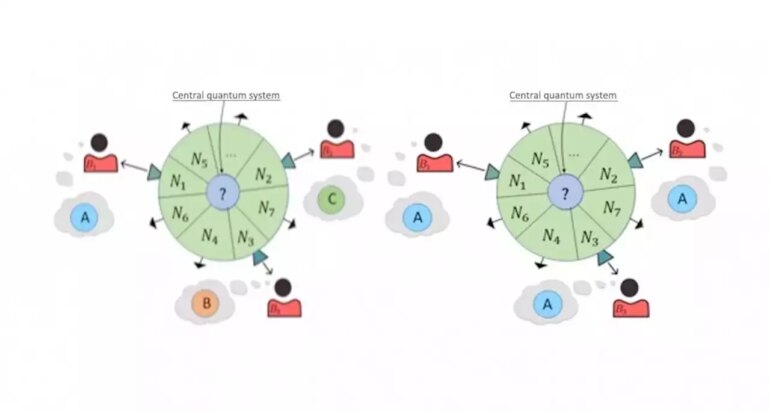

Simply put, the hypothesis is that interaction between a physical system and its environment selects for certain kinds of behavior and rules out others, and that the kinds of behavior conserved by this “natural selection” are precisely those that correspond to the classical description.

Thus, for example, when someone reads this text, their eyes receive photons that interact with their computer or smartphone screen. Another person, from a different viewpoint, will receive different photons, but although the particles in the screen behave in their own strange ways, potentially producing images completely different from each other, interaction with the environment selects for only one kind of behavior and excludes the rest, so that the two readings end up accessing the same text.
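The selection-and-copying idea can be illustrated with a toy calculation. The sketch below is not the construction used in the paper; it assumes the simplest idealized interaction, in which a single "system" bit in superposition is copied onto several "environment" bits by controlled-NOT operations. The function names (`fan_out`, `joint_distribution`, `mutual_information`) are hypothetical and chosen only for this illustration.

```python
import math


def fan_out(alpha, beta, n_env):
    """Return the state after copying the system bit onto n_env environment bits.

    The state is a dict mapping bit tuples (system, env_1, ..., env_n) to
    amplitudes. The system starts in alpha|0> + beta|1>, the environment in
    all zeros, and one controlled-NOT is applied per environment bit.
    """
    state = {(0,) + (0,) * n_env: alpha, (1,) + (0,) * n_env: beta}
    for k in range(1, n_env + 1):
        new_state = {}
        for bits, amp in state.items():
            flipped = list(bits)
            if bits[0] == 1:  # control is the system bit
                flipped[k] ^= 1  # flip the k-th environment bit
            key = tuple(flipped)
            new_state[key] = new_state.get(key, 0) + amp
        state = new_state
    return state


def joint_distribution(state, i, j):
    """Outcome probabilities when bits i and j are read in the 0/1 basis."""
    dist = {}
    for bits, amp in state.items():
        key = (bits[i], bits[j])
        dist[key] = dist.get(key, 0.0) + abs(amp) ** 2
    return dist


def mutual_information(dist):
    """Classical mutual information (in bits) of a two-variable distribution."""
    px, py = {}, {}
    for (x, y), p in dist.items():
        px[x] = px.get(x, 0.0) + p
        py[y] = py.get(y, 0.0) + p
    return sum(
        p * math.log2(p / (px[x] * py[y])) for (x, y), p in dist.items() if p > 0
    )


if __name__ == "__main__":
    state = fan_out(math.sqrt(0.5), math.sqrt(0.5), n_env=3)
    # Information about the system available in one environment bit.
    print(mutual_information(joint_distribution(state, 0, 2)))
    # Two observers reading different environment bits.
    print(joint_distribution(state, 1, 3))
```

In this toy setting, every environment bit carries the full record of the system's 0/1 value, and two observers who read different environment bits never disagree. That is the sense in which redundant copies in the environment make one kind of behavior objective.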

This line of theoretical investigation has been taken forward, with an even greater degree of abstraction and generalization, in a paper by Brazilian physicist Roberto Baldijão published in Quantum, an open-access peer-reviewed journal for quantum science and related fields.

The paper reports findings that are part of Baldijão’s Ph.D. research, supervised by Marcelo Terra Cunha, a professor in the Institute of Mathematics, Statistics and Scientific Computing at the University of Campinas (IMECC-UNICAMP) in Brazil.

The co-authors of the paper include Markus Müller, who supervised Baldijão’s research internship at the Institute for Quantum Optics and Quantum Information (IQOQI) of the Austrian Academy of Sciences in Vienna.

“Quantum Darwinism was proposed as a mechanism to obtain the classical objectivity to which we’re accustomed from inherently quantum systems. In our research, we investigated which physical principles might be behind the existence of such a mechanism,” Baldijão said.

In conducting his investigation, he adopted a formalism known as generalized probabilistic theories (GPTs). “This formalism enables us to produce mathematical descriptions of different physical theories, and hence to compare them. It also enables us to understand which theories obey certain physical principles. Quantum theory and classical theory are two examples of GPTs, but many others can also be described,” he said.

According to Baldijão, working with GPTs is convenient because it enables valid results to be obtained even if quantum theory has to be abandoned at some point. Furthermore, the framework provides a better understanding of the quantum formalism by comparing it with what it is not. For example, it can be used to derive quantum theory from simpler physical principles without assuming the theory from scratch. “Based on the formalism of GPTs, we can find out which principles permit the existence of ‘Darwinism’ without needing to resort to quantum theory,” he said.

The paradoxical result at which Baldijão arrived in his theoretical investigation was that classical theory only emerges via “natural selection” from theories with certain non-classical features if they involve “entanglement.”

“Surprisingly, the manifestation of classical behaviors via Darwinism depends on such a notably non-classical property as entanglement,” he said.

Entanglement, which is a key concept in quantum theory, occurs when particles are created or interact in such a way that the quantum state of each particle cannot be described independently of the others but depends on the entire set.

The most famous example of entanglement is the thought experiment known as EPR (Einstein-Podolsky-Rosen). Explaining it takes a few paragraphs. In a simplified version of the experiment, Bohm imagined a situation in which two electrons interact and are then separated by an arbitrarily large distance, such as the distance between Earth and the Moon. If the spin of one electron is measured, it can be spin up or spin down, with both having the same probability. Electron spins will always end up pointing either up or down after a measurement, never at some angle in between. However, because of the way they interact, the electrons must be paired, meaning that their spins are opposite, whatever the direction of measurement. Which of the two will be spin up or spin down is unknown, but the results will always be opposite owing to their entanglement.
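The behavior of the paired electrons can be checked with a short calculation. The sketch below assumes the standard textbook description of Bohm's pair (the "singlet" state) and restricts measurement directions to a single plane for simplicity. The names `spin_basis`, `joint_probability` and `correlation` are hypothetical and introduced only for this illustration.

```python
import math

# Singlet state (|01> - |10>) / sqrt(2); SINGLET[i][j] is the amplitude
# for the first electron in state i and the second in state j.
SINGLET = [[0.0, 1 / math.sqrt(2)], [-1 / math.sqrt(2), 0.0]]


def spin_basis(theta):
    """Spin-up (+1) and spin-down (-1) states along angle theta in the x-z plane."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return {+1: (c, s), -1: (-s, c)}


def joint_probability(a, b, theta_a, theta_b):
    """Probability of outcomes a and b (each +1 or -1) for the two electrons."""
    va = spin_basis(theta_a)[a]
    vb = spin_basis(theta_b)[b]
    amp = sum(va[i] * vb[j] * SINGLET[i][j] for i in range(2) for j in range(2))
    return amp ** 2


def correlation(theta_a, theta_b):
    """Average of the product of the two outcomes."""
    return sum(
        a * b * joint_probability(a, b, theta_a, theta_b)
        for a in (+1, -1)
        for b in (+1, -1)
    )


if __name__ == "__main__":
    theta = 0.7  # any shared measurement direction, in radians
    # Chance that both electrons give the same result along the same direction.
    print(joint_probability(+1, +1, theta, theta) + joint_probability(-1, -1, theta, theta))
    # Chance that the first electron is spin up, whatever the second one does.
    print(joint_probability(+1, +1, theta, theta) + joint_probability(+1, -1, theta, theta))
```

Under these assumptions, the probability of equal results along a shared direction is zero for every choice of `theta`, while each electron taken alone is spin up half the time. The individual outcomes are random, but the pair is perfectly anti-correlated.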

The experiment was supposed to show that the formalism of quantum theory was incomplete because entanglement presupposed that information traveled between the two particles at infinite speed, which was impossible according to relativity theory. How could the distant particles “know” which way to spin in order to produce opposite results? The idea was that hidden variables were acting locally behind the quantum scene and that the classical worldview would be vindicated if these variables were considered by a more comprehensive theory.

Albert Einstein died in 1955. Almost a decade later, his argument was more or less refuted by John Bell (1928-1990), who constructed a theorem to show that the hypothesis that a particle has definite values independently of the observation process, combined with the impossibility of instantaneous communication at a distance, is incompatible with quantum theory. In other words, the non-locality that characterizes entanglement is not a defect but a key feature of quantum theory.
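Bell's argument can be made concrete with the CHSH inequality, a later and widely used form of his theorem. The sketch below is an illustration under stated assumptions, not part of the study. It assumes the standard quantum prediction for the paired electrons, a correlation of minus the cosine of the angle between the two measurement directions. It compares this with every strategy in which each particle carries predetermined answers. The function names are hypothetical.

```python
import itertools
import math


def singlet_correlation(theta_a, theta_b):
    """Quantum prediction for the average product of the two spin outcomes."""
    return -math.cos(theta_a - theta_b)


def chsh(correlation, a, a_alt, b, b_alt):
    """CHSH combination of four correlations for two settings per side."""
    return (
        correlation(a, b)
        - correlation(a, b_alt)
        + correlation(a_alt, b)
        + correlation(a_alt, b_alt)
    )


def local_bound():
    """Largest |CHSH| when every outcome is fixed in advance (+1 or -1)."""
    best = 0
    for a0, a1, b0, b1 in itertools.product((+1, -1), repeat=4):
        s = a0 * b0 - a0 * b1 + a1 * b0 + a1 * b1
        best = max(best, abs(s))
    return best


# Measurement angles (radians) that maximize the quantum value.
QUANTUM_VALUE = abs(
    chsh(singlet_correlation, 0.0, math.pi / 2, math.pi / 4, 3 * math.pi / 4)
)

if __name__ == "__main__":
    print(local_bound())
    print(QUANTUM_VALUE)
```

Predetermined local answers can never push the CHSH combination beyond 2, whereas the quantum prediction reaches 2√2, roughly 2.83. Averaging over many predetermined strategies cannot raise the bound, which is why no local hidden-variable account reproduces the quantum result.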

Whatever its theoretical interpretation, the empirical existence of entanglement has been demonstrated in several experiments conducted since then. Preserving entanglement is now the main challenge in the development of quantum computing since quantum systems tend to lose coherence quickly if they interact with the environment. This brings us back to quantum Darwinism.

“In our study, we showed that if a GPT displays decoherence, this is because there’s a transformation in the theory capable of implementing the idealized process of Darwinism we considered,” Baldijão said. “Similarly, if a theory has sufficient structure to allow for reversible computation—computation that can be undone—then there is also a transformation capable of implementing Darwinism. This is most interesting, considering the computational applications of GPTs.”

As a complementary result of the study, the authors offer an example of “non-quantum Darwinism” in the shape of extensions to Spekkens’ toy model, a theory proposed in 2004 by Canadian physicist Robert Spekkens, currently a senior researcher at the Perimeter Institute for Theoretical Physics in Waterloo, Ontario. This model is important to the in-depth investigation of the fundamentals of quantum physics because it reproduces many forms of quantum behavior on the basis of classical concepts.

“The model doesn’t exhibit any kind of non-locality and is incapable of violating any Bell inequalities,” Baldijão said. “We demonstrate that it may exhibit Darwinism, and this example also shows that the conditions we found to guarantee the presence of Darwinism—decoherence or reversible computation—are sufficient but not necessary for this process to occur in GPTs.”

As principal investigator for the project funded by FAPESP, Cunha had this to say: “Quantum theory can be considered a generalization of probability theory, but it’s far from being the only possible one. The big challenges in our research field include understanding the properties that distinguish classical theory from quantum theory in this ocean of possible theories. Baldijão’s Ph.D. thesis set out to explain how quantum Darwinism could eliminate one of the most clearly non-classical features of quantum theory: contextuality, which encompasses the concept of entanglement.

“During his research internship with Markus Müller’s group in Vienna, Baldijão worked on something even more general: the process of Darwinism in general probabilistic theories. His findings help us understand better the dynamics of certain types of theory, showing that because Darwinism preserves only the fittest and hence creates a classical world, it isn’t an exclusively quantum process.”

More information:

Roberto D. Baldijão et al., Quantum Darwinism and the spreading of classical information in non-classical theories, Quantum (2022). DOI: 10.22331/q-2022-01-31-636

Jonathan Barrett, Information processing in generalized probabilistic theories, Physical Review A (2007). DOI: 10.1103/PhysRevA.75.032304

Citation:

Study points to physical principles that underlie quantum Darwinism (2022, April 27)