With more than 1,000 nerve endings, human skin is the brain’s largest sensory connection to the outside world, providing a wealth of feedback through touch, temperature and pressure. While these complex features make skin a vital organ, they also make it a challenge to replicate.

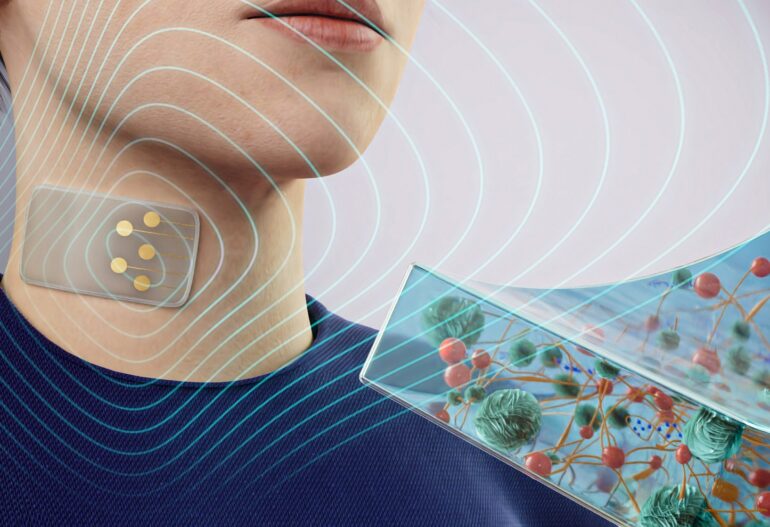

Using nanoengineered hydrogels with tunable electronic and thermal biosensing capabilities, researchers at Texas A&M University have developed a 3D-printed electronic skin (E-skin) that can flex, stretch and sense like human skin.

“The ability to replicate the sense of touch and integrate it into various technologies opens up new possibilities for human-machine interaction and advanced sensory experiences,” said Dr. Akhilesh Gaharwar, professor and director of research for the Department of Biomedical Engineering. “It can potentially revolutionize industries and improve the quality of life for individuals with disabilities.”

Potential future uses for the E-skin are broad. They include wearable health devices that continuously monitor motion and vital signs such as temperature, heart rate and blood pressure, giving users feedback that could help them improve their motor skills and coordination.

“The inspiration behind developing E-skin is rooted in the desire to create more advanced and versatile interfaces between technology, the human body and the environment,” Gaharwar said. “The most exciting aspect of this research is its potential applications in robotics, prosthetics, wearable technology, sports and fitness, security systems and entertainment devices.”

The E-skin technology, detailed in a study published in Advanced Functional Materials, was developed in Gaharwar’s lab. The paper’s lead authors are Dr. Kaivalya Deo, a former student of Gaharwar who is now a scientist at Axent Biosciences, and Dr. Shounak Roy, a former Fulbright-Nehru doctoral fellow in Gaharwar’s lab.

Creating E-skin requires durable materials that can simultaneously mimic the flexibility of human skin and provide bioelectrical sensing, along with fabrication techniques suitable for wearable or implantable devices. Meeting all of these requirements at once is a major challenge.

“In the past, the stiffness of these systems was too high for our body tissues, preventing signal transduction and creating mechanical mismatch at the biotic-abiotic interface,” Deo said. “We introduced a ‘triple-crosslinking’ strategy to the hydrogel-based system, which allowed us to address one of the key limitations in the field of flexible bioelectronics.”

The nanoengineered hydrogels also ease some of the challenges of 3D printing E-skin. Their viscosity decreases under shear stress (a property known as shear thinning), which makes them easier to handle and shape during fabrication. The team said this property facilitates the construction of complex 2D and 3D electronic structures, an essential aspect of replicating the multifaceted nature of human skin.

The researchers also used molybdenum disulfide nano-assemblies containing ‘atomic defects’, imperfections in the material’s atomic structure that allow for high electrical conductivity, along with polydopamine nanoparticles that help the E-skin adhere to wet tissue.

“These specially designed molybdenum disulfide nanoparticles acted as crosslinkers to form the hydrogel and imparted electrical and thermal conductivity to the E-skin; we are the first to report using this as the key component,” Roy said. “The material’s ability for adhesion to wet tissues is particularly crucial for potential health care applications where the E-skin needs to conform and adhere to dynamic, moist biological surfaces.”

Other collaborators include researchers from Dr. Limei Tian’s group in the biomedical engineering department at Texas A&M and Dr. Amit Jaiswal at the Indian Institute of Technology, Mandi.

More information:

Shounak Roy et al, 3D Printed Electronic Skin for Strain, Pressure and Temperature Sensing, Advanced Functional Materials (2024). DOI: 10.1002/adfm.202313575

Provided by

Texas A&M University College of Engineering

Citation:

3D printed electronic skin provides promise for human-machine interaction (2024, January 26)