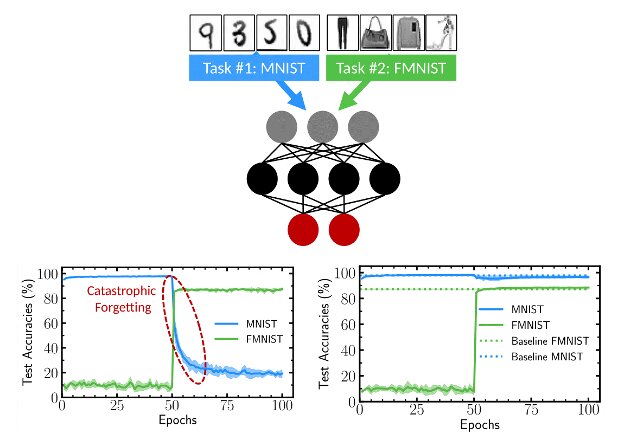

Deep neural networks have achieved highly promising results on several tasks, including image and text classification. Nonetheless, many of these computational methods are prone to what is known as catastrophic forgetting, which essentially means that when they are trained on a new task, they tend to rapidly forget how to complete tasks they were trained to complete in the past.

Researchers at Université Paris-Saclay-CNRS recently introduced a new technique to alleviate forgetting in binarized neural networks. This technique, presented in a paper published in Nature Communications, is inspired by the idea of synaptic metaplasticity, the process through which synapses (junctions between two nerve cells) adapt and change over time in response to experiences.

“My group had been working on binarized neural networks for a few years,” Damien Querlioz, one of the researchers who carried out the study, told TechXplore. “These are a highly simplified form of deep neural networks, the flagship method of modern artificial intelligence, which can perform complex tasks with reduced memory requirements and energy consumption. In parallel, Axel, then a first-year Ph.D. student in our group, started to work on the synaptic metaplasticity models introduced in 2005 by Stefano Fusi.”

Neuroscience studies suggest that the ability of nerve cells to adapt to experiences is what ultimately allows the human brain to avoid ‘catastrophic forgetting’ and remember how to complete a given task even after tackling a new one. Most artificial intelligence (AI) agents, however, forget previously learned tasks very rapidly after learning new ones.

“Almost accidentally, we realized that binarized neural networks and synaptic metaplasticity, two topics that we were studying with very different motivations, were in fact connected,” Querlioz said. “In both binarized neural networks and the Fusi model of metaplasticity, the strength of the synapses can only take two values, but the training process involves a ‘hidden’ parameter. This is how we got the idea that binarized neural networks could provide a way to alleviate the issue of catastrophic forgetting in AI.”

To replicate the process of synaptic metaplasticity in binarized neural networks, Querlioz and his colleagues introduced a ‘consolidation mechanism’, where the more a synapse is updated in the same direction (i.e., with its hidden state value going up or going down), the harder it should be for it to switch back in the opposite direction. This mechanism, inspired by the Fusi model of metaplasticity, differs only slightly from the way in which binarized neural networks are usually trained, yet it has a significant impact on the network’s catastrophic forgetting.
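The consolidation idea can be illustrated with a minimal sketch for a single synapse. This is not the authors' implementation: the function names, the strength parameter `m`, and the choice of `1 - tanh²` as the attenuation function are assumptions made for illustration. The only properties taken from the article are that the binary weight is determined by a hidden value and that updates pushing the hidden value back toward the opposite sign become harder as the synapse consolidates.

```python
import math


def binary_weight(hidden):
    """The synapse strength takes only two values: the sign of the hidden state."""
    return 1 if hidden >= 0 else -1


def metaplastic_update(hidden, step, m=1.0):
    """Return the new hidden state of one binarized synapse.

    hidden: real-valued hidden state kept during training.
    step:   proposed change to the hidden state (e.g. -lr * gradient).
    m:      hypothetical consolidation strength (an assumption, not from the article).
    """
    # A step whose sign opposes the hidden state pushes it toward zero,
    # that is, toward flipping the binary weight.
    if hidden * step < 0:
        # Attenuate the step: the larger |hidden|, the smaller the effective change.
        step *= 1.0 - math.tanh(m * hidden) ** 2
    return hidden + step


# Five updates in the same direction consolidate the synapse.
consolidated = 0.0
for _ in range(5):
    consolidated = metaplastic_update(consolidated, 0.5)

# The same opposing step moves a consolidated synapse far less than a fresh one.
print(metaplastic_update(consolidated, -0.5) - consolidated)
print(metaplastic_update(0.5, -0.5) - 0.5)
```

In this sketch the rule depends only on the synapse's own hidden value, which mirrors the article's point that consolidation relies on local internal state rather than on a change to the training objective between tasks.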

“The most notable findings of our study are, firstly, that the new consolidation mechanism we introduced effectively reduces forgetting and it does so based on the local internal state of the synapse only, without the need to change the metric optimized by the network between tasks, in contrast with other approaches of the literature,” Axel Laborieux, a first-year Ph.D. student who carried out the study, told TechXplore. “This feature is especially appealing for the design of low-power hardware since one must avoid the overhead of data movement and computation.”

The findings gathered by this team of researchers could have important implications for the development of AI agents and deep neural networks. The consolidation mechanism introduced in the recent paper could help to mitigate catastrophic forgetting in binarized neural networks, enabling the development of AI agents that can perform well on a variety of tasks. Overall, the study by Querlioz, Laborieux and their colleagues Maxence Ernoult and Tifenn Hirtzlin also highlights the value of drawing inspiration from neuroscience theory when trying to develop better-performing AI agents.

“Our group specializes in developing low-power consumption AI hardware using nanotechnologies,” Querlioz said. “We believe that the metaplastic binarized synapses that we proposed in this work are very adapted for nanotechnology-based implementations, and we have already started to design and fabricate new devices based on this idea.”

More information: Synaptic metaplasticity in binarized neural networks. Nature Communications (2021). DOI: 10.1038/s41467-021-22768-y. www.nature.com/articles/s41467-021-22768-y

2021 Science X Network

Citation: A bio-inspired technique to mitigate catastrophic forgetting in binarized neural networks (2021, June 10), retrieved 10 June 2021 from https://techxplore.com/news/2021-06-bio-inspired-technique-mitigate-catastrophic-binarized.html
