People and institutions are grappling with the consequences of AI-written text. Teachers want to know whether students’ work reflects their own understanding; consumers want to know whether an advertisement was written by a human or a machine.

Writing rules to govern the use of AI-generated content is relatively easy. Enforcing them depends on something much harder: reliably detecting whether a piece of text was generated by artificial intelligence.

Some studies have investigated whether humans can detect AI-generated text. For example, people who themselves use AI writing tools heavily have been shown to accurately detect AI-written text. A panel of human evaluators can even outperform automated tools in a controlled setting. However, such expertise is not widespread, and individual judgment can be inconsistent. Institutions that need consistency at a large scale therefore turn to automated AI text detectors.

The problem of AI text detection

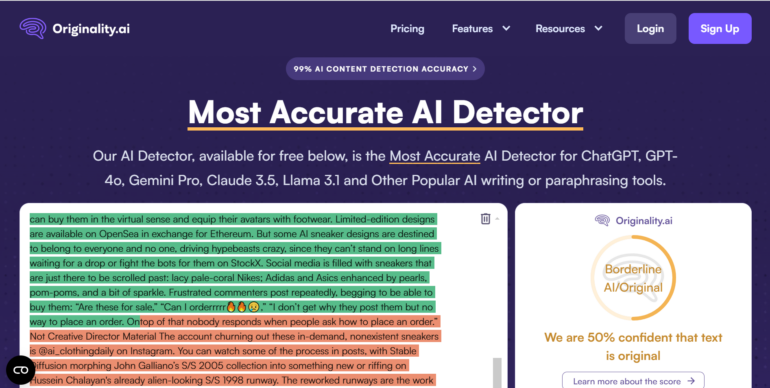

The basic workflow behind AI text detection is easy to describe. Start with a piece of text whose origin you want to determine. Then apply a detection tool, often an AI system itself, that analyzes the text and produces a score, usually expressed as a probability, indicating how likely the text is to have been AI-generated. Use the score to inform downstream decisions, such as whether to impose a penalty for violating a rule.
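To make the three steps concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not any real tool's interface: the `Detector` type, the `triage` function and both threshold values are hypothetical names and numbers chosen for this example, and the detector used at the end is a stand-in that always returns the same score.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical detector type: any function that maps a text to a probability
# in [0, 1] that the text was AI-generated.
Detector = Callable[[str], float]


@dataclass
class Decision:
    score: float  # the detector's probability estimate
    action: str   # "flag", "human_review" or "no_action"


def triage(text: str, detector: Detector,
           review_at: float = 0.5, flag_at: float = 0.9) -> Decision:
    """Score a text, then map the score to a downstream action.

    The thresholds are illustrative assumptions. In practice they would be
    set by weighing the cost of false accusations against missed cases.
    """
    score = detector(text)
    if not 0.0 <= score <= 1.0:
        raise ValueError("detector must return a probability in [0, 1]")
    if score >= flag_at:
        action = "flag"
    elif score >= review_at:
        action = "human_review"
    else:
        action = "no_action"
    return Decision(score, action)


# Stand-in detector for demonstration only: it ignores the text entirely.
decision = triage("An essay of unknown origin.", lambda _text: 0.72)
print(decision.action)  # 0.72 falls between the two thresholds: human_review
```

Keeping the score and the decision in separate steps reflects the workflow described above: the detector only estimates a probability, and what to do with that estimate remains a policy choice.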

This simple description, however, hides a great deal of complexity. It glosses over a number of background assumptions that need to be made explicit. Do you know which AI tools might plausibly have been used to generate the text? What kind of access do you have to these tools? Can you run them yourself, or inspect their inner workings? How much text do you have? Do you have a single text or a collection of writings gathered over time? What AI detection tools can and cannot tell you depends critically on the answers to questions like these.

There is one additional detail that is especially important: Did the AI system that generated the text deliberately embed markers to make later detection easier?

Such markers are known as watermarks. Watermarked text looks like ordinary text, but the markers are embedded in subtle ways that do not reveal themselves to casual inspection. Someone with the right key can later check for the presence of these markers and verify that the text came from a watermarked AI-generated source. This approach, however, relies on cooperation from AI vendors and is not always available.
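One way to picture key-based checking is the toy sketch below. It is loosely modeled on the "green list" family of watermarking schemes from the research literature and is an assumption made for illustration, not a description of any vendor's actual method. All function names are hypothetical. A secret key splits word pairs into "green" and "red" halves, the generator quietly prefers green words, and the verifier counts how many green pairs appear compared with the roughly 50% expected by chance.

```python
import hashlib
import hmac
import math


def is_green(key: bytes, prev_token: str, token: str) -> bool:
    """Keyed coin flip: for a given previous word, about half of all
    candidate next words count as 'green'. Without the key, the split
    looks random."""
    message = f"{prev_token}\x00{token}".encode("utf-8")
    digest = hmac.new(key, message, hashlib.sha256).digest()
    return digest[0] % 2 == 0


def watermark_z_score(text: str, key: bytes) -> float:
    """Count green word pairs and compare with chance.

    In unwatermarked text each pair is green with probability about 0.5,
    so the green count is roughly Binomial(n, 0.5). A large positive
    z-score suggests the text was produced with this key's watermark.
    """
    tokens = text.split()
    n = len(tokens) - 1  # number of adjacent word pairs
    if n < 1:
        raise ValueError("need at least two words")
    green = sum(is_green(key, a, b) for a, b in zip(tokens, tokens[1:]))
    return (green - 0.5 * n) / math.sqrt(0.25 * n)


def toy_embed(key: bytes, vocab: list[str], length: int) -> str:
    """Toy 'generator': always pick a green word when one exists.

    A real system would nudge a language model's word choices instead of
    picking from a fixed list, so its output would read naturally.
    """
    tokens = [vocab[0]]
    while len(tokens) < length:
        prev = tokens[-1]
        # Fall back to the first word if no candidate happens to be green.
        choice = next((w for w in vocab if is_green(key, prev, w)), vocab[0])
        tokens.append(choice)
    return " ".join(tokens)


key = b"demo-secret-key"  # hypothetical key held by the vendor or verifier
vocab = ["river", "stone", "amber", "cloud", "maple", "ocean", "pearl",
         "quiet", "raven", "solar", "tiger", "umbra", "vivid", "wheat",
         "xenon", "yield", "zebra", "blaze", "crane", "delta"]
marked = toy_embed(key, vocab, 60)
print(round(watermark_z_score(marked, key), 2))
```

The toy output is repetitive and would fool no one; the point is only the verification step, which needs the key and the text but no access to the generating model.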

How AI text detection tools work

One obvious approach is to use AI itself to detect AI-written text. The idea is straightforward. Start by collecting a large corpus, meaning a collection of writing, in which each example is labeled as human-written or AI-generated, then train a model to distinguish between the two. In effect, AI text detection is treated as a standard classification problem, similar in spirit to spam filtering. Once trained, the detector examines new text and…