If you’re going to follow the news in artificial intelligence, you had better have a copy of an English dictionary with you, and maybe a couple of etymological dictionaries as well.

Today’s deep learning forms of AI are multiplying the uses of ordinary words in ways that can be deeply misleading. That includes suggesting that the machine is actually doing something that a person does, such as thinking, reasoning, knowing, seeing, or wondering.

The latest example is a new program from DeepMind, the AI unit of Google based in London. DeepMind researchers on Thursday unveiled what they call PonderNet, a program that can make a choice about whether to explore possibilities for a problem or to give up.

DeepMind phrases this exercise on the part of the computer as “pondering,” but really it might just as well be called “cut your losses,” or “halt and catch fire,” seeing as it actually has very little to do with pondering in the human sense of that term, and much more to do with how a computer completes a task.

The program, described by scientists Andrea Banino, Jan Balaguer, and Charles Blundell, dwells at an interesting intersection of neural network design and computer optimization.

The program is all about efficiency of compute, and the trade-off between efficiency and accuracy. As the authors describe it, PonderNet is “a new algorithm that learns to adapt the amount of computation based on the complexity of the problem at hand.”

PonderNet acquires a capability of cutting short its computation effort if it seems the effort up to a point in time is sufficient for the neural net to make an acceptable prediction. Conversely, it can extend its calculations if doing so may produce better results.

The program balances the deep learning goal of accuracy on benchmark tests, on the one hand, against a probabilistic guess that further effort won’t really make much of a difference.

Banino and colleagues built upon work by a number of researchers over the years in areas such as conditional computation. But their most direct influence appears to be the work of their Google colleague Alex Graves.

Graves has amassed a track record of interesting investigations at the intersection of neural network design and computer operation. For example, he and colleagues some years ago proposed a “neural Turing machine” in which the selection of memory from a register file would be the result of a neural network computation.

In the case of PonderNet, Banino and team are building upon Graves’s work in 2016 on what’s called Adaptive Computation Time. The insight in that paper is that in the realm of human reasoning, the statement of a problem and the solution of a problem are often asymmetrical. A problem may sometimes take very little effort to express, but a lot of time to figure out. For example, it’s easier to add two numbers than to divide them, though the symbol notation looks almost identical.

The classic example, per Graves, is Fermat’s Last Theorem, the conjecture that Pierre de Fermat jotted in the margin of a book and that subsequently took mathematicians more than three centuries to prove.

So, Graves came up with a way for a computer to calculate how long it should “ponder” a problem of prediction given the complexity of the problem.

What that actually means is how many layers of a neural network should be allowed for the calculation of a prediction. A neural network program is a transformation machine: it automatically finds a way to transform input into output. The number of layers of artificial neurons through which input must be passed to be successfully transformed into an accurate output is one way to measure the compute effort.

Pondering, then, means varying the number of network layers, and thereby the network’s compute, deciding how soon the computer should give up or if, instead, it should keep trying.

As Graves writes,

In the interests of both computational efficiency and ease of learning it seems preferable to dynamically vary the number of steps for which the network ‘ponders’ each input before emitting an output. In this case the effective depth of the network at each step along the sequence becomes a dynamic function of the inputs received so far.

The term “depth” refers to how many layers of artificial neurons a program should have.

The way Graves went about the matter is to attach to the end of a neural network what’s called a “ponder cost,” an amount of compute time one seeks to minimize. The program then predicts the best amount of compute at which to stop computing its prediction.
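
To make the idea concrete, here is a toy sketch in Python. It is loosely modeled on the halting rule of Adaptive Computation Time, not taken from Graves’s code: the function name `ponder_steps`, the threshold `epsilon`, and the sample scores are all assumptions for illustration. Each layer emits a halting score, the program stops once the running total gets close to one, and the “ponder cost” is taken here to be the number of steps used plus the leftover score.

```python
def ponder_steps(halting_scores, epsilon=0.01):
    """Return (steps_used, ponder_cost) for one input.

    halting_scores: per-step scores in [0, 1], assumed to come from a
    hypothetical halting unit. The last step always halts, which acts
    as a hard cap on computation.
    """
    if not halting_scores:
        raise ValueError("halting_scores must not be empty")

    cumulative = 0.0
    last_step = len(halting_scores)
    for step, score in enumerate(halting_scores, start=1):
        # Stop as soon as the accumulated score crosses the threshold,
        # or when the step budget runs out.
        if cumulative + score >= 1.0 - epsilon or step == last_step:
            remainder = 1.0 - cumulative
            return step, step + remainder
        cumulative += score


# An "easy" input halts after one step; a "hard" one keeps going.
easy_steps, easy_cost = ponder_steps([0.995, 0.5, 0.5])
hard_steps, hard_cost = ponder_steps([0.1, 0.2, 0.8])
print(easy_steps, hard_steps)
```

Adding a cost like this to the training objective, scaled by some weight, is what nudges such a network toward using fewer steps when fewer will do.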

In echoes of Alan Turing’s famous theory of the “halting problem,” which kicked off the age of computing, Graves labeled the part of the program that computes when to stop based on the cost the “halting unit.”

Flash forward to PonderNet. Banino and team take up Graves’s quest, and their main contribution is to re-interpret how to think about that last part, the halting unit. Banino and team notice that Graves’s objective is overly simplistic, in that it simply looks at the last neural network layer and says, “that’s enough,” which is a not terribly sophisticated way to evaluate compute costs.

So, the researchers come up with what they call a probabilistic approach. Rather than simply adding a cost to the program that encourages it to be more efficient, PonderNet uses what’s called a Markov Decision Process, a state model in which, at each layer of processing, the program calculates the likelihood that it is time to stop calculating.

The answer to that question is a bit of a balance: zero probability of halting could be the default, if the initial layers of a neural network have never before been an effective stopping point; but somewhat closer to one may be the probability if past experience indicates a threshold has been crossed at which more compute is going to lead to diminishing returns.

As the authors define it, “At evaluation, the network samples on a step basis from the halting Bernoulli random variable […] to decide whether to continue or to halt.”
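
A rough Python sketch of that step-by-step coin flip might look like the following. It is an illustration under stated assumptions, not DeepMind’s code: the function names, the forced stop at the final step, and the example probabilities are all hypothetical. The second function works out the overall chance of halting at each step, which follows from basic probability: the program halts at step n only if it declined to halt at every earlier step.

```python
import random


def sample_halting_step(halt_probs, rng):
    """Flip one Bernoulli coin per step; return the step where we halt.

    halt_probs: per-step halting probabilities, assumed to be produced
    by the network. The last step is treated as a forced stop.
    """
    if not halt_probs:
        raise ValueError("halt_probs must not be empty")
    for step, prob in enumerate(halt_probs, start=1):
        if rng.random() < prob:
            return step
    return len(halt_probs)


def halting_distribution(halt_probs):
    """Probability of halting at each step.

    P(halt at n) = prob_n * product over earlier steps of (1 - prob_j).
    Any leftover probability is assigned to the final, forced step.
    """
    if not halt_probs:
        raise ValueError("halt_probs must not be empty")
    distribution = []
    still_running = 1.0
    for prob in halt_probs:
        distribution.append(still_running * prob)
        still_running *= 1.0 - prob
    distribution[-1] += still_running
    return distribution


rng = random.Random(0)
step = sample_halting_step([0.0, 1.0], rng)
dist = halting_distribution([0.0, 0.5, 1.0])
print(step, dist)
```

A halting probability of zero at the first step means the program never stops there, while a probability of one guarantees a stop, matching the two extremes described above.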

The proof is in the pudding. Banino and colleagues apply the apparatus to a variety of kinds of neural networks for a variety of tasks. The approach, they write, can be used for numerous kinds of machine learning programs, from simple feed-forward neural nets to so-called self-attention programs such as Google’s Transformer and its descendants.

In their experiments, they find that the program actually changes its amount of computation to do better on a test. Again they go back to Graves, whose paper proposed what’s called a parity test. Feed the program a string of digits consisting of a random assortment of zero, one and negative one, and have it predict the parity of the sequence. A very simple neural network, one with a single layer of artificial neurons, will basically come up with answers that are fifty-fifty accurate, a coin toss.
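
For a sense of what such a test looks like, here is a small Python sketch that generates examples. The labeling rule is an assumption on my part: the label is taken to be 1 if the number of ones in the sequence is odd and 0 if it is even. The function names and the sequence length are likewise hypothetical.

```python
import random


def parity_label(values):
    """Assumed rule: 1 if the count of ones is odd, else 0."""
    return sum(1 for value in values if value == 1) % 2


def make_parity_example(length, rng):
    """Build one random sequence of -1, 0 and 1 plus its parity label."""
    values = [rng.choice((-1, 0, 1)) for _ in range(length)]
    return values, parity_label(values)


rng = random.Random(0)
values, label = make_parity_example(8, rng)
print(values, label)
```

Because flipping a single digit can flip the answer, a model cannot get by on a rough impression of the input; it has to account for every element, which is why longer sequences plausibly call for more computation.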

With the ability to increase computation, the program that Graves and colleagues had built did much better. And Banino and colleagues find their program does even better than that one. In particular, given a greater number of random digits, PonderNet proceeds to arrive at the correct answer with high reliability, versus what starts to be random guessing by Graves and team’s program.

“PonderNet was able to achieve almost perfect accuracy on this hard extrapolation task, whereas ACT [Adaptive Computation Time] remained at chance level,” they write.

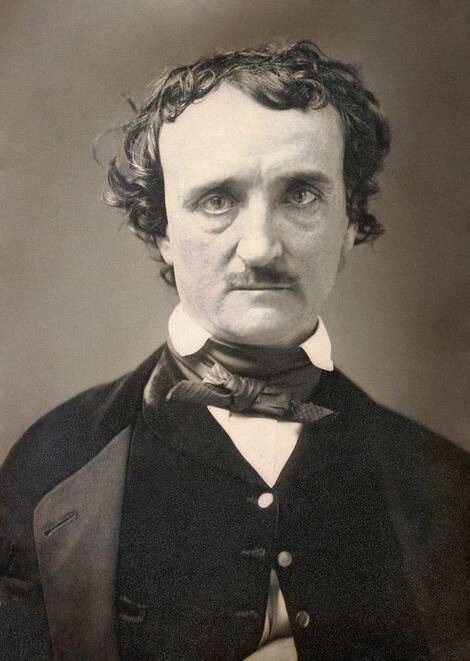

Edgar Allan Poe did not suggest optimization when he wrote in The Raven “Once upon a midnight dreary, while I pondered, weak and weary.”

Unknown author; Restored by Yann Forget and Adam Cuerden

You can see a key shift going on. Graves and team sought to impose a boundary, an amount of efficient compute budget which a program should seek not to exceed. Banino and team in a sense go the opposite way, setting levels of compute which a program may repeatedly exceed, as a hurdle jumper goes over each obstacle, in this case on the path to improved accuracy.

The upshot of all this fascinating science is that it could add an important tool to deep learning, the ability to apply conditions, in a rigorous fashion, to how much work a program should do to arrive at an answer.

The authors note the significance for making neural networks more efficient. The escalating compute budget for AI has been cited as a key ethical issue for the field, given its risk of exacerbating climate change. The authors write, “Neural networks […] require much time, expensive hardware and energy to train and to deploy.

“PonderNet […] can be used to reduce the amount of compute and energy at inference time, which makes it particularly well suited for platforms with limited resources such as mobile phones.”

The authors interestingly also suggest PonderNet takes machine learning in the direction of “behaving more like algorithms, and less like ‘flat’ mappings.” That change, they assert, could “help develop deep learning methods to their full potential,” a rather cryptic assertion.

As promising as PonderNet sounds, you should not be misled by the name. This is not actual pondering. The Merriam-Webster dictionary defines the word ponder as being “to weigh in the mind.” Also offered are the definitions “reflect on” and “to think about.”

Whatever goes on with humans when they do such things, it doesn’t appear to involve a “cost function” to optimize. Nor is a human weighing the likelihood of success when they ponder, at least not in the way a program can be made to do.

Sure, someone might come into the room, see you at your desk, deep in thought, and ask, somewhat exasperatedly, “you’re still thinking about this?” Other people attach a cost function, and calculate the probability that you are wasting your time. The ponderer just ponders.

The poet Edgar Allan Poe captured some of the mysterious quality of the act of pondering — a thing that is much more an experience than a process, a thing without a trace of optimization — in the opening line of his poem The Raven: “Once upon a midnight dreary, while I pondered, weak and weary.”