You may well have seen sci-fi movies or television shows where the protagonist asks to zoom in on an image and enhance the results – revealing a face, or a number plate, or any other key detail – and Google’s newest artificial intelligence engines, based on what’s known as diffusion models, are able to pull off this very trick.

It’s a difficult process to master, because it means adding picture details that the camera never captured, using some super-smart guesswork based on other, similar-looking images.

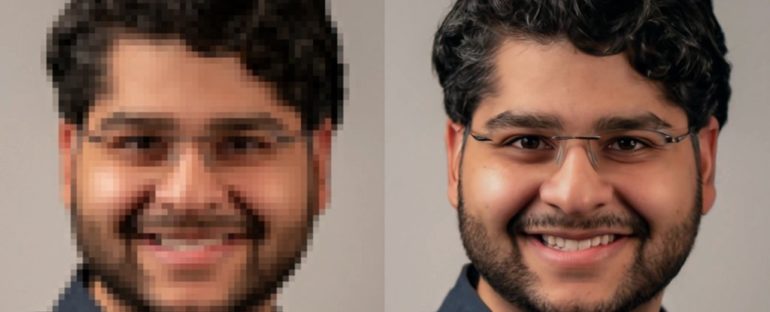

The technique is called natural image synthesis by Google, and in this particular scenario, image super-resolution. You start off with a small, blocky, pixelated photo, and you end up with something sharp, clear, and natural-looking. It may not match the original exactly, but it’s close enough to look real to a pair of human eyes.

(Google Research)

Google has actually unveiled two new AI tools for the job. The first is called SR3, or Super-Resolution via Repeated Refinement. It is trained by adding noise (random unpredictability) to images and learning to reverse the process, taking that noise away again, much as an image editor might try to sharpen up your vacation snaps.

“Diffusion models work by corrupting the training data by progressively adding Gaussian noise, slowly wiping out details in the data until it becomes pure noise, and then training a neural network to reverse this corruption process,” explain research scientist Jonathan Ho and software engineer Chitwan Saharia from Google Research.
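To make that corruption step concrete, here is a minimal Python sketch of the standard forward noising used in diffusion models, where the noisy image at step t is √ᾱ_t · x₀ + √(1 − ᾱ_t) · ε, with ε drawn from a Gaussian. It is a sketch under stated assumptions, not Google’s code: the linear noise schedule (1e-4 to 0.02 over 1,000 steps) and the names `linear_beta_schedule`, `cumulative_alphas` and `add_noise` are illustrative choices, and the “image” is just a flat list of pixel values.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances beta_1..beta_T (an ASSUMED linear schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for all s <= t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, t, alpha_bars, rng):
    """Jump straight to step t of the corruption process:
    x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * eps, eps ~ N(0, 1)."""
    a = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    x_t = [math.sqrt(a) * p + math.sqrt(1.0 - a) * e for p, e in zip(x0, eps)]
    return x_t, eps


rng = random.Random(0)
betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)
image = [0.5] * 16  # a tiny flattened "image", pixels scaled to [-1, 1]
slightly_noisy, _ = add_noise(image, 10, alpha_bars, rng)
almost_pure_noise, _ = add_noise(image, 999, alpha_bars, rng)
```

By the last step the signal coefficient √ᾱ_T has shrunk to well under 1 percent, so what remains is essentially pure noise; the neural network’s job is to learn to predict ε so the process can be run in reverse.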

Having learned how to undo this corruption from a vast database of images, SR3 can start from pure noise and refine it step by step, guided by the blocky low-resolution input, until it arrives at a plausible full-resolution version of the image. You can read more about it in the paper Google has posted on arXiv.
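Generating an image runs that process backwards. The sketch below shows the shape of a DDPM-style refinement loop conditioned on a low-resolution input: start from pure Gaussian noise, then repeatedly subtract the predicted noise. It assumes a tiny 1-D “image”, nearest-neighbour upsampling as a stand-in for the interpolated low-resolution input, and a hypothetical `toy_noise_predictor` in place of SR3’s trained neural network. That toy predictor simply assumes the answer is the upsampled input, so the loop illustrates the mechanics of repeated refinement rather than real enhancement.

```python
import math
import random


def upsample_nearest(low_res, factor):
    """Repeat each pixel `factor` times: a crude stand-in for the interpolated
    low-resolution image that the model is conditioned on."""
    return [p for p in low_res for _ in range(factor)]


def toy_noise_predictor(x_t, condition, t, alpha_bars):
    """HYPOTHETICAL stand-in for SR3's trained network. It estimates the noise
    in x_t by assuming the clean image is exactly the upsampled condition."""
    a = alpha_bars[t]
    return [(x - math.sqrt(a) * c) / math.sqrt(1.0 - a) for x, c in zip(x_t, condition)]


def refine(low_res, factor, betas, rng):
    """DDPM-style ancestral sampling conditioned on a low-resolution input."""
    alphas = [1.0 - b for b in betas]
    alpha_bars, running = [], 1.0
    for alpha in alphas:
        running *= alpha
        alpha_bars.append(running)

    condition = upsample_nearest(low_res, factor)
    x = [rng.gauss(0.0, 1.0) for _ in condition]  # start from pure noise
    for t in reversed(range(len(betas))):
        eps_hat = toy_noise_predictor(x, condition, t, alpha_bars)
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        # Mean of the slightly less noisy image at step t - 1
        mean = [(xi - coef * e) / math.sqrt(alphas[t]) for xi, e in zip(x, eps_hat)]
        if t > 0:
            # Re-inject a little fresh noise (variance beta_t) on every step but the last
            x = [m + math.sqrt(betas[t]) * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean
    return x


rng = random.Random(0)
betas = [1e-4 + i * (0.02 - 1e-4) / 999 for i in range(1000)]  # assumed linear schedule
high_res = refine([0.2, -0.4, 0.9], factor=2, betas=betas, rng=rng)
print([round(p, 3) for p in high_res])  # [0.2, 0.2, -0.4, -0.4, 0.9, 0.9]
```

Because the toy predictor already “knows” the answer, the loop lands exactly on the upsampled input. With a trained network in its place, the same loop would instead land on new, plausible detail learned from the training images.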

The second tool is CDM, or Cascaded Diffusion Models. Google describes these as “pipelines” through which diffusion models, including SR3, can be directed for high-quality image resolution upgrades. A CDM chains several of these enhancement models together, each one upscaling the output of the one before it, to build up larger images, and Google has published a paper on this too.

By using different enhancement models at different resolutions, the CDM approach is able to beat alternative methods for generating high-resolution images, Google says. CDM was tested on ImageNet, a gigantic database of training images commonly used for visual object recognition research.
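A cascade is easiest to picture as a chain of functions, each consuming the previous stage’s output. In this sketch, `make_sr_stage` and `upscale_2x` are hypothetical placeholders, not Google’s API: each stage accepts only one input size, mirroring separate models trained for separate resolutions, and simply doubles the image by repeating pixels where a real stage would run a diffusion model such as SR3.

```python
from typing import Callable, List

Image = List[List[float]]          # grayscale image: a list of pixel rows
Stage = Callable[[Image], Image]   # one model in the cascade


def upscale_2x(image: Image) -> Image:
    """HYPOTHETICAL placeholder for a super-resolution diffusion model.
    Here each pixel is simply copied into a 2x2 block."""
    out: Image = []
    for row in image:
        wide = [p for p in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def make_sr_stage(input_size: int) -> Stage:
    """Build a stage that, like a model trained at one resolution,
    only accepts square images of `input_size` pixels per side."""
    def stage(image: Image) -> Image:
        if len(image) != input_size or any(len(row) != input_size for row in image):
            raise ValueError(f"stage expects {input_size}x{input_size}, got {len(image)} rows")
        return upscale_2x(image)
    return stage


def run_cascade(base_image: Image, stages: List[Stage]) -> Image:
    """Feed each stage's output into the next one."""
    image = base_image
    for stage in stages:
        image = stage(image)
    return image


base = [[0.0] * 16 for _ in range(16)]  # e.g. the output of a low-resolution base model
final = run_cascade(base, [make_sr_stage(16), make_sr_stage(32)])
print(len(final), len(final[0]))  # 64 64
```

Chaining two stages takes a 16×16 image to 64×64; the starting size and the doubling factor are arbitrary example values. Splitting the work this way lets each model specialise in one jump in resolution instead of one model handling everything.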

The end results of SR3 and CDM are striking. In a standard test with 50 human volunteers, who had to choose between a real photo and a generated one, SR3-generated images of human faces were mistaken for real photos around 50 percent of the time. Volunteers who can’t tell the two apart are reduced to guessing, so a perfect algorithm would be expected to hit a 50 percent score, which makes SR3’s result impressive.
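Why a perfect algorithm lands at 50 percent is easy to check with a quick simulation. This sketch assumes each trial shows a volunteer one real and one generated photo and records whether the generated one was picked as real (the fraction of such trials is often called the fool rate); `guessing_volunteer` is a hypothetical volunteer who cannot tell the two apart and so picks at random.

```python
import random
from typing import List


def fool_rate(picked_generated: List[bool]) -> float:
    """Fraction of trials in which the generated image was picked as the real photo."""
    return sum(picked_generated) / len(picked_generated)


def guessing_volunteer(num_trials: int, rng: random.Random) -> List[bool]:
    """HYPOTHETICAL volunteer who can't tell the images apart, so each
    choice is a coin flip between the real and the generated photo."""
    return [rng.random() < 0.5 for _ in range(num_trials)]


rng = random.Random(42)
print(fool_rate(guessing_volunteer(10_000, rng)))  # close to 0.5
```

A weaker algorithm would produce images that volunteers could spot, pushing the fool rate below 50 percent.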

It’s worth reiterating that these enhanced images aren’t exact matches for the originals; rather, they’re carefully calculated simulations based on some advanced probability maths.

Google says the diffusion approach produces better results than alternative options, including generative adversarial networks (GANs), which pit two neural networks against each other: one generates images while the other tries to tell them apart from real ones.

Google is promising much more from its new AI engines and associated technologies – not just in terms of upscaling images of faces and other natural objects, but in other areas of probability modeling as well.

“We are excited to further test the limits of diffusion models for a wide variety of generative modeling problems,” the team explains.