by Frederik Efferenn, Alexander von Humboldt Institut für Internet und Gesellschaft

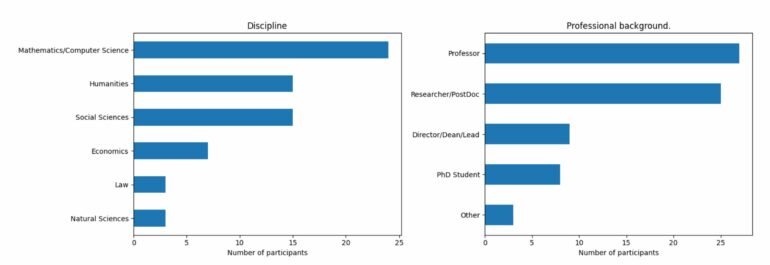

Large Language Models (LLMs), such as those used by the chatbot ChatGPT, have the power to revolutionize the science system. This is the conclusion of a Delphi study conducted by the Alexander von Humboldt Institute for Internet and Society (HIIG), encompassing an extensive survey of 72 international experts specializing in the fields of AI and digitalization research.

The respondents emphasize that the positive effects on scientific practice clearly outweigh the negative ones. At the same time, they stress the urgent task of science and politics to actively combat possible disinformation by LLMs in order to preserve the credibility of scientific research. They therefore call for proactive regulation, transparency and new ethical standards in the use of generative AI.

The study “Friend or Foe? Exploring the Implications of Large Language Models on the Science System” is now available as a preprint on the arXiv server.

According to the experts, the positive effects are most evident in the textual realm of academic work. In the future, large language models will enhance the efficiency of research processes by automating various tasks involved in writing and publishing papers. Likewise, they can alleviate scientists from the mounting administrative reporting and research proposal procedures that have grown substantially in recent years.

As a result, they create additional time for critical thinking and open up avenues for new innovations, as researchers can refocus on their research content and effectively communicate it to a broader audience.

While acknowledging the undeniable benefits, the study underlines the importance of addressing possible negative consequences. As per the respondents, LLMs have the potential to generate false scientific claims that are indistinguishable from genuine research findings at first glance. This misinformation could be spread in public debates and influence policy decisions, exerting a negative impact on society. Similarly, flawed training data from large language models can embed various racist and discriminatory stereotypes in the produced texts.

These errors could infiltrate scientific debates if researchers incorporate LLM-generated content into their daily work without thorough verification.

To overcome these challenges, researchers must acquire new skills. These include the ability to critically contextualize the results of LLMs. At a time when disinformation from large language models is on the rise, researchers need to use their expertise, authority and reputation to advance objective public discourse. They advocate for stricter legal regulations, increased transparency of training data as well as the cultivation of responsible and ethical practices in utilizing generative AI in the science system.

Dr. Benedikt Fecher, the lead researcher on the survey, comments, “The results point to the transformative potential of large language models in scientific research. Although their enormous benefits outweigh the risks, the expert opinions from the fields of AI and digitization show how important it is to concretely address the challenges related to misinformation and the loss of trust in science. If we use LLMs responsibly and adhere to ethical guidelines, we can use them to maximize the positive impact and minimize the potential harm.”

More information:

Benedikt Fecher et al, Friend or Foe? Exploring the Implications of Large Language Models on the Science System, arXiv (2023). DOI: 10.48550/arxiv.2306.09928

Provided by

Alexander von Humboldt Institut für Internet und Gesellschaft

Citation:

Experts encourage proactive use of ChatGPT with new ethical standards (2023, June 19)