Can large language models (LLMs) reason by analogy? Some outputs suggest that they can, but others have argued that these results merely reflect the models mimicking examples of analogical reasoning found in their training data.

To test this claim, researchers have asked LLMs to solve counterfactual problems that are unlikely to resemble problems in their training data. Here is an example:

Let’s solve a puzzle problem involving the following fictional alphabet:

[x y l k w b f z t n j r q a h v g m u o p d i c s e]

Here is the problem:

[x y l k] [x y l w]

[j r q a] [ ? ]

What four letters solve the puzzle?

The correct answer would be “j r q h”: in the fictional alphabet, h comes one letter after a, just as w comes one letter after k. However, many models have failed to solve problems like this one.
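The position counting that this puzzle requires can be made explicit in a few lines of code. The Python sketch below is only an illustration, not the authors' method or the code GPT-4 produced. The function name `solve_successor_analogy` is hypothetical, and the sketch assumes the transformation is a fixed shift in alphabet position at each slot, which fits this example but not every letter-string analogy.

```python
# Fictional alphabet from the example puzzle.
ALPHABET = "x y l k w b f z t n j r q a h v g m u o p d i c s e".split()


def solve_successor_analogy(source, target, query, alphabet):
    """Hypothetical helper: infer the per-slot shift in alphabet position
    from source -> target, then apply the same shifts to query.

    Assumes each slot changes by a fixed number of positions, which covers
    the "successor" rule in the example but not every analogy.
    """
    # Map each letter to its position instead of counting through the list.
    index = {letter: i for i, letter in enumerate(alphabet)}
    shifts = [index[t] - index[s] for s, t in zip(source, target)]

    answer = []
    for letter, shift in zip(query, shifts):
        pos = index[letter] + shift
        if not 0 <= pos < len(alphabet):
            raise ValueError(f"shifting {letter!r} by {shift} leaves the alphabet")
        answer.append(alphabet[pos])
    return answer


answer = solve_successor_analogy(
    ["x", "y", "l", "k"], ["x", "y", "l", "w"], ["j", "r", "q", "a"], ALPHABET
)
print(" ".join(answer))  # j r q h
```

Looking up each letter's index replaces the step of counting through the sequence by hand, which is exactly the kind of counting the puzzle otherwise demands.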

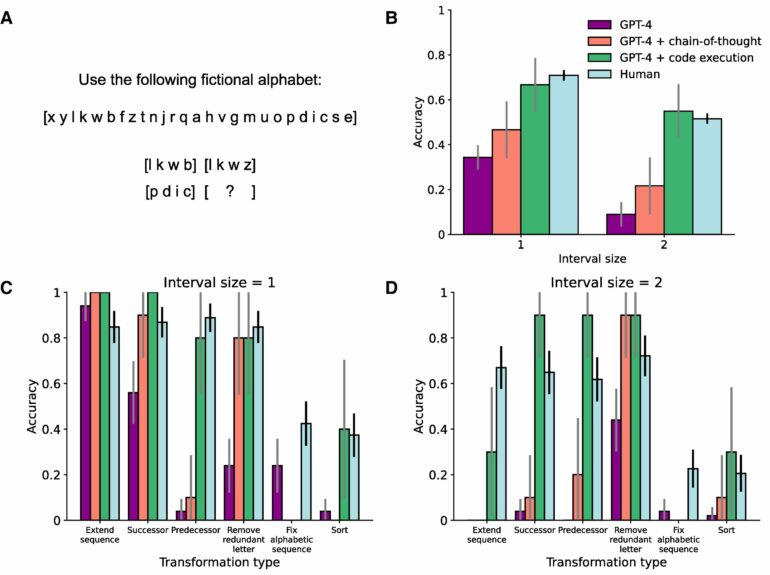

In a study published in PNAS Nexus, Taylor W. Webb and colleagues propose that the failure to solve these counterfactual problems has more to do with LLMs’ well-known difficulty with counting, since the problems require basic counting to establish the position of each letter in the sequence.

The authors evaluated a recent version of GPT-4 that can write and execute code, which allowed the model to write code to count items. This LLM solved the counterfactual letter-string analogies at a roughly human level of performance and gave coherent, accurate explanations justifying the correct solutions.

According to the authors, GPT-4 can use analogies to reason, a capacity that may be supported by a set of structured operations and emergent relational representations.

More information:

Taylor W. Webb et al., Evidence from counterfactual tasks supports emergent analogical reasoning in large language models, PNAS Nexus (2025). DOI: 10.1093/pnasnexus/pgaf135

Provided by PNAS Nexus

Citation: GPT-4 matches human performance on analogical reasoning tasks, study shows (2025, May 27)