Researchers used Oak Ridge National Laboratory’s Quantum Computing User Program (QCUP) to achieve major improvements in quantum fidelity, a potential step toward more accurate, reliable quantum networks and supercomputers.

Quantum computing relies on the transfer and storage of information via quantum bits, known as qubits, rather than the single-value bits used by traditional computers. Unlike classical bits, qubits use the properties of quantum mechanics to hold more than one value at a time, which could let them carry far more information than would otherwise be possible. The technology holds promise for tackling challenges that have so far stumped traditional computers, such as unhackable encryption standards, but first scientists must achieve consistently accurate results.
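To make "more than one value at a time" a little more concrete, here is a minimal illustrative sketch (not code from the study). It assumes a register of five qubits, matching the size of the experiment, each placed in an equal superposition. The state is then described by 2^5 = 32 amplitudes. A measurement still returns only one 5-bit outcome, with probabilities given by the squared magnitudes of those amplitudes. The function names are hypothetical.

```python
import math


def uniform_superposition(n_qubits):
    """Amplitudes after a Hadamard gate on every qubit of |00...0>: 2**n equal values."""
    dim = 2 ** n_qubits
    amp = 1 / math.sqrt(dim)
    return [amp] * dim


def measurement_probabilities(amplitudes):
    """Born rule: each basis outcome occurs with probability |amplitude|**2."""
    return [abs(a) ** 2 for a in amplitudes]


# Hypothetical 5-qubit register: one state vector, 32 amplitudes.
state = uniform_superposition(5)
probs = measurement_probabilities(state)
print(len(state), sum(probs))
```

Each of the 32 possible outcomes is equally likely here. Doubling the number of qubits squares the number of amplitudes, which is where the potential information capacity comes from.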

“We know quantum computers that are available to date can perform functions classical computers can’t,” said Ruslan Shaydulin, the study’s lead author and a Maria Goeppert Mayer fellow at Argonne National Laboratory. “The major problem with existing quantum computers is their relatively high error rate, or noise. How can we reduce that error rate and boost fidelity so we can harvest this computational power to solve major problems? Our study sets the stage for further improvements that suggest it’s only a matter of time before we can put this quantum power to good use.”

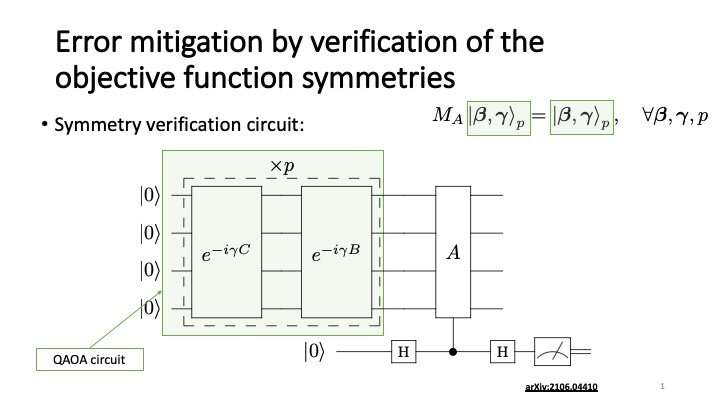

The study by Shaydulin and Alexey Galda, then a research assistant professor at the University of Chicago and now principal scientist at Menten AI, tested an approach to reduce the error rates of a quantum optimization algorithm running on five qubits of a computer from the IBM Quantum Network. Their approach relied on measuring and verifying symmetries across a series of quantum gates.
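The article does not spell out the authors' exact procedure. One common, generic form of symmetry-based error mitigation is post-selection. If the ideal circuit is known to preserve a symmetry, any measured outcome that breaks it must come from an error, so it can be discarded. The sketch below assumes a hypothetical circuit whose ideal output always has even parity, meaning an even number of 1s. It illustrates the general idea only and should not be read as the method used in the study. The counts and function names are invented for illustration.

```python
def parity(bitstring):
    """Return 0 if the bitstring has an even number of 1s, otherwise 1."""
    return bitstring.count("1") % 2


def postselect_on_parity(counts, expected_parity=0):
    """Discard outcomes that violate the assumed parity symmetry, then renormalize."""
    kept = {b: n for b, n in counts.items() if parity(b) == expected_parity}
    total = sum(kept.values())
    if total == 0:
        raise ValueError("no measured outcomes satisfy the symmetry")
    return {b: n / total for b, n in kept.items()}


# Hypothetical raw counts: the odd-parity outcomes "01" and "10" can only arise from noise.
raw_counts = {"00": 45, "11": 43, "01": 7, "10": 5}
mitigated = postselect_on_parity(raw_counts)
print(mitigated)
```

Post-selection trades shots for accuracy, because discarded outcomes are wasted. That trade-off helps explain why large numbers of circuit executions and measurements matter for this kind of mitigation.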

“This algorithm required completing many operations, so inevitably errors would accumulate,” Shaydulin said. “The more operations, the more errors. We wanted to perform as many operations as possible and try to hold down the error rate.”
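A back-of-the-envelope model shows why "the more operations, the more errors." It assumes, as a deliberate simplification, that every gate fails independently with the same hypothetical error rate `gate_error`. Under that assumption, the chance that a whole circuit runs without a single fault decays exponentially with the number of gates. Real hardware errors are gate-dependent and can be correlated, so this only captures the trend.

```python
def circuit_success_probability(gate_error, num_gates):
    """Probability that no gate fails, assuming independent errors at one fixed rate."""
    if not 0.0 <= gate_error <= 1.0:
        raise ValueError("gate_error must be between 0 and 1")
    return (1.0 - gate_error) ** num_gates


# Hypothetical 1% error per gate, for circuits of increasing depth.
for n in (10, 100, 1000):
    print(n, circuit_success_probability(0.01, n))
```

With a 1% per-gate error, roughly 90% of 10-gate runs are error-free. That falls to about 37% for 100 gates and to essentially zero for 1,000 gates. This is why deep optimization circuits need error mitigation.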

Time on the computer was provided by QCUP, part of the Oak Ridge Leadership Computing Facility, which awards time on privately owned quantum processors around the country to support research projects. The program gave the team unrestricted access to perform as many calculations as needed.

“We were able to execute hundreds of circuits and collect millions of measurement outcomes,” Shaydulin said. “We wouldn’t have been able to do this without QCUP’s support.”

The researchers used quantum state tomography, which reconstructs a quantum state from measurements on many identically prepared copies of it, to keep track of values and correct for errors once the computation was complete. The results showed a 23 percent improvement in quantum state fidelity, one of the most successful refinements of its kind for a quantum problem so far.
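For readers curious about what tomography and fidelity involve, here is a minimal single-qubit sketch. The study itself used five qubits, so this is a simplification, and the counts are invented rather than taken from the experiment. The sketch assumes the qubit was measured separately in the X, Y, and Z bases, with outcome "0" recorded as the +1 eigenvalue in each basis. It applies linear inversion, ρ = (I + ⟨X⟩X + ⟨Y⟩Y + ⟨Z⟩Z)/2, and then computes the fidelity F = ⟨ψ|ρ|ψ⟩ against an ideal pure target state ψ. Linear inversion can yield slightly unphysical estimates from finite data, so practical tools often use maximum-likelihood fitting instead.

```python
import math


def expectation(counts):
    """Estimate a Pauli expectation value from counts of +1 ("0") and -1 ("1") outcomes."""
    n0 = counts.get("0", 0)
    n1 = counts.get("1", 0)
    if n0 + n1 == 0:
        raise ValueError("no measurement outcomes")
    return (n0 - n1) / (n0 + n1)


def reconstruct_rho(x_counts, y_counts, z_counts):
    """Linear-inversion estimate of the 2x2 density matrix (I + <X>X + <Y>Y + <Z>Z) / 2."""
    ex, ey, ez = (expectation(c) for c in (x_counts, y_counts, z_counts))
    return [
        [(1 + ez) / 2, (ex - 1j * ey) / 2],
        [(ex + 1j * ey) / 2, (1 - ez) / 2],
    ]


def fidelity(psi, rho):
    """Fidelity <psi|rho|psi> between a pure target state psi and a density matrix rho."""
    n = len(psi)
    total = sum(psi[i].conjugate() * rho[i][j] * psi[j] for i in range(n) for j in range(n))
    return total.real


# Hypothetical data for a qubit meant to be in the |+> state.
rho = reconstruct_rho({"0": 900, "1": 100}, {"0": 500, "1": 500}, {"0": 500, "1": 500})
plus = [1 / math.sqrt(2), 1 / math.sqrt(2)]
print(fidelity(plus, rho))
```

With these made-up counts, ⟨X⟩ = 0.8 and the fidelity to |+⟩ works out to (1 + 0.8)/2 = 0.9. A mitigation scheme is judged by how much it raises this number compared with the unmitigated state.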

“We achieved a much larger-scale validation on this hardware with more qubits than had been done before,” Shaydulin said. “These results put us one step closer to realizing the potential of quantum computers.”

He expects to build on the study’s findings in future experiments.

“We performed the mitigation at the end, but in principle you could perform the correction throughout the operation, which could yield even better results,” Shaydulin said. “This solution would work across a wide range of applications, so it would be easy to combine with other error-mitigation techniques to squeeze as much performance as possible from devices. That’s definitely in the pipeline, probably within the next year. I think we’ll soon be able to obtain even better results.”

More information:

Ruslan Shaydulin et al., Error Mitigation for Deep Quantum Optimization Circuits by Leveraging Problem Symmetries, 2021 IEEE International Conference on Quantum Computing and Engineering (QCE) (2021). DOI: 10.1109/QCE52317.2021.00046

Provided by

Oak Ridge National Laboratory

Citation:

Major improvements in quantum fidelity (2022, January 17)