For many years, a bottleneck in technological development has been getting processors and memories to work faster together. Now, researchers at Lund University in Sweden have presented a new solution that integrates a memory cell with a processor, enabling much faster calculations because they take place in the memory circuit itself.

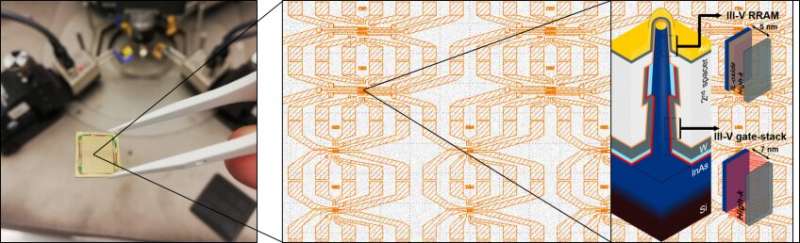

In an article in Nature Electronics, the researchers present a new configuration in which a memory cell is integrated with a vertical transistor selector, all at the nanoscale. This brings improvements in scalability, speed and energy efficiency compared with current mass storage solutions.

The fundamental issue is that any task that processes large amounts of data, such as AI and machine learning, needs both speed and capacity. For this to work, the memory and processor need to be as close to each other as possible. In addition, the calculations must run energy-efficiently, not least because current technology generates a lot of heat under heavy loads.

The problem of processors computing much faster than memory can deliver data has been well known for many years. In technical terms, this is known as the “von Neumann bottleneck.” It arises because the memory and computation units are separate, and sending information back and forth between them over what is known as a data bus takes time, which limits speed.
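To make the bottleneck concrete, here is a back-of-the-envelope sketch in Python. It uses the roofline model, a standard performance model that the article itself does not mention: a processor can only compute as fast as the data bus feeds it, so attainable throughput is the smaller of its peak compute rate and the bus bandwidth multiplied by the workload's arithmetic intensity (operations performed per byte moved). The machine and workload numbers, and the function name `attainable_gflops`, are hypothetical and chosen only for illustration.

```python
def attainable_gflops(peak_gflops: float, bandwidth_gb_s: float, ops_per_byte: float) -> float:
    """Roofline estimate: throughput is capped either by compute or by memory traffic."""
    # GB/s multiplied by operations per byte gives billions of operations per second.
    memory_bound = bandwidth_gb_s * ops_per_byte
    return min(peak_gflops, memory_bound)


# Hypothetical machine: 1000 GFLOP/s of compute fed by a 50 GB/s data bus.
PEAK_GFLOPS = 1000.0
BUS_GB_S = 50.0

# Three hypothetical workloads, from little data reuse to heavy reuse.
for intensity in (0.25, 4.0, 40.0):
    rate = attainable_gflops(PEAK_GFLOPS, BUS_GB_S, intensity)
    print(f"{intensity:5.2f} ops/byte -> {rate:7.1f} GFLOP/s ({rate / PEAK_GFLOPS:.0%} of peak)")
```

With these made-up numbers, only the workload that reuses each byte heavily reaches the processor's peak; the low-reuse workload reaches about 1% of it and spends most of its time waiting on the bus. Computing inside the memory circuit aims to ease this by reducing how much data has to cross the bus in the first place.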

“Processors have developed a lot over many years. On the memory side, storage capacity has steadily increased, but things have been pretty quiet on the function side,” says Saketh Ram Mamidala, doctoral student in nanoelectronics at Lund University and one of the authors of the article.

© Lund University

Traditionally, the limitation has lain in how circuit boards are built, with units placed side by side on a flat surface. The new idea is to build vertically, in a 3D configuration, and to integrate the memory and processor so that computations take place within the memory circuit itself.

“Our version is a nanowire with a transistor at the bottom, and a very small memory element located further up on the same wire. This makes it into a compact integrated function where the transistor controls the memory element. The idea has been around before, but it has proven difficult to achieve performance. Now, however, we have shown that this can be achieved and that it works surprisingly well,” says Lars-Erik Wernersson, professor of nanoelectronics.

The researchers are working with an RRAM (resistive random-access memory) cell, which is nothing new in itself; what is new is that they have achieved a functional integration that opens up great possibilities. It could lead to new research fields and to new, improved functions in everything from AI and machine learning to, eventually, ordinary computers. Future applications could include various forms of machine learning, for example for radar-based gesture control, climate modeling or drug development.

“The memory even works without a power supply,” adds Saketh Ram Mamidala.
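A transistor acting as a selector for a resistive memory element, as Wernersson describes, is commonly called a one-transistor-one-resistor (1T1R) cell. The Python sketch below is a deliberately simplified behavioral model of such a cell, not a model of the Lund device: the class name `OneTransistorOneResistorCell`, the resistance values, the read voltage and the current threshold are all hypothetical. It illustrates two points from the article: the transistor controls access to the memory element, and the stored bit is a resistance that nothing needs to keep refreshing.

```python
from dataclasses import dataclass

# Hypothetical device values for illustration only; real devices differ.
R_LOW = 10e3         # ohms, low-resistance state (stores a 1)
R_HIGH = 1e6         # ohms, high-resistance state (stores a 0)
R_ON = 1e3           # ohms, selector transistor switched on
R_OFF = 1e9          # ohms, selector transistor switched off (leaky, not infinite)
V_READ = 0.2         # volts, small read voltage assumed not to disturb the state
I_THRESHOLD = 1e-6   # amps, decision threshold of an assumed sense amplifier


@dataclass
class OneTransistorOneResistorCell:
    resistance: float = R_HIGH  # state of the resistive memory element

    def write(self, bit: int, gate_on: bool) -> None:
        # Simplification: programming only takes effect when the selector is on.
        if gate_on:
            self.resistance = R_LOW if bit else R_HIGH

    def read_current(self, gate_on: bool) -> float:
        # Transistor and memory element sit in series, so their resistances add.
        r_selector = R_ON if gate_on else R_OFF
        return V_READ / (r_selector + self.resistance)

    def read(self, gate_on: bool = True) -> int:
        # A stored 1 (low resistance) lets noticeably more current through.
        return 1 if self.read_current(gate_on) > I_THRESHOLD else 0


cell = OneTransistorOneResistorCell()
cell.write(1, gate_on=False)      # selector off: the write is blocked
print(cell.read())
cell.write(1, gate_on=True)       # selector on: the cell is programmed
print(cell.read())
print(cell.read(gate_on=False))   # an unselected cell passes almost no current
```

Nothing in the model refreshes the stored resistance: the bit is simply a physical property of the element, which is the sense in which RRAM is non-volatile. Volatile memory such as DRAM, by contrast, loses its contents without power and must be refreshed periodically.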

Researchers at Lund have long been successful at building nanowires in what is known as the III-V technology platform, based on compound semiconductors made from elements in groups III and V of the periodic table, such as the indium arsenide used in this work. The material integration at Lund is unique, and the researchers have benefited greatly from the MAX IV laboratory, both in developing the material and in understanding its chemical properties.

“Solutions can probably be found in silicon as well, which is the most common material but, in our case, it is the choice of material that enables the performance. We want to pave the way for industry with our research,” concludes Lars-Erik Wernersson.

More information:

Mamidala Saketh Ram et al, High-density logic-in-memory devices using vertical indium arsenide nanowires on silicon, Nature Electronics (2021). DOI: 10.1038/s41928-021-00688-5

Citation:

Nanowire transistor with integrated memory to enable future supercomputers (2022, January 10)