Deep learning (DL) has significantly transformed the field of computational imaging, offering powerful solutions to enhance performance and address a variety of challenges. Traditional methods often rely on discrete pixel representations, which limit resolution and fail to capture the continuous and multiscale nature of physical objects. Recent research from Boston University (BU) presents a novel approach to overcome these limitations.

As reported in Advanced Photonics Nexus, researchers from BU’s Computational Imaging Systems Lab have introduced a local conditional neural field (LCNF) network to address this problem. Their scalable and generalizable LCNF system is called “neural phase retrieval,” or “NeuPh” for short.

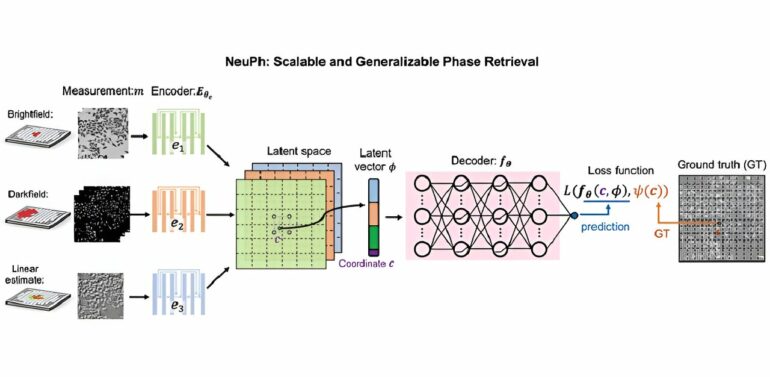

NeuPh leverages advanced DL techniques to reconstruct high-resolution phase information from low-resolution measurements. This method employs a convolutional neural network (CNN)-based encoder to compress captured images into a compact latent-space representation.

A multilayer perceptron (MLP)-based decoder then reconstructs high-resolution phase values from this latent representation, effectively capturing multiscale object information. In this way, NeuPh provides robust resolution enhancement and outperforms both traditional physical model-based methods and current state-of-the-art neural networks.
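To make the encoder/decoder split more concrete, here is a minimal NumPy sketch of the general local-conditional-neural-field idea. It is not NeuPh's actual architecture. Several simplifications are assumptions made for illustration: a single 3x3 stride-2 convolution stands in for the CNN encoder, latent features are bilinearly interpolated at a continuous query coordinate, and the fractional offset inside the latent cell serves as the local coordinate fed to the MLP. The function names (`encode`, `sample_latent`, `decode_at`, `render_phase`) are hypothetical, and all weights are random and untrained.

```python
import numpy as np

rng = np.random.default_rng(0)


def encode(images, kernels):
    """Toy CNN encoder (assumption): one 3x3, stride-2 convolution + ReLU.

    images:  (N_in, H, W) stack of low-resolution intensity measurements
    kernels: (C, N_in, 3, 3) filters
    returns: (C, H', W') coarse latent feature map
    """
    c, n_in, k, _ = kernels.shape
    _, h, w = images.shape
    out_h, out_w = (h - k) // 2 + 1, (w - k) // 2 + 1
    latent = np.empty((c, out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = images[:, 2 * i:2 * i + k, 2 * j:2 * j + k]
            latent[:, i, j] = np.tensordot(kernels, patch, axes=([1, 2, 3], [0, 1, 2]))
    return np.maximum(latent, 0.0)


def sample_latent(latent, x, y):
    """Bilinearly interpolate the latent map at continuous coords x, y in [0, 1].

    Returns the interpolated feature vector and the fractional offset
    (tx, ty) inside the latent cell, used here as the local coordinate.
    """
    _, h, w = latent.shape
    gx, gy = x * (w - 1), y * (h - 1)
    x0, y0 = int(np.floor(gx)), int(np.floor(gy))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    tx, ty = gx - x0, gy - y0
    top = (1 - tx) * latent[:, y0, x0] + tx * latent[:, y0, x1]
    bottom = (1 - tx) * latent[:, y1, x0] + tx * latent[:, y1, x1]
    return (1 - ty) * top + ty * bottom, np.array([tx, ty])


def decode_at(latent, params, x, y):
    """MLP decoder: (local latent features, local coordinate) -> phase value."""
    z, rel = sample_latent(latent, x, y)
    w1, b1, w2, b2 = params
    hidden = np.maximum(w1 @ np.concatenate([z, rel]) + b1, 0.0)
    return float(w2 @ hidden + b2)


def render_phase(latent, params, out_h, out_w):
    """Query the decoder on an arbitrary output grid (any resolution)."""
    phase = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            phase[i, j] = decode_at(latent, params, j / (out_w - 1), i / (out_h - 1))
    return phase


# Hypothetical input: 4 low-resolution 33x33 intensity images.
measurements = rng.random((4, 33, 33))
kernels = rng.normal(scale=0.1, size=(8, 4, 3, 3))
latent = encode(measurements, kernels)  # coarse latent grid, shape (8, 16, 16)

hidden_units = 32
params = (
    rng.normal(scale=0.3, size=(hidden_units, 8 + 2)),  # w1: features + 2 coords
    np.zeros(hidden_units),                              # b1
    rng.normal(scale=0.3, size=hidden_units),            # w2
    0.0,                                                 # b2
)

# The same latent map can be queried on a grid much denser than the input...
phase_hr = render_phase(latent, params, 128, 128)
# ...or at any single continuous location.
phase_point = decode_at(latent, params, 0.37, 0.81)
```

Because the decoder is a function of continuous coordinates rather than a fixed pixel grid, the output resolution is chosen at query time. This is the property the article credits with capturing multiscale, continuous object structure. Training, which would fit the encoder and decoder weights to known phase maps, is omitted from this sketch.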

The reported results highlight NeuPh’s ability to apply continuous and smooth object priors to the reconstruction, yielding more accurate results than existing models. Using experimental datasets, the researchers demonstrated that NeuPh can accurately reconstruct intricate subcellular structures, eliminate common problems such as residual phase unwrapping errors, noise, and background artifacts, and maintain high accuracy even with limited or imperfect training data.

NeuPh also exhibits strong generalization capabilities. It consistently produces high-resolution reconstructions even when trained with very limited data or under different experimental conditions. This adaptability is further enhanced by training on physics-model-simulated datasets, which allows NeuPh to generalize well to real experimental data.

According to lead researcher Hao Wang, “We also explored a hybrid training strategy combining both experimental and simulated datasets, emphasizing the importance of aligning the data distribution between simulations and real experiments to ensure effective network training.”

Wang adds, “NeuPh facilitates ‘super-resolution’ reconstruction, surpassing the diffraction limit of input measurements. By utilizing ‘super-resolved’ latent information during training, NeuPh achieves scalable and generalizable high-resolution image reconstruction from low-resolution intensity images, applicable to a wide range of objects with varying spatial scales and resolutions.”

As a scalable, robust, accurate, and generalizable solution for phase retrieval, NeuPh opens new possibilities for DL-based computational imaging techniques.

More information:

Hao Wang et al., NeuPh: scalable and generalizable neural phase retrieval with local conditional neural fields, Advanced Photonics Nexus (2024). DOI: 10.1117/1.APN.3.5.056005

Citation:

New neural framework enhances reconstruction of high-resolution images (2024, September 5)