When we perceive the world around us, parts of it come into and out of focus. While this visual cue is natural for humans, it is hard for technology to imitate, especially in 3D displays. Researchers at Carnegie Mellon University have developed the Split-Lohmann multifocal display, a new method for generating natural focal blur in a virtual reality headset.

The eye has a lens, and by changing its focal length, it can focus on objects at a specific depth and resolve them in sharp detail, while points at other depths fall out of focus. This ability of the eye, referred to as accommodation, is a crucial cue that 3D displays need to satisfy.
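Accommodation can be put in optical terms with a simplified thin-lens model of the eye (an illustrative assumption, not part of the original work). If the lens-to-retina distance $d_r$ is fixed, focusing on an object at distance $d$ (in meters) requires an optical power $P$, in diopters, of:

```latex
\begin{aligned}
P(d) &= \frac{1}{f(d)} = \frac{1}{d} + \frac{1}{d_r}, \\
\Delta P &= P(d) - P(\infty) = \frac{1}{d}.
\end{aligned}
```

In this model, for example, focusing on an object 0.5 m away instead of at infinity requires 2 diopters of additional power. A display that places all pixels at one fixed distance cannot reproduce this depth-dependent demand.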

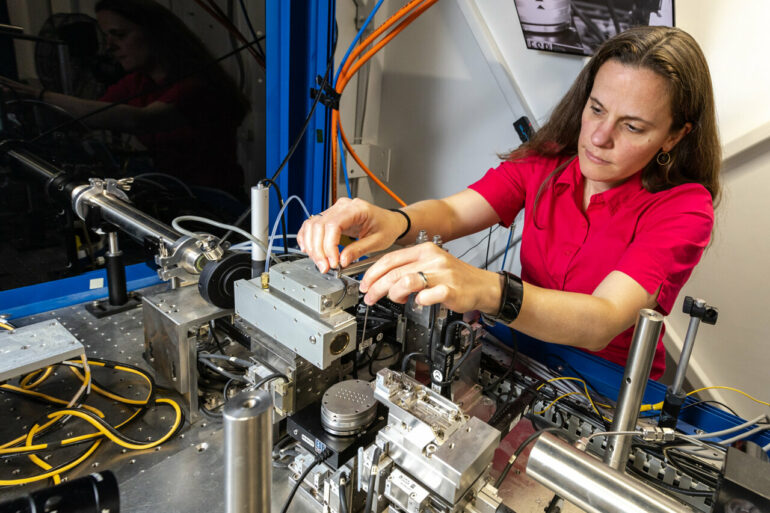

“Most head-mounted displays lack the ability to render focus cues,” says Aswin Sankaranarayanan, professor of electrical and computer engineering. “Conventional headsets consist of a 2D screen and a lens that makes the screen appear to be at a fixed distance from the eye. However, the displayed images remain flat. We have created a lens that can simultaneously position pixels of a display at different distances from the eye.”

The approach revisits ideas from the 1970s, when a focus-tunable lens design known as the Lohmann lens was invented. It consists of two optical elements called cubic phase plates, and its focus is adjusted by shifting the two elements laterally with respect to one another. However, this approach requires mechanical motion and operates slowly, both of which are undesirable in AR/VR displays.
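Why a lateral shift changes focus can be worked out in a few lines. The derivation below uses a common textbook form of a Lohmann-type lens with assumed symbols: each plate has a cubic phase profile with constant $\kappa$, the plates are shifted by $\pm\delta$ along both $x$ and $y$, and $\lambda$ is the wavelength. Sign conventions vary between treatments.

```latex
\begin{aligned}
\phi_1(x,y) &= \kappa\left[(x+\delta)^3 + (y+\delta)^3\right], \qquad
\phi_2(x,y) = -\kappa\left[(x-\delta)^3 + (y-\delta)^3\right], \\
\phi_1 + \phi_2 &= \kappa\left[6\delta\,(x^2+y^2) + 4\delta^3\right], \\
\phi_{\text{lens}}(x,y) &= -\frac{\pi}{\lambda f}\,(x^2+y^2)
\quad\Longrightarrow\quad
P = \frac{1}{f} = -\frac{6\lambda\kappa}{\pi}\,\delta .
\end{aligned}
```

The $4\kappa\delta^3$ term is a constant phase offset with no effect on focus, so the pair acts as a thin lens whose optical power is proportional to the shift $\delta$.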

The new display splits the Lohmann lens, placing the two cubic plates in different parts of the optical system. A phase modulator positioned between them makes the translation optical rather than mechanical. This arrangement has an added benefit: different parts of the scene can be subjected to different amounts of translation, and so be placed at different distances from the eye.
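The idea of per-region focus can be illustrated with a deliberately simplified Python model (an assumption for illustration, not the authors' implementation). Two complementary cubic phase plates with a hypothetical constant `kappa`, shifted by plus and minus `delta` in x and y, combine into a lens-like quadratic phase 6·kappa·delta·(x² + y²) plus a constant, so their optical power is linear in the shift. Giving each scene region its own shift, which the phase modulator effectively does in the split design, therefore gives each region its own focal power. All function names, parameter values, and the region table are hypothetical.

```python
import math

# Simplified model (assumption): two complementary cubic phase plates shifted
# laterally by +delta and -delta along both x and y act as a thin lens whose
# optical power is linear in delta. Not the authors' actual optical design.


def combined_phase(x, y, kappa, delta):
    """Phase (radians) of two complementary cubic plates shifted by +/-delta."""
    plate1 = kappa * ((x + delta) ** 3 + (y + delta) ** 3)
    plate2 = -kappa * ((x - delta) ** 3 + (y - delta) ** 3)
    return plate1 + plate2


def optical_power(kappa, delta, wavelength):
    """Optical power 1/f (diopters when lengths are in meters).

    Matching the quadratic term 6*kappa*delta*(x^2 + y^2) against the thin-lens
    phase -pi/(wavelength*f) * (x^2 + y^2) gives 1/f = -6*wavelength*kappa*delta/pi.
    The sign depends on the phase convention chosen.
    """
    return -6.0 * wavelength * kappa * delta / math.pi


def region_powers(shifts, kappa, wavelength):
    """Map each (hypothetical) scene region's shift to its own optical power."""
    return {name: optical_power(kappa, d, wavelength) for name, d in shifts.items()}


if __name__ == "__main__":
    kappa = 2.0e9        # hypothetical plate constant, rad/m^3
    wavelength = 532e-9  # green light, meters
    delta = 1e-3         # hypothetical shift, meters

    # The phase left after removing the quadratic term should be the same
    # constant (4*kappa*delta**3) at every point, up to floating-point rounding.
    for x, y in [(0.0, 0.0), (1e-3, -2e-3), (2.5e-3, 1e-3)]:
        residual = combined_phase(x, y, kappa, delta) - 6 * kappa * delta * (x**2 + y**2)
        print(f"constant phase at ({x}, {y}): {residual:.6f} rad")

    # Different regions receive different shifts, hence different focal powers.
    shifts = {"near object": 1.0e-3, "mid object": 0.5e-3, "background": 0.0}
    for name, power in region_powers(shifts, kappa, wavelength).items():
        print(f"{name}: {power:+.3f} D")
```

Because power scales linearly with shift in this model, a per-region shift map translates directly into a per-region focus map, which is the property the split arrangement exploits.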

“The advantage of this system is that we can create a virtual 3D scene that satisfies the eye’s accommodation without resorting to high-speed focus stacks. And everything operates in real time, which is very favorable for near-eye displays,” explains Yingsi Qin, Ph.D. student in electrical and computer engineering and the lead author on this work.

This improvement to focusing technology could benefit many disciplines, from photography to gaming, but medical technology may have the most to gain.

“There are domains beyond entertainment that will benefit from such 3D displays,” says Matthew O’Toole, an assistant professor in the Robotics Institute and a co-author on this work. “The display could be used for robotic surgery, where the multi-focal capabilities of the display provide a surgeon with realistic depth cues.”

The team will present their research findings at the SIGGRAPH 2023 Conference this August.

More information: View the team’s project page.

Provided by Carnegie Mellon University Electrical and Computer Engineering

Citation: Researchers develop new method for generating natural focal blur in a VR headset (2023, June 22)