Over the past decade or so, roboticists have developed increasingly sophisticated systems that can help humans complete a variety of tasks, both at home and in other environments. To assist users, however, these robots must be able to navigate and explore their surroundings efficiently without colliding with nearby objects.

Although a number of navigation systems and techniques now exist, the mobility of most robots is still fairly limited, particularly in unknown and unmapped environments. Most existing navigation methods have two main components: one that constructs a map the robot can use as a reference (e.g., simultaneous localization and mapping, or SLAM, techniques) and one that generates collision-free or optimal paths for the robot (e.g., probabilistic roadmaps or rapidly exploring random trees).
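To illustrate the path-planning half of such a conventional pipeline, here is a minimal, textbook-style rapidly exploring random tree (RRT) in 2D. It is not part of the researchers' system, which is mapless. It assumes a known map with circular obstacles and a point robot, and the function name `rrt` and all of its parameters are illustrative choices. The tree grows by sampling a random point, extending the nearest existing node a small step toward it, and keeping the new node only if the connecting segment avoids obstacles.

```python
import math
import random


def rrt(start, goal, obstacles, bounds, step=0.5, goal_tol=0.5, max_iters=5000, seed=0):
    """Minimal 2D RRT (illustrative sketch).

    obstacles: list of circles (cx, cy, r); bounds: (xmin, xmax, ymin, ymax).
    Returns a list of (x, y) points from start to goal, or None if no path is found.
    """
    rng = random.Random(seed)
    nodes = [start]
    parent = {0: None}

    def collision_free(p, q, samples=10):
        # Approximate check: test evenly spaced points along segment p->q.
        for i in range(samples + 1):
            t = i / samples
            x = p[0] + t * (q[0] - p[0])
            y = p[1] + t * (q[1] - p[1])
            for cx, cy, r in obstacles:
                if math.hypot(x - cx, y - cy) <= r:
                    return False
        return True

    xmin, xmax, ymin, ymax = bounds
    for _ in range(max_iters):
        # Bias about 10% of samples toward the goal to speed up convergence.
        if rng.random() < 0.1:
            sample = goal
        else:
            sample = (rng.uniform(xmin, xmax), rng.uniform(ymin, ymax))
        # Nearest existing tree node to the sample (linear scan).
        i_near = min(range(len(nodes)), key=lambda i: math.dist(nodes[i], sample))
        near = nodes[i_near]
        d = math.dist(near, sample)
        if d == 0:
            continue
        # Steer: move at most `step` from the nearest node toward the sample.
        scale = min(step, d) / d
        new = (near[0] + scale * (sample[0] - near[0]),
               near[1] + scale * (sample[1] - near[1]))
        if not collision_free(near, new):
            continue
        nodes.append(new)
        parent[len(nodes) - 1] = i_near
        if math.dist(new, goal) <= goal_tol and collision_free(new, goal):
            # Follow parent links back to the root to recover the path.
            path = []
            i = len(nodes) - 1
            while i is not None:
                path.append(nodes[i])
                i = parent[i]
            path.reverse()
            if path[-1] != goal:
                path.append(goal)
            return path
    return None
```

For example, `rrt((0.0, 0.0), (9.0, 9.0), [(5.0, 5.0, 1.5)], (0.0, 10.0, 0.0, 10.0))` returns a jagged but collision-free list of waypoints around the circular obstacle. Note that the planner needs the obstacle map up front, which is exactly the dependency that mapless approaches try to remove.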

Although some of these methods have achieved promising results, they tend to be highly sensitive to noise picked up by a robot's sensors. Because they also rely heavily on maps, they often perform poorly in dynamic or rapidly changing environments. Deep learning-based navigation approaches that do not rely on maps could ultimately help to overcome these limitations.

Researchers at Nanjing University of Aeronautics and Astronautics and the National University of Defense Technology in China have recently developed a new system that could enable more efficient robot navigation in indoor environments. Instead of relying on pre-defined maps, this system uses a training approach known as generative imitation learning, allowing robots to navigate their surroundings and fulfill their goals.

“Our method takes a multi-view observation of a robot and a target as inputs at each time step to provide a sequence of actions that move the robot to the target without relying on odometry or GPS at runtime,” the researchers wrote in their paper.

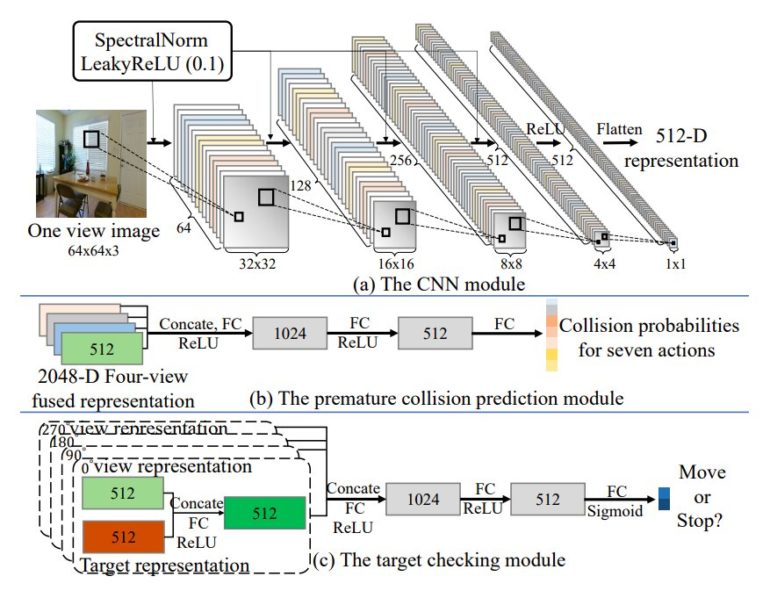

The navigation system devised by the researchers has three key components. The first is a variational generative module trained on human demonstrations, which is designed to predict changes in the environment before a robot starts planning its actions.

The second component predicts static collisions, improving the safety of the robot’s navigation. Finally, a target-checking module considers the final action or target that a robot is trying to achieve, using this information to devise more effective navigation policies.
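To make this division of labour more concrete, here is a hypothetical sketch of a runtime loop in which a policy scores candidate actions from the current observation and target, a collision predictor vetoes actions it considers unsafe, and a target check decides when to stop. This is not the authors' architecture. In the paper the modules are learned neural networks and may shape training rather than act as a runtime filter. The variational generative module is omitted here, and every name (`Navigator`, `ACTIONS`, `collision_threshold`, and the stub callables) is an assumption made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

# Hypothetical discrete action set; the real system's action space may differ.
ACTIONS = ("forward", "turn_left", "turn_right")

Observation = Sequence[float]  # stand-in for a multi-view image observation
Target = Sequence[float]       # stand-in for a target specification


@dataclass
class Navigator:
    # Each callable is a placeholder for a learned model.
    policy: Callable[[Observation, Target], List[float]]  # one score per action
    collision_prob: Callable[[Observation, str], float]    # estimated collision risk
    target_reached: Callable[[Observation, Target], bool]  # target-checking stand-in
    collision_threshold: float = 0.5

    def step(self, obs: Observation, target: Target) -> str:
        """Pick one action using only the current observation and target (no odometry/GPS)."""
        if self.target_reached(obs, target):
            return "stop"
        scores = self.policy(obs, target)
        # Try actions from highest to lowest policy score.
        ranked = sorted(range(len(ACTIONS)), key=lambda i: scores[i], reverse=True)
        for i in ranked:
            # Skip actions the collision predictor flags as too risky.
            if self.collision_prob(obs, ACTIONS[i]) < self.collision_threshold:
                return ACTIONS[i]
        return "stop"  # every candidate action looks unsafe

    def run(self, observe: Callable[[], Observation], act: Callable[[str], None],
            target: Target, max_steps: int = 100) -> List[str]:
        """Closed loop: observe, decide, act, until 'stop' or max_steps is reached."""
        actions: List[str] = []
        for _ in range(max_steps):
            action = self.step(observe(), target)
            actions.append(action)
            if action == "stop":
                break
            act(action)
        return actions
```

With toy stubs, for example a policy that always prefers `forward`, a robot that moves 1 unit per `forward`, and a target check that fires within 0.5 units of a goal 3 units away, `run` returns `["forward", "forward", "forward", "stop"]`. Keeping each module behind a plain callable makes it easy to swap a stub for a trained network.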

“The three proposed designs all contribute to the improved training data efficiency, static collision avoidance, and navigation generalization performance, resulting in a novel target-driven mapless navigation system,” the researchers explained in their paper.

In the future, the new system could be used to enhance the navigation of other robots designed to operate in homes, offices or other indoor environments. The results achieved by the system could also inspire other researchers to create similar tools for more efficient target-driven robot navigation.

So far, the navigation system has been evaluated in a series of real-world experiments using TurtleBot, a low-cost robotic platform created by two engineers at Willow Garage. The results of these tests are highly promising: the system was easily integrated into the robot and allowed it to navigate indoor environments more effectively.


More information:

Wu et al., Towards target-driven visual navigation in indoor scenes via generative imitation learning. arXiv:2009.14509 [cs.RO]. arxiv.org/abs/2009.14509

© 2020 Science X Network

Citation:

A system to improve a robot's indoor navigation (2020, October 30) retrieved 30 October 2020 from https://techxplore.com/news/2020-10-robot-indoor.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.