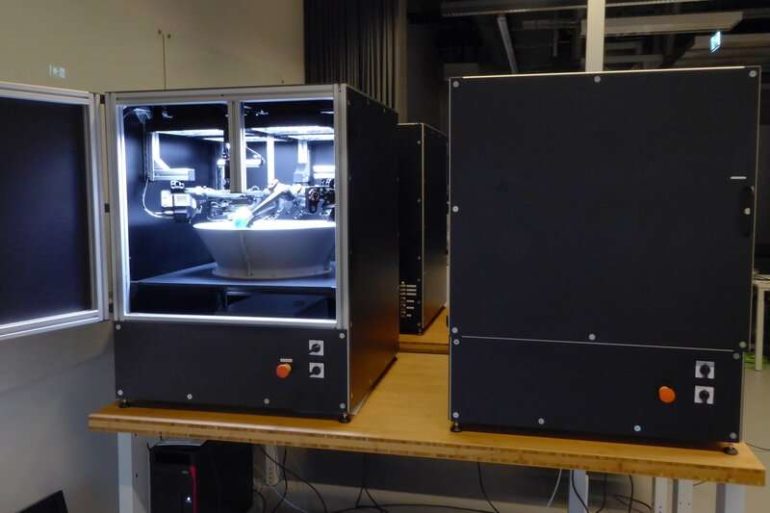

Last year, the Max Planck Institute for Intelligent Systems organized the Real Robot Challenge, a competition that challenged academic labs to come up with solutions to the problem of repositioning and reorienting a cube using a low-cost robotic hand. The teams participating in the challenge were asked to solve a series of object manipulation problems with varying difficulty levels.

To tackle one of the problems posed by the Real Robot Challenge, researchers at University of Toronto’s Vector Institute, ETH Zurich and MPI Tubingen developed a system that allows robots to acquire challenging dexterous manipulation skills, effectively transferring these skills from simulations to a real robot. This system, presented in a paper pre-published on arXiv, achieved a remarkable success rate of 83% in allowing the remote TriFinger system proposed by the challenge organizers to complete challenging tasks that involved dexterous manipulation.

“Our objective was to use learning-based methods to solve the problem introduced in last year’s Real Robot Challenge in a low-cost manner,” Animesh Garg, one of the researchers who carried out the study, told TechXplore. “We are particularly inspired by previous work on OpenAI’s Dactyl system, which showed that it is possible to use model-free reinforcement learning in combination with domain randomization to solve complex manipulation tasks.”

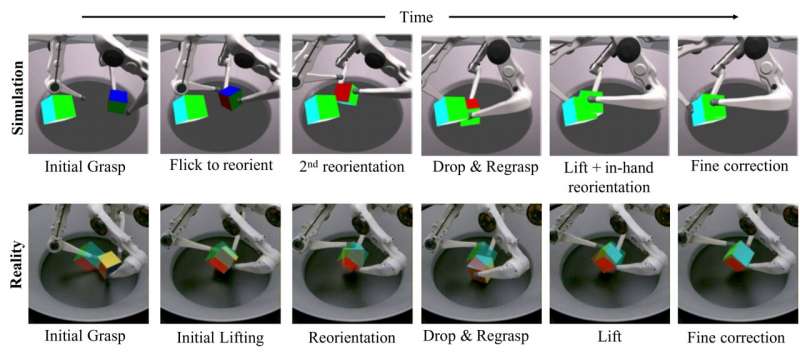

Essentially, Garg and his colleagues wanted to demonstrate that they could solve dexterous manipulation tasks using a TriFinger robotic system, transferring results achieved in simulations to the real world using fewer resources than those employed in previous studies. To do this, they trained a reinforcement learning agent in simulations and created a deep learning technique that can plan future actions based on a robot’s observations.

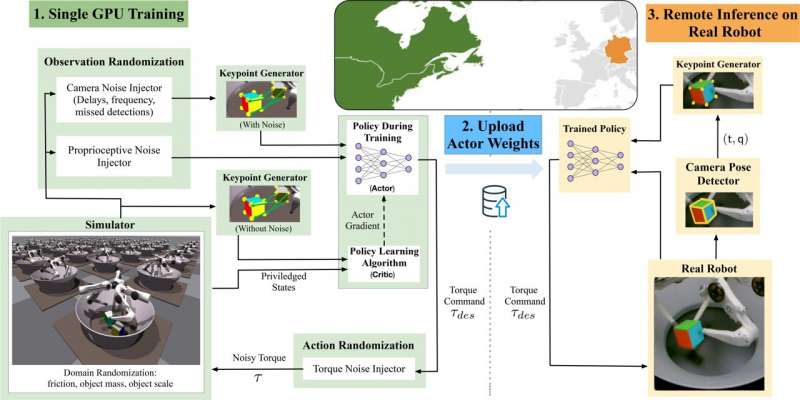

“The process we followed consists of four main steps: setting up the environment in physics simulation, choosing the correct parameterization for a problem specification, learning a robust policy and deploying our approach on a real robot,” Garg explained. “First, we created a simulation environment corresponding to the real-world scenario we were trying to solve.”

The simulated environment was created using NVIDIA’s recently released Isaac Gym simulator, which runs highly realistic simulations on NVIDIA GPUs. By using the Isaac Gym platform, Garg and his colleagues were able to significantly reduce the amount of computation necessary to translate dexterous manipulation skills from simulations to real-world settings, decreasing their system’s requirements from a cluster with hundreds of CPUs and multiple GPUs to a single GPU.

“Reinforcement learning requires us to use representations of variables in our problem appropriate to solving the task,” Garg said. “The Real Robot Challenge required competitors to repose cubes in both position and orientation. This made the task significantly more challenging than previous efforts, as the learned neural network controller needed to be able to trade off these two objectives.”

To solve the object manipulation problem posed by the Real Robot Challenge, Garg and his colleagues decided to use a ‘keypoint representation,’ a way of representing objects by focusing on the main ‘interest points’ in an image. These are points that remain unchanged irrespective of an image’s size, rotation, distortions or other variations.

In their study, the researchers used keypoints to represent the pose of a cube that the robot was expected to manipulate in the image data fed to their neural network. They also used them to calculate the so-called reward function, which can ultimately allow reinforcement learning algorithms to improve their performance over time.

“Finally, we added randomizations to the environment,” Garg said. “These include randomizing the inputs to the network, the actions it takes, as well as various environment parameters such as the friction of the cube and adding random forces upon it. The result of this is to force the neural network controller to exhibit behavior which is robust to a range of environment parameters.”
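The randomizations Garg describes can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the team's implementation: every function name is hypothetical, and the noise levels, friction range, push probability and force magnitude are placeholder values.

```python
import numpy as np


def sample_cube_friction(rng, low=0.5, high=1.5):
    """Draw a per-episode friction coefficient (range is an assumption)."""
    return rng.uniform(low, high)


def noisy_observation(obs, rng, std=0.002):
    """Add Gaussian noise to the network's inputs."""
    return obs + rng.normal(0.0, std, size=obs.shape)


def noisy_action(action, rng, std=0.02, low=-1.0, high=1.0):
    """Perturb the network's action, then clip it back into the valid range."""
    return np.clip(action + rng.normal(0.0, std, size=action.shape), low, high)


def random_push(rng, probability=0.05, max_force=0.5):
    """Occasionally return a random 3D force to apply to the cube, else zero."""
    if rng.random() < probability:
        direction = rng.normal(size=3)
        direction /= np.linalg.norm(direction)
        return direction * rng.uniform(0.0, max_force)
    return np.zeros(3)
```

In a training loop, the friction would typically be resampled at the start of each episode, while the observation noise, action noise and random pushes would be applied at every simulation step.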

The researchers trained their reinforcement learning model in the simulated environment they created using Isaac Gym over the course of one day. In simulation, 16,000 simulated robots ran in parallel, producing roughly 50,000 steps per second of data that was then used to train the network.
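For a rough sense of scale, the figures above can be multiplied out. The short Python calculation below assumes the quoted throughput was sustained for a full 24 hours, which the article does not state explicitly, so the totals are order-of-magnitude estimates only.

```python
NUM_ROBOTS = 16_000              # simulated robots, from the article
STEPS_PER_SECOND = 50_000        # approximate aggregate throughput, from the article
TRAINING_SECONDS = 24 * 60 * 60  # assumption: one full day at steady throughput

# Total simulation steps collected across all robots.
total_steps = STEPS_PER_SECOND * TRAINING_SECONDS

# Average number of steps contributed by each simulated robot.
steps_per_robot = total_steps / NUM_ROBOTS

print(f"total steps: {total_steps:,}")
print(f"steps per simulated robot: {steps_per_robot:,.0f}")
```

Under that assumption, the run amounts to about 4.3 billion simulation steps in total, or roughly 270,000 steps per simulated robot.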

“The policy was then uploaded to the robot farm, where it was deployed on a random robot from a pool of multiple similar robots,” Garg said. “Here, the policy does not get re-trained based on each robot’s unique parameters—it is already able to adapt to them. After the manipulation task is completed, the data is uploaded to be accessed by the researchers.”

Garg and his colleagues were ultimately able to transfer the results achieved by their deep reinforcement learning algorithm in simulations to real robots, using far less computational power than other teams required in the past. In addition, they demonstrated that highly parallel simulation tools can be integrated with modern deep reinforcement learning methods to solve challenging dexterous manipulation tasks.

The researchers also found that the use of keypoint representation led to faster training and a higher success rate in real-world tasks. In the future, the framework they developed could help to accelerate research on dexterous manipulation and sim2real transfer, for instance by allowing researchers to develop policies entirely in simulation with moderate computational resources and deploy them on real low-cost robots.

“We now hope to build on our framework to continue to advance the state of in-hand manipulation for more general-purpose manipulation beyond in-hand reposing,” Garg said. “This work lays the foundation for us to study the core concepts of the language of manipulation, particularly tasks that involve direct grasping and object reorientation ranging from opening water bottles to grasping coffee cups.”

More information:

Arthur Allshire et al, Transferring dexterous manipulation from GPU simulation to a remote real-world trifinger. arXiv:2108.09779v1 [cs.RO], arxiv.org/abs/2108.09779

© 2021 Science X Network

Citation: A system to transfer robotic dexterous manipulation skills from simulations to real robots (2021, October 20), retrieved 21 October 2021 from https://techxplore.com/news/2021-10-robotic-dexterous-skills-simulations-real.html