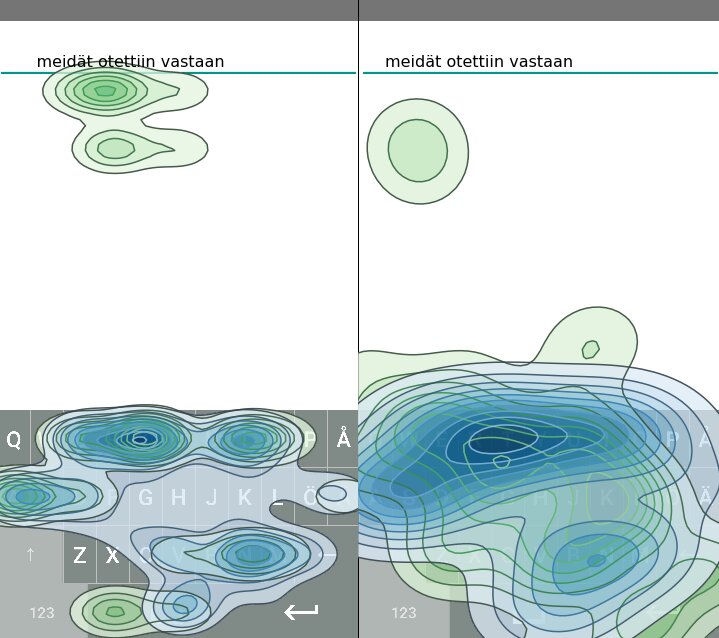

Touchscreens are notoriously difficult to type on. Since we can’t feel the keys, we rely on the sense of sight to move our fingers to the right places and check for errors, a combination of tasks that is difficult to accomplish simultaneously. To really understand how people type on touchscreens, researchers at Aalto University and the Finnish Center for Artificial Intelligence (FCAI) have created the first artificial intelligence model that predicts how people move their eyes and fingers while typing.

The AI model can simulate how a human user would type any sentence on any keyboard design. It makes errors, detects them (though not always immediately), and corrects them much as humans would. The simulation also predicts how people adapt to changing circumstances, such as how their typing style changes when they start using a new auto-correction system or keyboard design.

“Previously, touchscreen typing has been understood mainly from the perspective of how our fingers move. AI-based methods have helped shed new light on these movements: What we’ve discovered is the importance of deciding when and where to look. Now, we can make much better predictions on how people type on their phones or tablets,” says Dr. Jussi Jokinen, who led the work.

The study, to be presented at ACM CHI on 12 May, lays the groundwork for developing better, and even personalized, text entry solutions.

“Now that we have a realistic simulation of how humans type on touchscreens, it should be a lot easier to optimize keyboard designs for better typing—meaning fewer errors, faster typing, and, most importantly for me, less frustration,” Jokinen explains.

In addition to predicting how a generic person would type, the model can also account for different types of users, such as those with motor impairments, and could be used to develop typing aids or interfaces designed with these groups in mind. For users facing no particular challenges, it can infer from their personal writing style (for instance, from mistakes that recur in their texts and emails) what kind of keyboard or auto-correction system would serve them best.

The novel approach builds on the group’s earlier empirical research, which provided the basis for a cognitive model of how humans type. The researchers then produced a generative model capable of typing independently. The work was done as part of a larger project on Interactive AI at the Finnish Center for Artificial Intelligence.

The results are underpinned by a classic machine learning method, reinforcement learning, that the researchers extended to simulate people. Reinforcement learning is normally used to teach robots to solve tasks by trial and error; the team found a new way to use this method to generate behavior that closely matches that of humans—mistakes, corrections and all.

“We gave the model the same abilities and bounds that we, as humans, have. When we asked it to type efficiently, it figured out how to best use these abilities. The end result is very similar to how humans type, without having to teach the model with human data,” Jokinen says.

Comparisons with human typing data confirmed that the model’s predictions were accurate. In the future, the team hopes to simulate both slow and fast typing techniques in order to, for example, design useful learning modules for people who want to improve their typing.

The paper, “Touchscreen Typing As Optimal Supervisory Control,” will be presented 12 May 2021 at the ACM CHI conference.


More information:

Find the paper and other materials: userinterfaces.aalto.fi/touchscreen-typing/

Citation: AI learns to type on a phone like humans (2021, May 12), retrieved 16 May 2021 from https://techxplore.com/news/2021-05-ai-humans.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without written permission. The content is provided for information purposes only.