Emerging technologies such as artificial intelligence (AI) algorithms, mobile robots and unmanned aerial vehicles (UAVs) could enhance practices in a variety of fields, including cinematography. In recent years, many cinematographers and entertainment companies have begun exploring the use of UAVs to capture high-quality aerial video footage (i.e., video of specific locations filmed from above).

Researchers at the University of Zaragoza and Stanford University recently created CineMPC, a computational tool that autonomously controls a drone’s on-board camera. The technique, introduced in a paper posted as a preprint on arXiv, could significantly enhance current UAV-based cinematography practices.

“When reading existing literature about autonomous cinematography and, in particular, autonomous filming drones, we noticed that existing solutions focus on controlling the extrinsics of the camera (e.g., the position and rotation of the camera),” Pablo Pueyo, one of the researchers who carried out the study, told TechXplore. “According to cinematography literature, however, one of the most decisive factors that determine good or bad footage is controlling the intrinsic parameters of the camera’s lens, such as focus distance, focal length and aperture.”

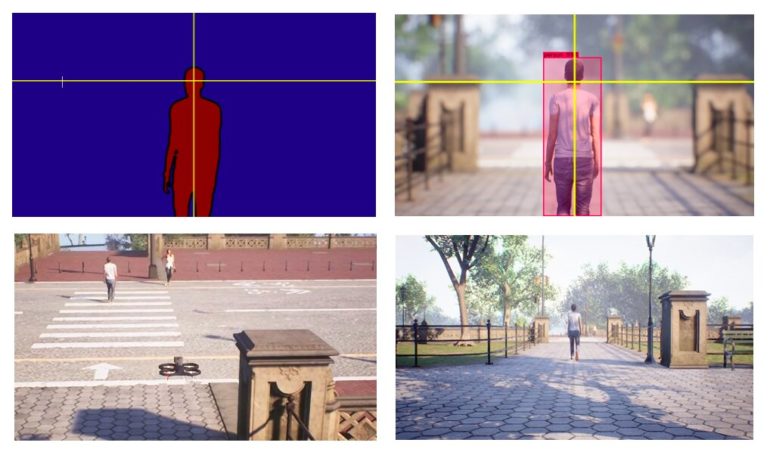

A camera’s intrinsic parameters (e.g., focus distance, focal length and aperture) determine how a scene is rendered in the image: which parts appear sharp or blurred, and how much of the scene fits in the frame. These choices can ultimately change a viewer’s perception of a given scene. Adjusting these parameters allows cinematographers to create specific effects, for instance producing footage with a shallow or deep depth of field, or zooming into specific parts of a scene. The overall objective of the recent work by Pueyo and his colleagues was to optimize a drone’s movements together with these lens parameters, so that such effects are produced automatically.
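To make the link between lens settings and the image concrete, the sketch below computes depth of field and horizontal field of view using the standard thin-lens approximations. It is illustrative only: the function names, the 0.03 mm circle of confusion and the 36 mm (full-frame) sensor width are assumptions, not values or formulas taken from the CineMPC paper.

```python
import math

def depth_of_field(focal_length_mm, f_number, focus_distance_mm, coc_mm=0.03):
    """Return (near, far) limits of acceptable sharpness, in mm.

    Thin-lens approximation; coc_mm is the acceptable circle of confusion
    (0.03 mm is a common full-frame assumption, not a CineMPC parameter).
    """
    f, s = focal_length_mm, focus_distance_mm
    # Hyperfocal distance: focusing here keeps everything from H/2 to infinity acceptably sharp.
    hyperfocal = f * f / (f_number * coc_mm) + f
    near = s * (hyperfocal - f) / (hyperfocal + s - 2 * f)
    # At or beyond the hyperfocal distance, the far limit extends to infinity.
    far = math.inf if s >= hyperfocal else s * (hyperfocal - f) / (hyperfocal - s)
    return near, far

def horizontal_fov_deg(focal_length_mm, sensor_width_mm=36.0):
    """Horizontal field of view of a rectilinear lens, in degrees."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))

# Same 50 mm lens and 5 m subject distance, two different apertures.
for n in (2.8, 11):
    near, far = depth_of_field(50, n, 5000)
    print(f"f/{n}: sharp from {near / 1000:.2f} m to {far / 1000:.2f} m")

# Zooming in (longer focal length) narrows the field of view.
for f in (24, 50, 85):
    print(f"{f} mm: {horizontal_fov_deg(f):.1f} deg")
```

Under these assumptions, opening the aperture from f/11 to f/2.8 shrinks the zone of sharpness around the subject, which is the kind of shallow-focus effect a cinematographer might request.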

In one of their previous studies, Pueyo and his colleagues developed CinemAirSim, a simulator of drones carrying an on-board cinematographic camera whose lens parameters can be controlled. By integrating CinemAirSim with CineMPC, their new algorithm, the researchers were able to simulate the effects that specific changes in the intrinsic parameters of a lens would have on the video footage collected by a drone.

“Using the well-known Model Predictive Control (MPC), an advanced method of process control, we optimize the extrinsic and intrinsic parameters of the drone’s camera to satisfy a set of artistic and composition guidelines given by the user,” Pueyo explained. “MPC finds the optimal parameters to configure the camera, minimizing the cost functions that are related to the user’s constraints. These cost functions are mathematical expressions that help us to control some artistic aspects such as the depth of field, the canonical shots described by the literature, or the position of the elements of the scene, such as actors in a particular position of the resulting image (e.g., satisfying the ‘rule of thirds’).”
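As an illustration of what one such cost function might look like, the sketch below scores how far a subject lands from a rule-of-thirds intersection, using a simple pinhole camera model. The pinhole model, the quadratic penalty, the 36 mm sensor and all function names are assumptions for illustration; the paper’s actual cost terms may differ. Note that the cost depends on both the subject’s position relative to the camera (extrinsics) and the focal length (an intrinsic).

```python
def focal_length_px(focal_length_mm, sensor_width_mm, image_width_px):
    """Convert a focal length in mm to pixels for a given sensor and image width."""
    return focal_length_mm * image_width_px / sensor_width_mm

def project(point_cam, focal_px, cx, cy):
    """Pinhole projection of a point in camera coordinates
    (x right, y down, z forward along the optical axis)."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point is behind the camera")
    return focal_px * x / z + cx, focal_px * y / z + cy

def thirds_cost(point_cam, focal_px, width, height, target=(1 / 3, 1 / 3)):
    """Squared distance, in normalized image coordinates, between the projected
    subject and a rule-of-thirds intersection (upper-left by default)."""
    u, v = project(point_cam, focal_px, width / 2, height / 2)
    du = u / width - target[0]
    dv = v / height - target[1]
    return du * du + dv * dv

actor = (-1.0, -0.5, 10.0)  # hypothetical actor position in the camera frame, metres
for f_mm in (24, 50, 85):
    fpx = focal_length_px(f_mm, 36.0, 1920)
    print(f"{f_mm} mm: cost = {thirds_cost(actor, fpx, 1920, 1080):.4f}")
```

With the camera held still, changing only the zoom moves the off-center actor across the frame, so an optimizer that may adjust both pose and focal length has more ways to satisfy the composition constraint.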

CineMPC can detect specific objects or people in a scene and track them while following an approximate camera trajectory specified by the user. Notably, this trajectory also includes the user’s desired values for the intrinsic parameters.
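The receding-horizon idea behind MPC can be shown with a toy controller that tracks a user-specified reference for a single intrinsic, the focal length. This is a minimal sketch under loud assumptions: the integrator model, the discrete set of zoom rates, the weights and the names `mpc_step` and `simulate` are all hypothetical, and exhaustive search over a few control sequences stands in for the numerical optimizer a real MPC (including CineMPC, which jointly handles extrinsics and intrinsics) would use.

```python
import itertools

def mpc_step(current, reference, rates=(-5.0, 0.0, 5.0), effort_weight=0.1):
    """Solve one finite-horizon problem and return only the first control.

    current:   focal length now (mm)
    reference: desired focal lengths for the next len(reference) steps (mm)
    rates:     allowed zoom changes per step (mm), a hypothetical actuator limit
    """
    horizon = len(reference)
    best_cost, best_first = float("inf"), 0.0
    # Exhaustive search over every control sequence (len(rates) ** horizon of them).
    for seq in itertools.product(rates, repeat=horizon):
        value, cost = current, 0.0
        for target, u in zip(reference, seq):
            value += u  # integrator model: each control shifts the focal length
            cost += (value - target) ** 2 + effort_weight * u * u
        if cost < best_cost:
            best_cost, best_first = cost, seq[0]
    return best_first

def simulate(start, reference, horizon=3):
    """Receding-horizon loop: re-plan at every step, apply only the first control."""
    value, trajectory = start, [start]
    for t in range(len(reference) - 1):
        window = list(reference[t + 1 : t + 1 + horizon])
        # Near the end of the shot, hold the last reference value.
        window += [reference[-1]] * (horizon - len(window))
        value += mpc_step(value, window)
        trajectory.append(value)
    return trajectory

# Hypothetical user plan: zoom in from 35 mm to 60 mm over the shot, then hold.
plan = [35.0, 40.0, 45.0, 50.0, 55.0, 60.0, 60.0, 60.0]
print(simulate(35.0, plan))
```

Re-solving the problem at every step and discarding all but the first control is what makes this receding-horizon (model predictive) control; it lets the controller react if the scene or the reference changes mid-shot.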

“To date, and to the best of our knowledge, there are no prior solutions that optimize the parameters of the lens of the camera to achieve cinematographic objectives,” Pueyo said. “This is very practical in terms of autonomous drone filming. Non-experts and experts in cinematography can state some cinematographic constraints that our solution will satisfy by tweaking the extrinsic and intrinsic parameters of the camera.”

In the future, the new tool could enhance drone-based cinematography, allowing filmmakers to capture higher-quality aerial footage. Unlike previously developed methods, CineMPC continuously adapts the camera’s intrinsic parameters to achieve the user’s artistic objectives.

“We are now planning to improve CineMPC with more sophisticated artistic and robotic ideas,” Pueyo said. “For instance, we would like to introduce more filming drones (i.e., devise a multi-robot approach) and optimize additional artistic intelligence to ensure that the drone can autonomously decide the best artistic guidelines, rather than following the constraints introduced by a user.”


More information:

CineMPC: Controlling camera intrinsics and extrinsics for autonomous cinematography. arXiv:2104.03634 [cs.RO]. arxiv.org/abs/2104.03634

© 2021 Science X Network

Citation: CineMPC: An algorithm to enable autonomous drone-based cinematography (2021, May 5) retrieved 5 May 2021 from https://techxplore.com/news/2021-05-cinempc-algorithm-enable-autonomous-drone-based.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.