A team of researchers at Yale University has developed a new kind of algorithm to improve the functionality of a robot hand. In their paper published in the journal Science Robotics, the group describes the algorithm and demonstrates, via videos, how it can be used.

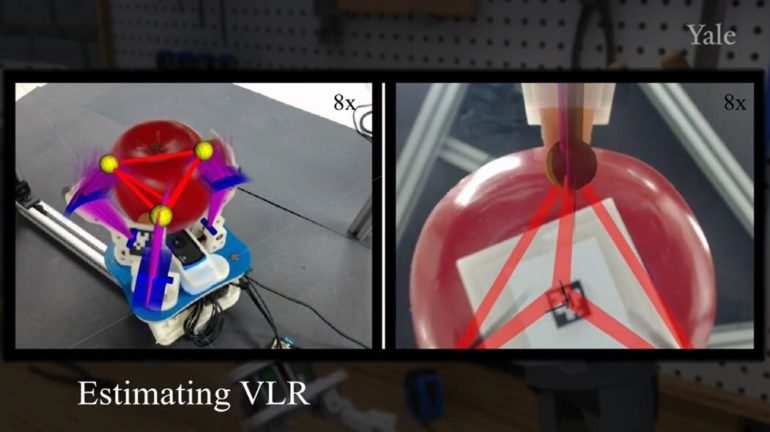

As the researchers note, most robot hands rely on data-intensive programming to achieve their results. That approach works well for single-function tasks but poorly when the environment in which the hand operates changes. To address this problem, the researchers developed what they call a virtual linkage representation (VLR) algorithm. It is an analytical method that maps the desired motion onto points on the object to be manipulated; the team describes these mappings as linkages. The approach requires less information about the environment: instead of multiple sensors, it needs just one camera mounted on the hand. To achieve a particular goal, the robot hand continually refines its movement predictions from the start of the task, resampling along the way, until the predictions reach near-convergence.
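The article does not spell out the mathematics behind VLR. Purely as a loose illustration of the idea of refining predictions with resampling until near-convergence, the Python sketch below estimates one hypothetical virtual linkage (a pivot point that an object point swings around) from a simulated single-camera track. It is not the authors' algorithm: it swaps in a generic cross-entropy-style sample-and-resample search, and the names `observe_arc`, `linkage_cost` and `estimate_pivot`, the variance-of-distances cost, and every parameter value are assumptions invented for this example.

```python
import math
import random


def observe_arc(pivot, radius, angles, noise, rng):
    """Simulated camera track of one object point swinging about a pivot.

    Hypothetical stand-in for real vision data: the point sits at a fixed
    distance (the link length) from an unknown pivot, plus Gaussian noise.
    """
    px, py = pivot
    return [
        (px + radius * math.cos(a) + rng.gauss(0.0, noise),
         py + radius * math.sin(a) + rng.gauss(0.0, noise))
        for a in angles
    ]


def linkage_cost(candidate, points):
    """How badly a candidate pivot explains the track (assumed cost).

    A rigid virtual link keeps the observed point at a constant distance
    from its pivot, so the variance of those distances should be near zero.
    """
    cx, cy = candidate
    dists = [math.hypot(x - cx, y - cy) for x, y in points]
    mean = sum(dists) / len(dists)
    return sum((d - mean) ** 2 for d in dists) / len(dists)


def _mean_std(values):
    """Population mean and standard deviation of a list of floats."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return mean, math.sqrt(var)


def estimate_pivot(points, n_samples=200, n_keep=20, tol=1e-4,
                   max_iters=200, seed=0):
    """Sample, score, keep the best, resample; stop when hypotheses agree."""
    rng = random.Random(seed)
    # Start with hypotheses spread around the centroid of the observations.
    gx = sum(x for x, _ in points) / len(points)
    gy = sum(y for _, y in points) / len(points)
    samples = [(gx + rng.uniform(-1.0, 1.0), gy + rng.uniform(-1.0, 1.0))
               for _ in range(n_samples)]
    for it in range(1, max_iters + 1):
        # Keep the hypotheses that best explain what the camera saw.
        elite = sorted(samples, key=lambda c: linkage_cost(c, points))[:n_keep]
        mx, sx = _mean_std([c[0] for c in elite])
        my, sy = _mean_std([c[1] for c in elite])
        # Near-convergence: the surviving hypotheses agree to within tol.
        if max(sx, sy) < tol:
            return (mx, my), it
        # Resample around the survivors with noise matched to their spread,
        # so the search narrows as the estimate converges.
        samples = elite + [
            (e[0] + rng.gauss(0.0, sx), e[1] + rng.gauss(0.0, sy))
            for e in (rng.choice(elite) for _ in range(n_samples - n_keep))
        ]
    return (mx, my), max_iters


# Usage: a point swinging through a half circle about a hidden pivot.
rng = random.Random(42)
angles = [math.pi * i / 29 for i in range(30)]
track = observe_arc((0.3, -0.2), 0.5, angles, noise=0.002, rng=rng)
(pivot_x, pivot_y), iterations = estimate_pivot(track)
print(f"pivot ~ ({pivot_x:.3f}, {pivot_y:.3f}) after {iterations} iterations")
```

The stopping rule loosely mirrors the article's "near-convergence" wording: the loop ends once the surviving hypotheses agree to within `tol`, rather than after a fixed number of steps.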

The researchers tested their algorithm using the Yale Model O hand, a three-fingered, open-source robotic hand designed for use in research. In its initial state, the hand has no tactile sensors or encoders. In the first experiment, the researchers directed the hand to grasp an object such as a tomato while they monitored its parameters. They then tested the hand with a wine glass, a box of Jell-O, a screwdriver and a Lego block, allowing manipulation to continue until VLR convergence. Next, they used the robot hand to draw the letter “O” and followed that up by having it write the word “SCIENCE.” They also showed that the hand can control the rotation of an object by having it play a handheld marble maze game.

The researchers next demonstrated the superiority of their approach over prior methods with a cup-stacking task in which smaller cups were inserted into larger ones. Using a conventional approach, the robot hand failed at the task; with the VLR algorithm, it successfully picked up and stacked five cups.


More information:

Kaiyu Hang et al., Manipulation for self-Identification, and self-Identification for better manipulation, Science Robotics (2021). DOI: 10.1126/scirobotics.abe1321

2021 Science X Network

Citation: Using a virtual linkage representation algorithm to improve functionally of a robot hand (2021, May 20) retrieved 22 May 2021 from https://techxplore.com/news/2021-05-virtual-linkage-representation-algorithm-functionally.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.