In a project aimed at developing intelligent tools to fight child exploitation, University of Groningen computer scientists have developed a system to analyze the noise produced by individual cameras. This information can be used to link a video or an image to a particular camera. The results were published in the journals SN Computer Science on 4 Jun 2022 and Expert Systems with Applications on 10 Jun 2022.

The Netherlands is the main distributor of digital content showing child sexual abuse, as reported by the Internet Watch Foundation in 2019. To fight this type of abuse, forensic tools are needed to analyze digital content in order to identify which images or videos contain suspicious child abuse content. Another untapped source of information is the noise in the images or video frames. As part of an EU project, University of Groningen computer scientists, together with colleagues from the University of León (Spain), have found a way to extract and classify the noise from an image or a video that reveals the “fingerprint” of the camera with which it was made.

“You could compare it to the specific grooves on a fired bullet,” says George Azzopardi, assistant professor in the Information Systems research group at the Bernoulli Institute for Mathematics, Computer Science and Artificial Intelligence at the University of Groningen. Each firearm produces a specific pattern on the bullet, so forensic experts can match a bullet found at a crime scene to a specific firearm, or link two bullets found at different crime scenes to the same weapon.

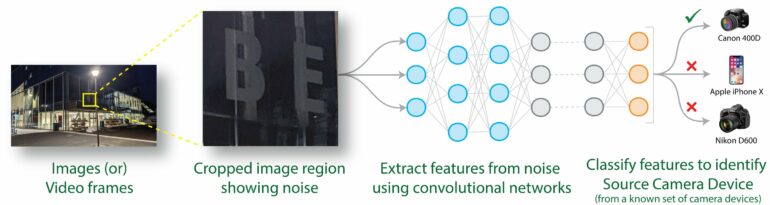

“Every camera has some imperfections in its embedded sensors, which manifest themselves as image noise in all frames but are invisible to the naked eye,” explains Azzopardi. This produces a camera-specific noise. Guru Bennabhaktula, a Ph.D. student both in Groningen and at the University of León, developed a system to extract and analyze this noise. “In image recognition, classifiers are used to extract information on the shapes and textures of objects in the image to identify a scene,” says Bennabhaktula. “We used these classifiers to extract the camera-specific noise.”

He created a computational model to extract camera noise from video frames shot with 28 different cameras, taken from the publicly available VISION dataset, and used this to train a convolutional neural network. Subsequently, he tested whether the trained system could recognize frames made by the same camera. “It turned out we could do this with a 72 percent accuracy,” says Bennabhaktula. He also notes that the noise can be unique to a brand of cameras, to a specific type, and to individual cameras. In another set of experiments, he achieved 99 percent accuracy in classifying 18 camera models using images from the publicly available Dresden dataset.

His work formed part of an EU project, 4NSEEK, in which scientists and law enforcement agencies collaborated to develop intelligent tools to help fight child exploitation. Azzopardi says that “each group was responsible for developing a specific forensic tool.” The model that was created by Bennabhaktula could have such a practical use. “If the police find a camera on a child abuse suspect, they can link it to images or videos found on storage devices.”

The model is scalable, adds Bennabhaktula. “By using only five random frames from a video, it is possible to classify five videos per second. The classifier used in the model has been used by others to distinguish over 10,000 different classes for other computer vision applications.” This means that the classifier could compare the noise from tens of thousands of cameras. The 4NSEEK project has now ended, but Azzopardi is still in touch with forensic specialists and law enforcement agencies to continue this research line. “And we are also working on identifying source similarity between a pair of images, which has different challenges. That will form our next paper on this subject.”

More information:

Guru Swaroop Bennabhaktula et al, Source Camera Device Identification from Videos, SN Computer Science (2022). DOI: 10.1007/s42979-022-01202-0

Guru Swaroop Bennabhaktula et al, Camera model identification based on forensic traces extracted from homogeneous patches, Expert Systems with Applications (2022). DOI: 10.1016/j.eswa.2022.117769

Dasara Shullani et al, VISION: a video and image dataset for source identification, EURASIP Journal on Information Security (2017). DOI: 10.1186/s13635-017-0067-2

Thomas Gloe et al, The ‘Dresden Image Database’ for benchmarking digital image forensics, Proceedings of the 2010 ACM Symposium on Applied Computing—SAC ’10 (2010). DOI: 10.1145/1774088.1774427

Provided by

University of Groningen

Citation:

Sensor imperfections are perfect for forensic camera analysis (2022, June 21)