The most important thing you need to understand about the role Arm processor architecture plays in any computing or communications market — smartphones, personal computers, servers, or otherwise — is this: Arm Holdings, Ltd., which is based in Cambridge, UK, designs the components of processors for others to build. Arm owns these designs, along with the architecture of their instruction sets, such as 64-bit ARM64. Its business model is to license the intellectual property (IP) for these components and the instruction set to other companies, enabling them to build systems around them that incorporate their own designs as well as Arm’s. For its customers who build systems around these chips, Arm has done the hard part for them.

How does Arm, a chip company, conduct business without making chips?

Arm Holdings, Ltd. does not manufacture its own chips. It has no fabrication facilities of its own. Instead, it licenses its designs to other companies, which Arm Holdings calls “partners.” They utilize Arm’s architectural model as a kind of template, building systems that use Arm cores as their central processors.

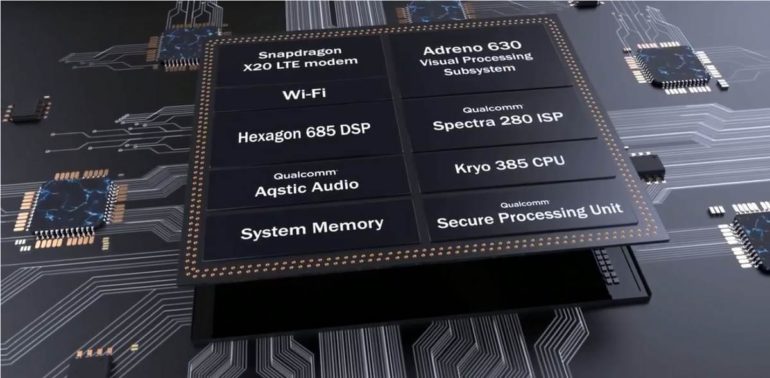

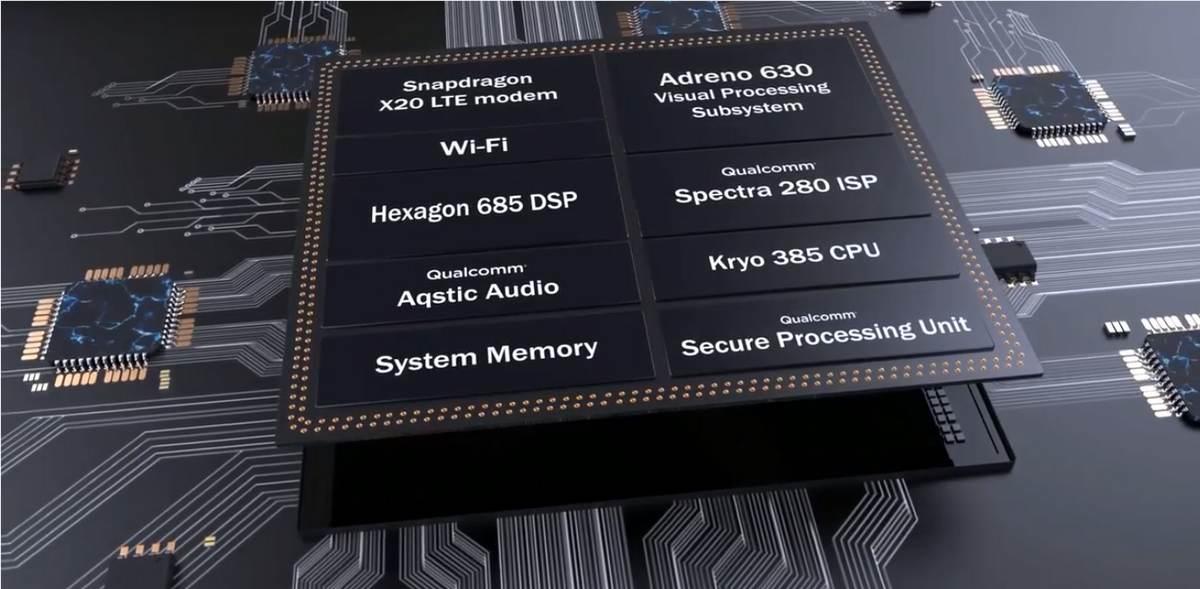

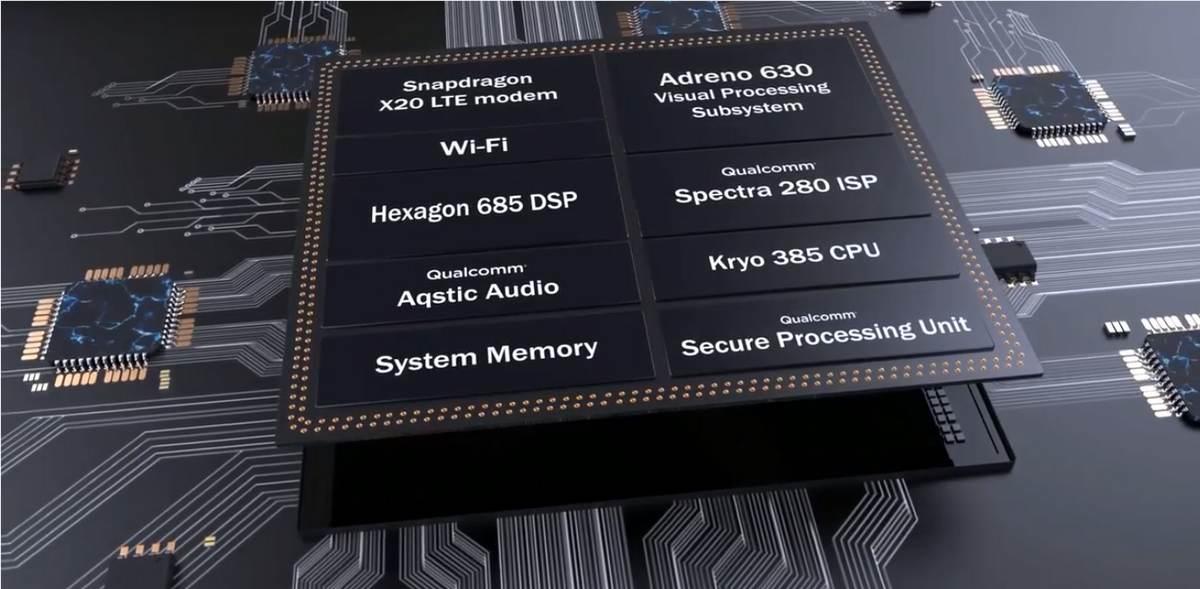

These Arm partners are given the opportunity to design, and possibly manufacture, their systems around these processors, or else outsource their production to others, but in any event sell implementations of these designs in commercial markets. Many Samsung and Apple smartphones and tablets, and essentially all devices produced by Qualcomm, utilize some Arm intellectual property. A new wave of servers produced with Arm-based systems-on-a-chip (SoC) has already made headway in competing against x86, especially with low-power or special-use models. Each device incorporating an Arm processor tends to be its own, unique system, like the multi-part Qualcomm Snapdragon 845 mobile processor depicted above.

(Qualcomm announced its 865 Plus 5G mobile platform in July 2020. In January 2021, the chip maker announced its 888 5G mobile platform would power Samsung’s Galaxy S21, S21+, and S21 Ultra smartphones.)

Perhaps the best explanation of Arm’s business model, as well as its relationship with its own intellectual property, is to be found in a 2002 filing with the US Securities and Exchange Commission:

We take great care to establish and maintain the proprietary integrity of our products. We focus on designing and implementing our products in a “cleanroom” fashion, without the use of intellectual property belonging to other third parties, except under strictly maintained procedures and express license rights. In the event that we discover that a third party has intellectual property protections covering a product that we are interested in developing, we would take steps to either purchase a license to use the technology or work around the technology in developing our own solution so as to avoid infringement of that other company’s intellectual property rights. Notwithstanding such efforts, third parties may yet make claims that we have infringed their proprietary rights, which we would defend.

Why x86 is sold and Arm is licensed

The maker of an Intel- or AMD-based x86 computer neither designs nor owns any portion of the intellectual property for the CPU. It also cannot reproduce x86 IP for its own purposes. “Intel Inside” is a seal certifying a license for the device manufacturer to build a machine around Intel’s processor. The maker of an Arm-based device, by contrast, may design the device to incorporate the processor, perhaps even adapting the processor’s architecture and functionality. For that reason, rather than a “central processing unit” (CPU), an Arm processor is instead called a system-on-a-chip (SoC). Much of the functionality of the device may be fabricated onto the chip itself, cohabiting the die with Arm’s exclusive cores, rather than built around the chip in separate processors, accelerators, or expansions.

As a result, a device run by an Arm processor, such as one of the Cortex series, is a different order of machine from one run by an Intel Xeon or an AMD Epyc. It means something quite different to be an original device based around an Arm chip. Most importantly from a manufacturer’s perspective, it means a somewhat different, and hopefully more manageable, supply chain. Since Arm has no interest in marketing itself to end users, you don’t typically hear much about “Arm Inside.”

Equally important, however, is the fact that an Arm chip is not necessarily a central processor. Depending on the design of its system, it can be the heart of a device controller, a microcontroller (MCU), or some other subordinate component in a system.

What’s the relationship between Arm and Apple?

Apple CEO Tim Cook announces his company’s chip manufacturing unit at WWDC 2020.

Apple Silicon is the phrase Apple presently uses to describe its own processor production, beginning in June 2020 with Apple’s announcement of the replacement of its x86 Mac processor line. In its place, in Mac laptop units that are reportedly already shipping, will be a new system-on-a-chip called the A12Z Bionic, produced by Apple using the 64-bit instruction set architecture licensed to it by Arm Holdings, Ltd. In this case, Arm isn’t the designer of the chip, but the licensor of the instruction set around which Apple makes its original design. In December 2020, Apple chose Taiwan-based TSMC as the fabricator for its A12Z.

For macOS 11 to continue to run software compiled for Intel processors on an Arm SoC, the new system includes an instruction translator called Rosetta 2. Rather than run an old macOS image in a virtual machine, the new OS translates x86-64 machine code into Arm64 machine code, mostly ahead of time when an application is installed or first launched, and “just in time” for code that an application generates while it runs. (What Apple now calls Universal 2 is a separate mechanism: a single binary that packages native code for both Intel-based and Arm-based Macs, so that it can still run on older Intel-based Macs without translation.) Sources outside of Apple often call Rosetta 2 an “emulator,” but it isn’t really an emulator, in that it doesn’t simulate the execution of code in an actual, physical machine.

The first results of independent performance benchmarks, comparing an iPad Pro using the A12Z chip planned for the first Arm-based Macs against Microsoft Surface models, looked promising. Geekbench results as of the time of this writing gave the Bionic-powered tablet a multi-core processing score of 4669 (higher is better), versus 2966 for the Surface Pro X, which is itself powered by an Arm-based Microsoft SQ-series processor, and 3033 for the Core i5-powered Surface Pro 6.
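
To put those scores in proportion, the relative lead can be worked out directly. The following is a minimal sketch that uses only the three Geekbench figures quoted above; the helper name `lead_percent` is hypothetical and introduced here purely for illustration.

```python
# Geekbench multi-core scores as quoted in the text (higher is better).
scores = {
    "iPad Pro (A12Z)": 4669,
    "Surface Pro X": 2966,
    "Surface Pro 6": 3033,
}


def lead_percent(candidate, baseline):
    """Percentage by which `candidate` exceeds `baseline`."""
    return (candidate / baseline - 1.0) * 100.0


a12z = scores["iPad Pro (A12Z)"]
for name, score in scores.items():
    if name != "iPad Pro (A12Z)":
        print(f"A12Z leads {name} by {lead_percent(a12z, score):.1f}%")
```

On these figures alone, the tablet's lead over both Surface models comes out at somewhat more than half again their scores. A single benchmark is, of course, only one data point.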

Apple’s newly claimed ability to produce its own SoC for Mac, just as it does for iPhone and iPad, could save the company over time as much as 60 percent on production costs, according to its own estimates. Of course, Apple is typically tight-lipped as to how it arrives at that estimate, and how long such savings will take to be realized.

The relationship between Apple and Arm Holdings dates back to 1990, when Apple Computer UK became a founding co-stakeholder in Arm Holdings, Ltd. The other co-partners at that time were the Arm concept’s originator, Acorn Computers Ltd. (more about Acorn later) and custom semiconductor maker VLSI Technology (named for the common semiconductor manufacturing process called “very large-scale integration”). Today, Arm Holdings is a wholly-owned subsidiary of SoftBank, which announced its intent to purchase the licensor in July 2016. At the time, the acquisition deal was the largest for a Europe-based technology firm.

What will Nvidia’s role be in managing Arm as a corporate division?

On September 13, 2020, Nvidia announced a deal to acquire Arm Holdings, Ltd. from its parent company, Tokyo-based SoftBank Group Corp., in a cash and stock exchange valued at $40 billion. The deal is pending regulatory review in the European Union, United States, Japan, and China, in separate processes that may yet conclude in 2022.

In a press conference following the announcement, Nvidia CEO Jensen Huang told reporters his intention is to maintain Arm’s current business model, without influencing its current mix of partners. However, Huang also stated his intention to “add” access to Nvidia’s GPU technology to Arm’s portfolio of IP offered to partners, giving Arm licensees access to Nvidia designs. What was unclear at the time the deal was announced was what a prospective partner would want with a GPU design, besides the opportunity to compete against Nvidia.

Arm designs are created with the intention of being mixed-and-matched in various configurations, depending on the unique needs of its partners. The Arm Foundry program is a partnership between Arm Holdings and fabricators of semiconductors, such as Taiwan-based TSMC and US-based Intel, giving licensees multiple options for producing systems that incorporate Arm technology. (Prior to the September announcement, when Arm was considered for sale, rumored potential buyers included TSMC and Samsung.) By comparison, Nvidia produces exclusive GPU designs, each intended to be produced exclusively at a foundry of its choosing: originally IBM, then largely TSMC, and most recently Samsung. Nvidia’s designs are expressly intended for these particular foundries, for instance to take advantage of Samsung’s extreme ultraviolet (EUV) lithography process.

What gives Arm architecture value?

As of March 30, 2021, there have officially been nine generations of Arm processor architecture since the company’s inception. When a company manufactures its own processors, or licenses their manufacture exclusively to other foundries to be marketed in the licensee’s name only, the design is typically based on a reference implementation that is easily varied to suit performance parameters. For example, on-chip static memory caches are added or left out, additional cores are included only in premium models, and memory bandwidth may be artificially limited for budget-class processors.

In Arm’s case, its architecture is like an encyclopedia of functions. Each class of processor core brings both basic and specialist functions to the table. Each licensee, or “partner,” builds a design around the core series that provides the functions it needs. The partner’s design is then certified by Arm as abiding by its guidelines, as upholding the Arm engineers’ security principles and original design intent, and perhaps most importantly, as being capable of running software produced for that processor’s design generation. No specialization introduced by the partner should render the processor incapable of running software that Arm has already certified as executable on its designated core class.

Once certified, Arm gives its partner license to produce its design with Arm’s intellectual property (IP) included, either through its own foundries or, as is more often the case, by outsourcing production to a commercial foundry such as Foxconn or TSMC.

What is Arm’s plan for its latest architectural generation?

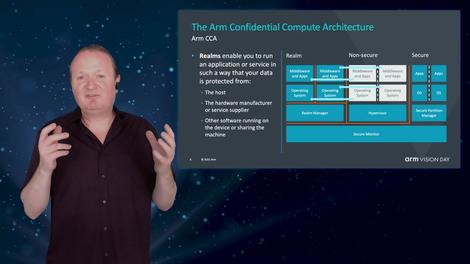

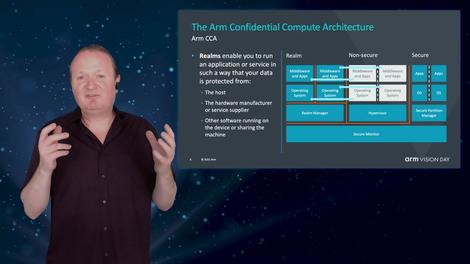

The portfolio of processor designs now called Armv9 (arm · vee · nine) introduces a concept that’s familiar to software architects, but perhaps foreign to hardware engineers: execution in isolation. Conceptually similar to the original idea of containers, Armv9’s realms are isolated execution threads that have no connection to any threads in which the operating system, or any system services, would be run.

The objective of realms is to render the most common type of processor exploit on x86 architectures, the stack overflow, functionally impossible on Armv9. The tactic for such an exploit is to use ordinary instructions to trigger an error condition, then, while the processor is cleaning up, force bytes that had been delivered as data to be executed as privileged code while the processor is incapable of checking for privilege. Theoretically, so long as a thread runs in a discrete Armv9 realm, it can’t trip any of the registers relating to the system, or to any hypervisors supporting virtual machines, even if it triggers an error condition for itself.
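
The general shape of such an exploit can be illustrated with a toy model. The sketch below is an assumption-laden simplification, not a description of Armv9 realms or of any real processor: it models a stack frame as a Python list in which a small data buffer sits directly in front of a saved return address. All names (`new_frame`, `unchecked_copy`, `checked_copy`) are hypothetical.

```python
BUFFER_SIZE = 4
RETURN_SLOT = BUFFER_SIZE  # the saved return address sits right after the buffer


def new_frame(return_address):
    """Toy stack frame: BUFFER_SIZE data slots followed by one return-address slot."""
    return [0] * BUFFER_SIZE + [return_address]


def unchecked_copy(frame, data):
    """Copies every input value with no length check, like an unsafe copy in C."""
    for i, value in enumerate(data):
        frame[i] = value  # can run past the buffer into the return slot


def checked_copy(frame, data):
    """Refuses any input that would not fit inside the buffer."""
    if len(data) > BUFFER_SIZE:
        raise ValueError("input larger than buffer")
    frame[:len(data)] = data


# An attacker supplies one value more than the buffer holds.
malicious = [1, 2, 3, 4, 0xBAD]

victim = new_frame(return_address=0x1000)
unchecked_copy(victim, malicious)
print(hex(victim[RETURN_SLOT]))  # the return address is now attacker-controlled

protected = new_frame(return_address=0x1000)
try:
    checked_copy(protected, malicious)
except ValueError:
    pass  # the oversized input was rejected
print(hex(protected[RETURN_SLOT]))  # the return address is unchanged
```

The point of the toy is only that data and control information share one region, so a write that overruns the data can redirect execution. Isolation schemes aim to keep a fault in one context from reaching the state of another.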

“Using this, for example, a driver’s ride-sharing application downloaded from a standard app store, and installed on a personal device,” explained Richard Grisenthwaite, Arm’s chief architect, “could dynamically create a realm to hold and work with its secrets, a world away from the operating system and hypervisor. This ensures the protection of the employer’s data, even if the phone’s operating system is compromised. By preventing the theft of commercially viable algorithms and data, and ensuring that mission-critical supervisory controls needed by the employer cannot be subverted, it’s no longer necessary for drivers or couriers to be provided with dedicated corporate devices.”

In other words, if the Realms initiative is successful, the isolation for applications that employers seek in purchasing separate phones can be obtained instead through a single smartphone whose processor works as though it were two (or more) isolated components.

Realms is part of a corporate-wide Arm initiative to implement so-called Confidential Compute Architecture across the board. But Realms alone could lead to a market advantage for Arm processor-driven devices, compared with competitors. While others may continue to push for the speed and performance lead, Arm could offer security-conscious customers an alternative for which they might be willing to trade off some performance gains.

What makes Arm processor architecture unique?

The “R” in the acronym “Arm” actually stands for another acronym: Reduced Instruction Set Computer (RISC). Its purpose is to leverage the efficiency of simplicity, with the goal of rendering all of the processor’s functionality on a single chip. Keeping a processor’s instruction set small means it can be coded using fewer bits, thus reducing memory consumption as well as execution cycle time. Back in 1982, students at the University of California, Berkeley, were able to produce the first working RISC architectures by judiciously selecting which functions would be used most often, and rendering only those in hardware, with the remaining functions rendered as software. Indeed, that’s what makes an SoC with a set of small cores feasible: relegating as much functionality to software as possible.

Retroactively, architectures such as x86, which adopted strategies quite opposite to RISC, were dubbed Complex Instruction Set Computers (CISC), although Intel has historically avoided using that term for itself. The power of x86 comes from being able to accomplish so much with just a single instruction. For instance, with Intel’s vector processing, it’s possible to execute 16 single-precision math operations, or 8 double-precision operations, simultaneously; here, the vector acts as a kind of “skewer,” if you will, poking through all the operands in a parallel operation and racking them up.
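
The figures of 16 and 8 follow from simple arithmetic on register width. The sketch below assumes a 512-bit vector register, as in Intel's AVX-512 (the text does not name the extension, so that is an assumption), and models a single vector instruction as one step applied to every lane. The function names are hypothetical.

```python
def simd_lanes(register_bits, element_bits):
    """Number of elements one vector register can hold side by side."""
    if register_bits % element_bits != 0:
        raise ValueError("element width must divide register width")
    return register_bits // element_bits


def vector_add(a, b):
    """Models ONE vector instruction: every lane is added in the same step."""
    if len(a) != len(b):
        raise ValueError("operands must have the same number of lanes")
    return [x + y for x, y in zip(a, b)]


REGISTER_BITS = 512  # assumption: 512-bit registers, as in AVX-512

single_lanes = simd_lanes(REGISTER_BITS, 32)  # 32-bit single precision
double_lanes = simd_lanes(REGISTER_BITS, 64)  # 64-bit double precision
print(single_lanes, double_lanes)

# One "instruction" adds all single-precision lanes at once.
a = [float(i) for i in range(single_lanes)]
b = [1.0] * single_lanes
result = vector_add(a, b)
print(result)
```

Real hardware performs the per-lane additions in parallel circuitry; the Python list comprehension only stands in for that single step.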

That makes complex math easier, at least conceptually. With a RISC system, math operations are decomposed into fundamentals. Everything that would happen automatically with a CISC architecture (for example, clearing up the active registers when a process is completed) takes a full, recorded step with RISC. However, because fewer bits (binary digits) are required to encapsulate the entire RISC instruction set, it may end up taking about as many bits, perhaps even fewer, to encode a sequence of fundamental operations for a RISC processor as it takes to encode a complex CISC instruction where all the properties and arguments are piled together in a big clump.
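
A toy interpreter makes the decomposition concrete. This is a minimal sketch with an invented instruction set: neither the mnemonics (`LOAD`, `ADD`, `STORE`, and the memory-to-memory add) nor the function names correspond to real Arm or x86 encodings. It shows one complex operation and the sequence of fundamental steps that achieves the same result.

```python
def run_risc(program, memory):
    """Executes a toy load/store program; returns the number of instructions run."""
    regs = {}
    for op, *args in program:
        if op == "LOAD":        # LOAD reg, addr: memory -> register
            reg, addr = args
            regs[reg] = memory[addr]
        elif op == "ADD":       # ADD dst, src1, src2: registers only
            dst, src1, src2 = args
            regs[dst] = regs[src1] + regs[src2]
        elif op == "STORE":     # STORE reg, addr: register -> memory
            reg, addr = args
            memory[addr] = regs[reg]
        else:
            raise ValueError(f"unknown op {op}")
    return len(program)


def run_cisc_add_mem(memory, dst, src1, src2):
    """One hypothetical memory-to-memory add: loads, adds, and stores in one step."""
    memory[dst] = memory[src1] + memory[src2]
    return 1


risc_memory = {"a": 2, "b": 3, "c": 0}
risc_steps = run_risc(
    [
        ("LOAD", "r1", "a"),
        ("LOAD", "r2", "b"),
        ("ADD", "r3", "r1", "r2"),
        ("STORE", "r3", "c"),
    ],
    risc_memory,
)

cisc_memory = {"a": 2, "b": 3, "c": 0}
cisc_steps = run_cisc_add_mem(cisc_memory, "c", "a", "b")

print(risc_steps, risc_memory["c"])
print(cisc_steps, cisc_memory["c"])
```

Both paths leave the same value in memory. The difference lies in how many instructions are issued, and in how much work each one has to describe.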

Intel can demonstrate, and has demonstrated, very complex instructions with higher performance statistics than the same processes for Arm processors, or other RISC chips. But sometimes such performance gains come at an overall performance cost for the rest of the system, making RISC architectures somewhat more efficient than CISC at general-purpose tasks.

Then there’s the issue of customization. Intel enhances its more premium CPUs with functionality by way of programs that would normally be rendered as software, but are instead embedded as microcode. These are routines designed to be quickly executed at the machine code level, and that can be referenced by that code indirectly, by name. This way, for example, a program that needs to invoke a common method for decrypting messages on a network can address very fast processor code, very close to where that code will be executed. (Conveniently, many of the routines that end up in microcode are the ones often employed in performance benchmarks.) These microcode routines are stored in read-only memory (ROM) near the x86 cores.

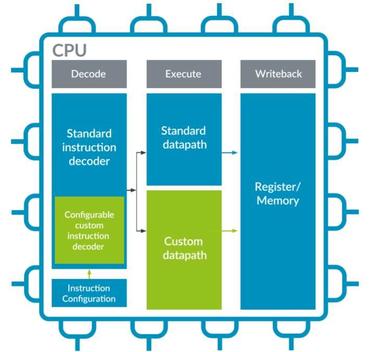

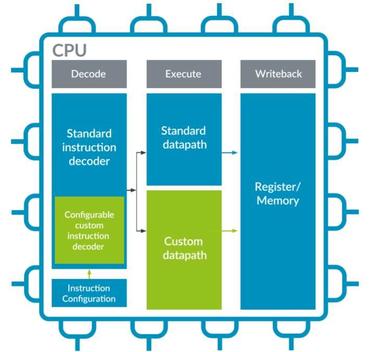

An Arm processor, by contrast, does not use digital microcode in its on-die memory. The current implementation of Arm’s alternative is a concept called custom instructions. It enables the inclusion of completely client-customizable, on-die modules, whose logic is effectively “pre-decoded.” These modules are represented in the above Arm diagram by the green boxes. All the program has to do to invoke this logic is cue up a dependent instruction for the processor core, which passes control to the custom module as though it were another arithmetic logic unit (ALU). Arm asks its partners who want to implement custom modules to present it with a configuration file, and map out the custom data path from the core to the custom ALU. Using just these items, the core can determine the dependencies and instruction interlocking mechanisms for itself.

This is how an Arm partner builds up an exclusive design for itself, using Arm cores as its starting ingredients.
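
The idea of a custom module being invoked "as though it were another ALU" can be sketched in software. The following toy model is purely illustrative: the class `ToyCore`, its methods, and the `hamming` opcode are hypothetical, and none of it reflects Arm's actual configuration format or tooling. It only shows a core dispatching built-in and partner-supplied operations through the same path.

```python
class ToyCore:
    """A toy processor core that dispatches each opcode to an execution unit."""

    def __init__(self):
        # Built-in arithmetic units available on every core.
        self.units = {
            "add": lambda a, b: a + b,
            "sub": lambda a, b: a - b,
        }

    def register_custom(self, opcode, unit):
        """Attaches a partner-defined unit under a new opcode (hypothetical API)."""
        if opcode in self.units:
            raise ValueError(f"opcode {opcode!r} is already in use")
        self.units[opcode] = unit

    def execute(self, opcode, a, b):
        """Runs one instruction; custom units are invoked like built-in ones."""
        if opcode not in self.units:
            raise ValueError(f"unknown opcode {opcode!r}")
        return self.units[opcode](a, b)


def hamming_distance(a, b):
    """Example custom logic: number of bit positions in which a and b differ."""
    return bin(a ^ b).count("1")


core = ToyCore()
core.register_custom("hamming", hamming_distance)

print(core.execute("add", 2, 3))
print(core.execute("hamming", 0b1011, 0b0010))
```

From the program's point of view, the custom opcode is issued exactly like a built-in one. In hardware, the equivalent logic would sit on the die beside the core rather than in a lookup table.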

Although Arm did not create the concept of RISC, it had a great deal to do with realizing the concept, and making it publicly available. One branch of the original Berkeley architecture to have come into its own is RISC-V, whose core specification was made open source under the Creative Commons 4.0 license. Nvidia, among others including Qualcomm, Samsung, Huawei, and Micron Technology, was a founding member of the RISC-V Foundation. When asked, Nvidia CEO Jensen Huang indicated he intends for his company to continue contributing to the RISC-V effort, maintaining that its ecosystem is naturally separate from that of Arm.

How is an Arm processor different from x86/x64 CPUs?

An x86-based PC or server is built to some common set of specifications for performance and compatibility. These days, such a PC isn’t so much designed as assembled. This keeps costs low for hardware vendors, but it also relegates most of the innovation and feature-level premiums to software, and perhaps a few nuances of implementation. The x86 device ecosystem is populated by interchangeable parts, at least insofar as architecture is concerned (granted, AMD and Intel processors have not been socket-compatible for quite some time). The Arm ecosystem is populated by some of the same components, such as memory, storage, and interfaces, but otherwise by complete systems designed and optimized for the components they utilize.

This does not necessarily give Arm devices, appliances, or servers any automatic advantage over Intel and AMD. Intel and x86 have been dominant in the computing processor space for the better part of four decades, and Arm chips have existed in one form or another for nearly all of that time, since 1985. Arm’s entire history has been about finding success in markets that x86 technology had not fully exploited or in which x86 was showing weakness, or in markets where x86 simply cannot be adapted.

For tablet computers, more recently in data center servers, and soon once again in desktop and laptop computers, the vendor of an Arm-based device or system is no longer relegated to being just an assembler of parts. This makes any direct, unit-to-unit comparison of Arm vs. x86 processor components somewhat frivolous, as a device or system based on one could easily and consistently outperform the other, based on how that system was designed, assembled, and even packaged.

How is an Arm chip different from a GPU?

The class of processor now known as GPU originated as a graphics co-processor for PCs, and is still prominently used for that purpose. However, largely due to the influence of Nvidia in the artificial intelligence space, the GPU has come to be regarded as one class of general-purpose accelerator, as well as a principal computing component in supercomputers, where it is coupled with, rather than subordinate to, CPUs. The GPU’s strong suit is its ability to execute many clusters of instructions, or threads, in parallel, greatly accelerating many academic tasks.

Arm does produce a reference design for GPUs to be used in graphics processing, called Mali. It makes this design available for licensing to makers of value-oriented, Android-based tablets and smart TVs. Low-price online electronics retailer Kogan.com is known to have resold several such models under its own brand.

In March 2021, Arm indicated its intention to continue producing GPU reference designs, unaltered from their original architecture, well after Arm’s acquisition by Nvidia is completed. Previously, in November 2020, Nvidia announced its introduction of a reference platform enabling systems architects to couple Arm-based server designs with Nvidia’s own GPU accelerators.

What are the classes of Arm processors produced today?

To stay competitive, Arm offers a variety of processor core styles, or series. Some are marketed for a variety of use cases; others are earmarked for just one or two. It’s important to note here that Intel uses the term “microarchitecture,” and sometimes by extension “architecture,” to refer to the specific stage of evolution of its processors’ features and functionality — for example, its most recently shipped generation of Xeon server processors is a microarchitecture Intel has codenamed Cascade Lake. By comparison, Arm architecture encompasses the entire history of Arm RISC processors. Each iteration of this architecture has been called a variety of things, but most recently a series. All that having been said, Arm processors’ instruction sets have evolved at their own pace, with each iteration generally referred to using the same abbreviation Intel uses for x86: ISA. And yes, here the “A” stands for “architecture.”

Intel manufactures Celeron, Core, and Xeon processors for very different classes of customers; AMD manufactures Ryzen for desktop and laptop computers, and Epyc for servers. By contrast, Arm produces designs for complete processors, which may be utilized by partners as-is, or customized by those partners for their own purposes. Here are the principal Arm Holdings, Ltd. design series, which are expected to continue being used through the 2020s:

Cortex-A has been marketed as the workhorse of the Arm family, with the “A” in this instance standing for application. As originally conceived, the client looking to build a system around Cortex-A had a particular application in mind for it, such as a digital audio amplifier, digital video processor, the microcontroller for a fire suppression system, or a sophisticated heart rate monitor. As things turned out, Cortex-A ended up being the heart of two emerging classes of device: single-board computers capable of being programmed for a variety of applications, such as cash register processing; and most importantly of all, smartphones. Importantly, Cortex-A processors include memory management units (MMU) on-chip. Decades ago, it was the inclusion of the MMU on-chip by Intel’s 80286 CPU that changed the game in its competition against Motorola chips, which at that time powered Macintosh. The principal tool in Cortex-A’s arsenal is its advanced single-instruction, multiple-data (SIMD) instruction set, code-named NEON, which executes instructions like accessing memory and processing data in parallel over a larger set of vectors. Imagine pulling into a filling station and loading up with enough fuel for 8 or 16 tanks, and you’ll get the basic idea.

Cortex-R is a class of processor with a much narrower set of use cases: mainly microcontroller applications that require real-time processing. One big case-in-point is 4G LTE and 5G modems, where time (or what a music composer might more accurately call “tempo”) is a critical factor in achieving modulation. Cortex-R’s architecture is tailored in such a way that it responds to interrupts (the requests for attention that trigger processes to run) not only quickly but predictably. This enables R to run more consistently and deterministically, and is one reason why Arm is promoting its use as a high-capacity storage controller for solid-state flash memory.

Cortex-M is a more miniaturized form factor, making it more suitable for tight spaces: for example, automotive control and braking systems, and high-definition digital cameras with image recognition. A principal use for M is as a digital signal processor (DSP), which responds to and manages analog signals for applications such as sound synthesis, voice recognition, and radar. Since 2018, Arm has taken to referring to all its Cortex series collectively under the umbrella term Cosmos.

Ethos-N is a series of processor specifically intended for applications that may involve machine learning, or some other form of neural network processing. Arm calls this series a neural processor (NPU), although it’s not quite the same class as Google’s tensor processing unit, which Google itself admits is actually a co-processor and not a stand-alone controller. Arm’s concept of the NPU includes routines used in drawing logical inferences from data, which are the building blocks of artificial intelligence used in image and pattern recognition, as well as machine learning.

Ethos-U is a slimmed-down edition of Ethos-N that is designed to work more as a co-processor, particularly in conjunction with Cortex-A.

Neoverse, launched in October 2018, represents a new and more concentrated effort by Arm to design cores that are more applicable in servers and the data centers that host them, especially the smaller varieties. The term Arm uses in marketing Neoverse is “infrastructure”: not too specific, but still targeting the emerging use cases for mini and micro data centers stationed at the “customer edge,” closer to where end users will actually consume processor power.

SecurCore is a class of processor designed by Arm exclusively for use in smart card, USB-based certification, and embedded security applications.

These are series whose designs are licensed for others to produce processors and microcontrollers. All this being said, Arm also licenses certain custom and semi-custom versions of its architecture exclusively, enabling these clients to build unique processors that are available to no other producer. These special clients include:

Apple, which has fabricated for itself a variety of Arm-based designs over the years for iPhone and iPad, and announced in June 2020 an entirely new SoC for Mac (see above).

Nvidia, which co-designed two processor series with Arm, the most recent of which is called Carmel. Known generally as a GPU producer, Nvidia leverages the Carmel design to produce its 64-bit Tegra Xavier SoC. That chip powers the company’s small-form-factor edge computing device, called Jetson AGX Xavier.

Samsung, which produces a variety of 32-bit and 64-bit Arm processors for its entire consumer electronics line, under the internal brand Exynos. Some have used a Samsung core design called Mongoose, while most others have utilized versions of Cortex-A. Notably (or perhaps notoriously) Samsung manufactures variations of its Galaxy Note, Galaxy S, and Galaxy A series smartphones with either its own Exynos SoCs (outside the US) or Qualcomm Snapdragons (US only).

Qualcomm, whose most recent Snapdragon SoC models utilize a core design called Kryo, which is a semi-custom variation of Cortex-A. Earlier Snapdragon models were based on a core design called Krait, which was still officially an Arm-based SoC even though it was a purely Qualcomm design. Analysts estimate Snapdragon 855, 855 Plus, and 865 together to comprise the nucleus of greater than half the world’s 5G smartphones. Although Qualcomm did give it a go in November 2017 with producing Arm chips for data center servers, with a product line called Centriq, it began winding down production of that line in December 2018, turning over the rights to continue its production to China-based Huaxintong Semiconductor (HXT), at the time a joint venture partner. That partnership was terminated the following April.

Is a system-on-a-chip the same as a chipset?

Technically speaking, the class of processor to which an Arm chip belongs is the application-specific integrated circuit (ASIC). Consider a hardware platform whose common element is a set of processing cores. That’s not too difficult; that describes essentially every device ever manufactured. But miniaturize these components so that they all fit on one die (on the same physical platform), interconnected using an exclusive mesh bus, and you have a system-on-a-chip.

As you know, for a computer, the application program is rendered as software. In many appliances such as Internet routers, front-door security systems, and “smart” HDTVs, the memory in which operations programs are stored is non-volatile, so we often call it firmware. In a device whose core processor is an ASIC, its main functionality is rendered onto the chip, as a permanent component. So the functionality that makes a device a “system” shares the die with the processor cores, and an Arm chip can have dozens of those.

Some analysis firms have taken to using the broad phrase applications processor, or AP, to refer to ASICs, but this has not caught on generally. In more casual use, an SoC is also called a chipset, even though in recent years, more often than not, the number of chips in the set is just one. In general use, a chipset is a set of one or more processors that collectively function as a complete system. A CPU executes the main program, while a chipset manages attached components and communicates with the user. On a PC motherboard, the chipset is separate from the CPU. On an SoC, the main processor and the system components share the same die.

The rising prospects for Arm in servers

In June 2020, a Fujitsu Arm-powered supercomputer named Fugaku, built for Japan’s RIKEN Center for Computational Science, seized the #1 slot on the semi-annual Top 500 Supercomputer list. Fugaku retained that slot in the November 2020 rankings.

Yet of all the differences between an x86 CPU and an Arm SoC, this may be the only one that matters to a data center’s facilities manager: Given any pair of samples of both classes of processor, it’s the Arm chip that is less likely to require an active cooling system. Put another way, if you open up your smartphone, chances are you won’t find a fan. Or a liquid cooling apparatus.

The buildout of 5G wireless technology is, ironically enough, expanding the buildout of fiber optic connectivity to locations near the “customer edge,” the furthest point from the network operations center. This opens up the opportunity to station edge computing devices and servers at or near such points, but preferably without the heat exchanger units that typically accompany racks of x86 servers.

This is where startups such as Bamboo Systems come in. Radical reductions in the size and power requirements for cooling systems enable server designers to devise new ways to think “out-of-the-box,” for instance by shrinking the box. A Bamboo server node is a card not much larger than the span of most folks’ hands, eight of which may be securely installed in a 1U box that typically supports 1, maybe 2, x86 servers. Bamboo aims to produce servers, the company says, that use as little as one-fifth the rack space and consume one-fourth the power of x86 racks with comparable performance levels.

Where did Arm processors come from?

An Acorn. Indeed, that’s what the “A” originally stood for.

Back in 1981, a Cambridge, UK-based company called Acorn Computers was marketing a microcomputer (what we used to call “PCs” back before IBM popularized the term) based on MOS Technology’s 6502 processor, which had powered the venerable Apple II, the Commodore 64, and the Atari 400 and 800. Although the name “Acorn” was a clever trick to appear earlier on an alphabetized list than “Apple,” its computer had been partly subsidized by the BBC, and was thus known nationwide as the BBC Micro.

Arm Holdings, Ltd. CEO Simon Segars. To his left, below the monitor, is a working BBC Micro computer, circa 1981.

All 6502-based machines used 8-bit processor architecture, and in 1981, Intel was working towards a fully compatible 16-bit architecture to replace the 8088 used in the IBM PC/XT. The following year, Intel’s 80286 would enable IBM to produce its PC AT so that MS-DOS, and all the software that ran on DOS, would not have to be changed or recompiled to run on 16-bit architecture. It was a tremendous success, and the 6502 line could not match it. Although Apple’s first Macintosh was based on the 16-bit Motorola 68000 series, that processor was an entirely different design, not compatible with the 8-bit 6502. (Eventually Apple would produce a 16-bit Apple IIGS based on the 65C816 processor, but only after several months waiting for the makers of the 65C816 to ship a working test model. The IIGS did have an “Apple II” step-down mode but technically not full compatibility.)

Acorn’s engineers wanted a way forward, and the 6502 was leaving them at a dead end. After experimenting with a surprisingly fast co-processor for the 6502 called Tube that just wasn’t fast enough, they opted to take the plunge with a full 32-bit pipeline. Following the lead of the Berkeley RISC project, in 1983, they built a simulator for a processor they called Arm1 that was so simple, it ran on the BASIC language interpreter of the BBC Micro (albeit not at speed). They would collaborate with VLSI, and would produce two years later their first Arm1 working model, with a 6 MHz clock speed. It utilized so little power that, as one project engineer tells the story, one day they noticed the chip was running without its power supply connected. It was actually being powered by leakage from the power rails leading to the I/O chip.

At this early stage, the Arm1, Arm2, and Arm3 processors were all technically CPUs, not SoCs. Yet in the same sense that today’s Intel Core processors are architectural successors of its original 4004, Cortex-A is the architectural successor to Arm1.
