At Google I/O on Tuesday, Google revealed a bevy of new features for Google Photos and the cameras on its devices. The company said more than four trillion photos and videos are currently stored in Google Photos, yet most are never viewed.

Little Patterns uses machine learning to resurface images that users may have missed. The feature translates photos into numbers and groups them by color and lighting, creating collections of pictures that go together.

Each collection of similar photos is presented to the user first, and the user can then decide whether to keep or share it.
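Google did not say how Little Patterns represents or groups photos. As a rough illustration of the idea of turning photos into numbers and grouping them by color and lighting, here is a minimal Python sketch. It assumes each photo is a list of (r, g, b) pixel tuples and uses average color plus average brightness with a simple distance threshold; the function names, the threshold value, and the sample photos are hypothetical, and this is not Google's method.

```python
from math import dist


def color_features(pixels):
    """Reduce a photo (list of (r, g, b) tuples, 0-255) to numbers:
    mean red, mean green, mean blue, and mean brightness."""
    n = len(pixels)
    r = sum(p[0] for p in pixels) / n
    g = sum(p[1] for p in pixels) / n
    b = sum(p[2] for p in pixels) / n
    brightness = (r + g + b) / 3  # crude stand-in for "lighting"
    return (r, g, b, brightness)


def group_photos(photos, threshold=40.0):
    """Greedy grouping (hypothetical): each photo joins the first group whose
    centroid lies within `threshold` (Euclidean distance) of its features;
    otherwise it starts a new group."""
    groups = []  # each entry: [centroid_tuple, list_of_photo_names]
    for name, pixels in photos.items():
        feats = color_features(pixels)
        for group in groups:
            if dist(group[0], feats) <= threshold:
                members = group[1]
                members.append(name)
                k = len(members)
                # Update the centroid as a running mean of member features.
                group[0] = tuple(c + (f - c) / k for c, f in zip(group[0], feats))
                break
        else:
            groups.append([feats, [name]])
    return [names for _, names in groups]


# Hypothetical sample data: two warm, bright beach shots and one dark night shot.
photos = {
    "beach_1": [(240, 200, 150)] * 4,
    "beach_2": [(235, 205, 145)] * 4,
    "night_1": [(20, 20, 40)] * 4,
}
print(group_photos(photos))
```

With this sample data the two beach shots end up in one group and the night shot in another. A production system would more likely use learned image embeddings and a proper clustering algorithm rather than hand-picked color averages.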

Machine learning also powers Cinematic Moments, which lets Google Photos users combine several similar shots of the same moment into a vivid moving picture.

Google will also give users more control over which photos resurface, with settings that let them block photos from certain time periods or of certain people.

The new Locked Folder feature lets users move photos into a “passcode protected space,” where “they won’t show up as you scroll through Photos or other apps on your phone.”

Locked Folder will be available on Google Pixel phones first and will then roll out to more Android devices over the rest of the year.

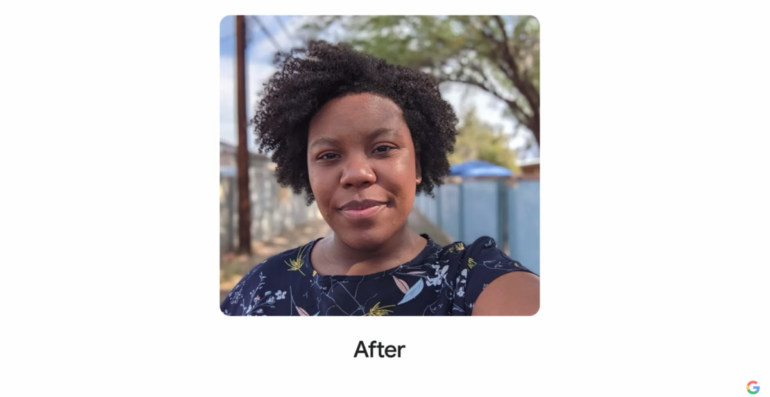

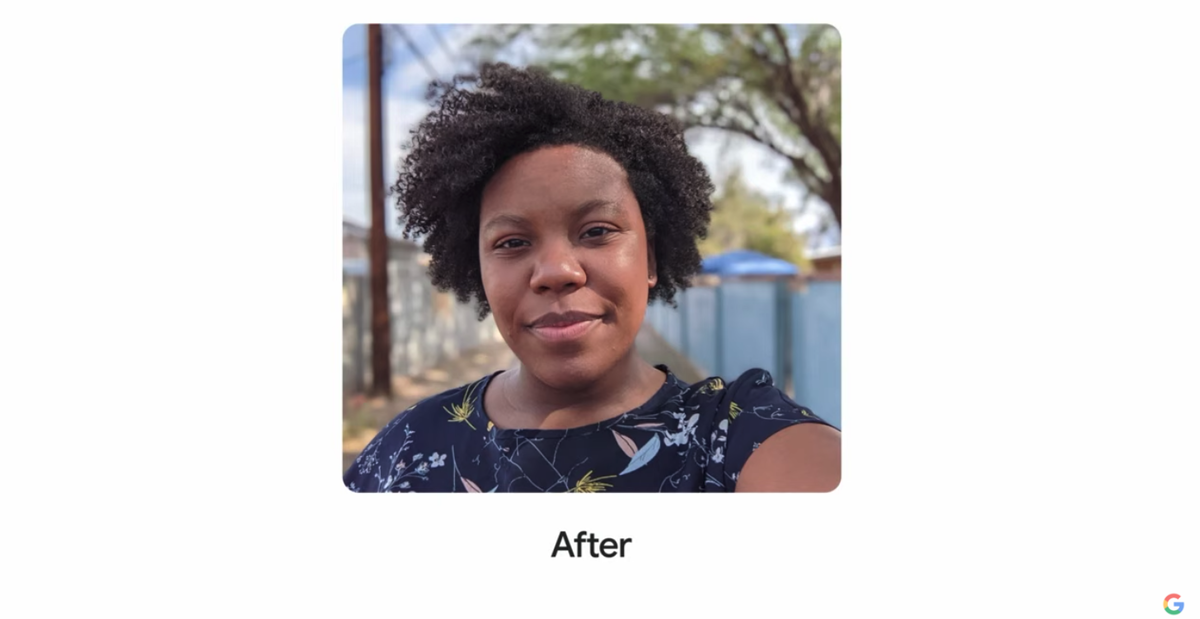

The presentation also included a lengthy section on Google’s work to make smartphone photography capture people of all skin tones more accurately. People of color have long complained that photos taken with both traditional cameras and smartphones fail to render darker skin tones accurately.

Google said it worked with industry experts to make its camera more accurate, adding thousands more photos to the data sets used to improve its auto-white balance and auto-exposure algorithms.

Google’s engineering team said it was also changing its computational photography algorithms to address the longstanding problems people of color have had with photos.

The company said its auto-white balance adjustments algorithmically reduce stray light, bringing out natural brown tones and preventing darker skin tones from being over-brightened or desaturated.

Curly and wavy hair will also be rendered more accurately in selfies, thanks to new algorithms that more precisely separate people from the background in an image, according to the Google presentation. All of these features will roll out on Google Pixel devices this fall.