Working with Intel, Google, and colleagues at Dartmouth College, Princeton researchers have completely redesigned the technology for powering high-performance computers, resulting in systems that increase power delivery 10 times beyond the current state of the art.

The demand for power ultimately comes from the demand for computing. With designers cramming more circuits into microscopic sections of microchips, the power delivered to tiny clusters of circuits is approaching levels comparable to those inside a nuclear reactor. Engineers call the amount of power delivered per unit area power density. Supercomputers’ power density is reaching nuclear levels not primarily because of computing demands but because of the tiny spaces on computer chips into which that power must be delivered.
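As a rough illustration of that definition, power density is simply power divided by the area it is delivered to. The symbols below are our own shorthand, not notation from the paper. Delivering ten times the power to the same footprint therefore means ten times the power density:

```latex
\rho_P = \frac{P}{A} \quad [\mathrm{W/mm^2}], \qquad
\frac{10\,P}{A} = 10\,\rho_P
```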

This demand for highly controlled power has become a limiting factor for chip designers and a major challenge for power electronics engineers. Sending large amounts of power to tiny areas generates heat, which not only wastes energy but can also destroy computer components.

“It has to be extremely efficient with very low noise, precisely controlled to a very tiny area,” said Minjie Chen, assistant professor of electrical and computer engineering and the leader of the Princeton research team. “If the efficiency is not high enough, you will overheat. If the components overheat, you will not be able to deliver the power.”
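Chen’s point about efficiency can be made concrete with a back-of-the-envelope calculation. The sketch below assumes a regulator with conversion efficiency η that must deliver an output power P_out. Whatever it draws but does not deliver becomes heat, P_loss = P_out(1/η − 1). The function names and the 100 W through a 100 mm² footprint figures are hypothetical, chosen only for illustration, and do not come from the Princeton paper.

```python
def input_power(p_out_w: float, efficiency: float) -> float:
    """Power drawn from the supply to deliver p_out_w at the given efficiency (0 < efficiency <= 1)."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    return p_out_w / efficiency


def heat_loss(p_out_w: float, efficiency: float) -> float:
    """Power dissipated as heat inside the regulator: P_in - P_out."""
    return input_power(p_out_w, efficiency) - p_out_w


def loss_density(p_out_w: float, efficiency: float, area_mm2: float) -> float:
    """Heat dissipated per square millimetre of regulator footprint, in W/mm^2."""
    return heat_loss(p_out_w, efficiency) / area_mm2


if __name__ == "__main__":
    # Hypothetical example: 100 W delivered through a 100 mm^2 footprint.
    for eta in (0.80, 0.90, 0.95):
        loss = heat_loss(100.0, eta)
        print(f"efficiency {eta:.0%}: {loss:.1f} W of heat, "
              f"{loss_density(100.0, eta, 100.0):.3f} W/mm^2")
```

At 80% efficiency, 25 W of the 125 W drawn turns into heat; at 95%, only about 5.3 W does. Because the heat must leave through the same small footprint, shrinking a regulator raises its heat per square millimetre unless its efficiency rises as well.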

Among other areas, Chen said, his research team has focused on developing “smaller, smarter and more efficient power electronics for emerging and important applications.” Although new generations of chips and circuitry draw the most attention, power delivery is an increasingly critical element of computer system design. As Chen points out, better power delivery is required for smaller smartphones and other devices, more efficient data centers and advanced processors that support increasingly sophisticated artificial intelligence systems. The group is also looking beyond computer systems to programmable designs for solar power arrays, the smart grid and other critical infrastructure.

In the most recent project, presented in a paper in the IEEE Transactions on Power Electronics, the researchers demonstrated a strategy for meeting the industry’s goals in a way that can apply to small systems or scale to meet the needs of massive data centers.

To meet the demands of new computer systems, the team had to overcome three challenges: deliver power to smaller areas so that microprocessors can sit ever closer together; operate with high efficiency, both to cut costs and to prevent overheating; and switch power among components at blinding speed to match the demands of microprocessors.

“Google and Intel originally asked, ‘How do you deliver 10 times more power to a millimeter square of silicon without sacrificing speed or efficiency?’” Chen said.

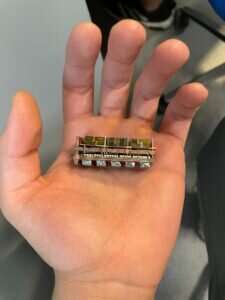

The new system provides the efficiency, power and flexibility demanded by modern high-performance computers. © Princeton University

The research team met all three challenges by rethinking everything from the power delivery components to their architecture and control. They used capacitors, rather than the traditional magnetic components, to process power, and they stacked the system vertically instead of using the traditional horizontal layout. Both features introduced significant design challenges, but once the researchers solved them, they were able to deliver a superior system.

“We have tested the efficiency up to full power, and we have tested the dynamics,” Chen said. “It is a fully functioning system 10 times smaller than the best off-the-shelf.”

The article, “Vertical Stacked LEGO-PoL CPU Voltage Regulator,” appeared online Dec. 14, 2021.

More information:

Jaeil Baek et al, Vertical Stacked LEGO-PoL CPU Voltage Regulator, IEEE Transactions on Power Electronics (2021). DOI: 10.1109/TPEL.2021.3135386

Provided by

Princeton University

Citation:

Vertical thinking broke the bottleneck in powering high-performance computers (2022, May 2)