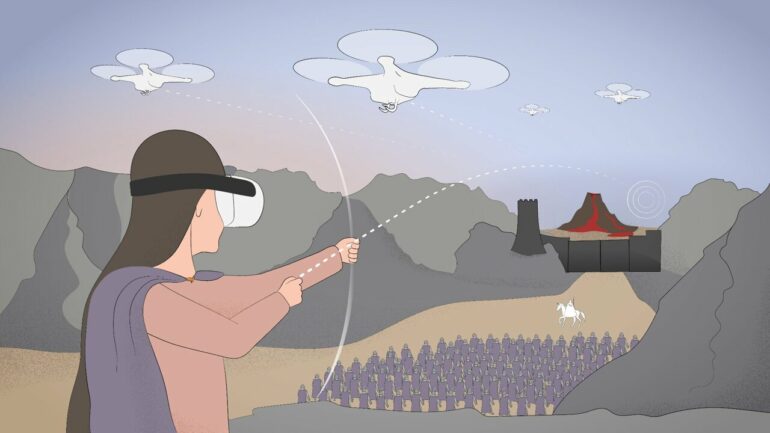

Skoltech researchers have developed an effective, and fairly dramatic, way to position a swarm of rescue or research drones. Wearing a virtual reality helmet and a tactile interface, the operator imitates shooting a bow, guiding each drone toward its intended position with a series of shots.

The technique is enhanced by deep reinforcement learning, which prevents the drones from colliding with one another. Presented at the 21st IEEE International Symposium on Mixed and Augmented Reality, the study is the first Russian project to be selected for this high-profile conference since the conference was launched more than 20 years ago.

“Steve Jobs radically changed our idea of an intuitive interaction with the digital world when he championed touch screens and gestures. The goal of our research is even more ambitious in that it seeks to address the challenge of how to enable a user with no drone piloting experience to deploy an entire swarm within mere seconds,” commented the study’s principal investigator, Skoltech Associate Professor Dzmitry Tsetserukou, who heads the Intelligent Space Robotics Laboratory.

“Right now, there aren’t that many interfaces for deploying a swarm of drones,” the study’s lead author, Skoltech MSc graduate Ekaterina Dorzhieva, commented. “A joystick is convenient for controlling one drone, but once you have a whole swarm, you either need multiple operators or very complex software with code that explicitly accounts for a lot of stuff in a nonintuitive way. We offer an alternative to this.”

The alternative, presented by researchers from Skoltech's Engineering Center, is a tactile interface proposed by Skoltech Ph.D. student Miguel Altamirano Cabrera and inspired by LinkTouch, an earlier design Tsetserukou created in Japan.

The operator wears a virtual reality helmet, a pair of gloves with markers, and a tactile interface. A motion capture system of several infrared cameras is set up around the “shooter” to track the positions of the gloves and the drones. The tactile interface lets the wearer feel the tension of the virtual bowstring, which depends on how far apart the gloves are, and use this feedback to adjust how far away the drone will be deployed.
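The article does not say how glove separation is converted into bowstring tension. The sketch below assumes a simple linear spring (Hooke's law): the distance between the two glove markers beyond a slack length is treated as the draw, clamped to a maximum, and scaled both to a tension value and to a normalized 0 to 1 command for the tactile actuator. All constants and function names (REST_LENGTH_M, MAX_DRAW_M, STIFFNESS_N_PER_M, haptic_intensity, and so on) are hypothetical.

```python
import math

# Hypothetical parameters; the article does not give the real values.
REST_LENGTH_M = 0.10       # hand separation at which the virtual string is slack
MAX_DRAW_M = 0.60          # hand separation at which the draw saturates
STIFFNESS_N_PER_M = 200.0  # assumed linear spring constant


def hand_separation(left, right):
    """Euclidean distance between two glove marker positions (x, y, z) in metres."""
    return math.dist(left, right)


def draw_length(left, right):
    """How far the string is pulled past its rest length, clamped to the allowed range."""
    d = hand_separation(left, right) - REST_LENGTH_M
    return min(max(d, 0.0), MAX_DRAW_M - REST_LENGTH_M)


def string_tension(left, right):
    """Hooke's-law tension in newtons for the current draw."""
    return STIFFNESS_N_PER_M * draw_length(left, right)


def haptic_intensity(left, right):
    """Normalized 0..1 command for the tactile actuator (hypothetical mapping)."""
    return draw_length(left, right) / (MAX_DRAW_M - REST_LENGTH_M)
```

For example, gloves 0.35 m apart give a draw of about 0.25 m, a tension of about 50 N, and a haptic intensity of about 0.5. A real device might use a nonlinear profile; the linear mapping is chosen here only for clarity.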

Meanwhile, the VR helmet displays the drone's predicted ballistic trajectory in real time. When satisfied with the trajectory, the operator unclenches their fist. The motion capture cameras register this gesture, lock the trajectory, and send the drone along it.
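A ballistic preview of this kind can be sketched with drag-free projectile motion. The code below is a minimal illustration under that assumption (no air resistance, z axis pointing up, flat ground at ground_z); the article does not describe how the real system derives the launch point and velocity from the gloves. ballistic_points samples the parabola for drawing in the headset, landing_point solves for where it meets the ground, and the hypothetical LaunchController freezes the last previewed path on the frame where a clenched fist opens.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def ballistic_points(origin, velocity, dt=0.05, ground_z=0.0, max_steps=10_000):
    """Sample a drag-free projectile path from origin until it drops below ground_z.

    origin and velocity are (x, y, z) tuples with z pointing up. The returned
    list of points could be drawn as a polyline in the headset.
    """
    x0, y0, z0 = origin
    vx, vy, vz = velocity
    points = []
    for i in range(max_steps):
        t = i * dt
        z = z0 + vz * t - 0.5 * G * t * t
        if i > 0 and z < ground_z:
            break  # the path has reached the ground
        points.append((x0 + vx * t, y0 + vy * t, z))
    return points


def landing_point(origin, velocity, ground_z=0.0):
    """Closed-form point where the parabola descends to ground_z.

    Assumes the launch point is at or above ground_z.
    """
    x0, y0, z0 = origin
    vx, vy, vz = velocity
    # Solve z0 + vz*t - G*t^2/2 = ground_z and keep the later (positive) root.
    t = (vz + math.sqrt(vz * vz + 2 * G * (z0 - ground_z))) / G
    return (x0 + vx * t, y0 + vy * t, ground_z)


class LaunchController:
    """Hypothetical release logic: preview while the fist is clenched, lock on release."""

    def __init__(self):
        self.was_clenched = False
        self.preview = []

    def update(self, fist_clenched, origin, velocity):
        """Call once per tracking frame; returns the locked path on release, else None."""
        locked = None
        if fist_clenched:
            # Recompute the preview every frame while the bow is drawn.
            self.preview = ballistic_points(origin, velocity)
        elif self.was_clenched:
            # Clenched-to-open transition: freeze the last previewed path.
            locked = self.preview
        self.was_clenched = fist_clenched
        return locked
```

Locking on the clenched-to-open transition, rather than on every open-hand frame, means each release sends exactly one path.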

Once the destination is reached, some conventional form of control has to take over, guiding the drone on its mission, which might involve a rescue operation, natural resource management, forest fire detection, vegetation index-based crop monitoring, pollution detection, infrastructure inspection or maintenance.

“The benefit of using an arrowlike ballistic trajectory is that it feels natural for a person, and that way a human operator can quickly find a way to deploy drones while avoiding obstacles. It’s a fairly natural task for humans,” Dorzhieva said. “You could use software to set the coordinates for drones to travel to, but then they would have to figure out the path that avoids the obstacles on their own, and that means each machine must be equipped with a camera.”

There are two more twists to this. First, because the system uses virtual reality, the operator does not have to be anywhere near the site where the swarm is being deployed. Whatever harsh conditions the drones are built to withstand, such as raging fire, radioactive contamination, or freezing temperatures in a remote location, the VR helmet could simulate the operator's presence on site and let them position the robots from afar. And once virtual reality and bow shooting are combined, there is clear potential for the entertainment and video game industry.

Second, the new method is bolstered by reinforcement learning, which enables the drones to anticipate possible collisions with one another and adjust their trajectories accordingly.
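The article does not describe how the reinforcement learning agent is trained. As an illustration only, the function below shows one common ingredient of RL-based multi-drone collision avoidance: a per-step reward that pays for progress toward the goal and penalizes coming close to other drones. The thresholds, the penalty size, and the name step_reward are assumptions, not the paper's actual reward.

```python
import math

# Hypothetical constants; the article does not describe the paper's reward.
SAFE_DIST_M = 0.5         # separation below which a proximity penalty applies
COLLISION_DIST_M = 0.2    # separation treated as a collision
COLLISION_PENALTY = -10.0


def step_reward(pos, prev_pos, goal, others):
    """Reward for one drone at one time step.

    pos, prev_pos, goal: (x, y, z) tuples; others: positions of the other drones.
    Rewards progress toward the goal and penalizes getting close to other drones.
    """
    # Positive when this step brought the drone closer to its goal.
    reward = math.dist(prev_pos, goal) - math.dist(pos, goal)
    for other in others:
        d = math.dist(pos, other)
        if d < COLLISION_DIST_M:
            reward += COLLISION_PENALTY
        elif d < SAFE_DIST_M:
            # Linear penalty from 0 at SAFE_DIST_M up to 1 at COLLISION_DIST_M.
            reward -= (SAFE_DIST_M - d) / (SAFE_DIST_M - COLLISION_DIST_M)
    return reward
```

A policy trained to maximize a reward of this shape is pushed to trade progress toward its target against keeping clear of its neighbours, which is the behaviour the researchers describe.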

The research is currently published on the preprint server arXiv.

More information:

Ekaterina Dorzhieva et al, DroneARchery: Human-Drone Interaction through Augmented Reality with Haptic Feedback and Multi-UAV Collision Avoidance Driven by Deep Reinforcement Learning, arXiv (2022). DOI: 10.48550/arxiv.2210.07730. arxiv.org/abs/2210.07730

Provided by Skolkovo Institute of Science and Technology

Citation: Virtual bow deploys drone swarm in a series of shots (2022, October 31)