Exploring a new way to teach robots, Princeton researchers have found that human-language descriptions of tools can help a simulated robotic arm learn more quickly to lift and use a variety of tools.

The results build on evidence that providing richer information during artificial intelligence (AI) training can make autonomous robots more adaptive to new situations, improving their safety and effectiveness.

Adding descriptions of a tool’s form and function to the robot’s training process improved its ability to manipulate newly encountered tools that were not in the original training set. A team of mechanical engineers and computer scientists presented the new method, Accelerated Learning of Tool Manipulation with LAnguage, or ATLA, at the Conference on Robot Learning on Dec. 14.

Robotic arms have great potential to help with repetitive or challenging tasks, but training robots to manipulate tools effectively is difficult: Tools have a wide variety of shapes, and a robot’s dexterity and vision are no match for a human’s.

“Extra information in the form of language can help a robot learn to use the tools more quickly,” said study co-author Anirudha Majumdar, an assistant professor of mechanical and aerospace engineering at Princeton who leads the Intelligent Robot Motion Lab.

The team obtained tool descriptions by querying GPT-3, a large language model released by OpenAI in 2020 that uses a form of AI called deep learning to generate text in response to a prompt. After experimenting with various prompts, they settled on using “Describe the [feature] of [tool] in a detailed and scientific response,” where the feature was the shape or purpose of the tool.
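As an illustration only, the Python sketch below fills in the prompt template quoted above for the two features the article mentions, shape and purpose. The function names, and the choice to pass tool names with an article such as "a crowbar", are hypothetical assumptions; the call to GPT-3 itself is deliberately left out, because the article does not say which interface or settings the team used.

```python
from typing import Dict, List, Sequence

# Template quoted in the article; [feature] and [tool] become format fields.
TEMPLATE = "Describe the {feature} of {tool} in a detailed and scientific response"

# The article says the feature was either the shape or the purpose of the tool.
FEATURES = ("shape", "purpose")


def build_prompts(tool: str) -> List[str]:
    """Return one prompt per feature for a single tool (hypothetical helper)."""
    return [TEMPLATE.format(feature=feature, tool=tool) for feature in FEATURES]


def build_all_prompts(tools: Sequence[str]) -> Dict[str, List[str]]:
    """Map each tool name to its list of prompts (hypothetical helper)."""
    return {tool: build_prompts(tool) for tool in tools}


if __name__ == "__main__":
    # Print the prompts that would be sent to the language model.
    for prompt in build_prompts("a crowbar"):
        print(prompt)
```

Keeping the template and feature list as constants makes it easy to try the kind of prompt variations the researchers describe experimenting with before settling on this wording.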

“Because these language models have been trained on the internet, in some sense you can think of this as a different way of retrieving that information,” more efficiently and comprehensively than using crowdsourcing or scraping specific websites for tool descriptions, said Karthik Narasimhan, an assistant professor of computer science and co-author of the study. Narasimhan is a lead faculty member in Princeton’s natural language processing (NLP) group and contributed to the original GPT language model as a visiting research scientist at OpenAI.

This work is the first collaboration between Narasimhan’s and Majumdar’s research groups. Majumdar focuses on developing AI-based policies to help robots—including flying and walking robots—generalize their functions to new settings, and he was curious about the potential of recent “massive progress in natural language processing” to benefit robot learning, he said.

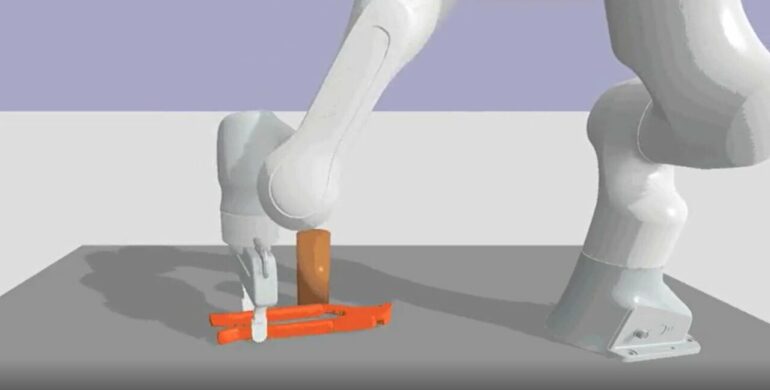

For their simulated robot learning experiments, the team selected a training set of 27 tools, ranging from an axe to a squeegee. They gave the robotic arm four different tasks: push the tool, lift the tool, use it to sweep a cylinder along a table, or hammer a peg into a hole. The researchers developed a suite of policies using machine learning training approaches with and without language information, and then compared the policies’ performance on a separate test set of nine tools with paired descriptions.

This approach is known as meta-learning, since the robot improves its ability to learn with each successive task. It’s not only learning to use each tool, but also “trying to learn to understand the descriptions of each of these hundred different tools, so when it sees the 101st tool it’s faster in learning to use the new tool,” said Narasimhan. “We’re doing two things: We’re teaching the robot how to use the tools, but we’re also teaching it English.”

The researchers measured the robot’s success at pushing, lifting, sweeping and hammering with the nine test tools, comparing policies that used language information during training with those that did not. In most cases, the language information gave the robot a significant advantage in using new tools.

One task that showed notable differences between the policies was using a crowbar to sweep a cylinder, or bottle, along a table, said Allen Z. Ren, a Ph.D. student in Majumdar’s group and lead author of the research paper.

“With the language training, it learns to grasp at the long end of the crowbar and use the curved surface to better constrain the movement of the bottle,” said Ren. “Without the language, it grasped the crowbar close to the curved surface and it was harder to control.”

The research is part of a larger project in Majumdar’s research group aimed at improving robots’ ability to function in novel situations that differ from their training environments.

“The broad goal is to get robotic systems, specifically ones that are trained using machine learning, to generalize to new environments,” said Majumdar. Other work by his group has addressed failure prediction for vision-based robot control, and used an “adversarial environment generation” approach to help robot policies function better in conditions outside their initial training.

The paper, “Leveraging language for accelerated learning of tool manipulation,” was presented Dec. 14 at the Conference on Robot Learning. Besides Majumdar, Narasimhan and Ren, co-authors include recent Princeton graduate Bharat Govil and Tsung-Yen Yang, who completed a Ph.D. in electrical engineering at Princeton this year and is now a machine learning scientist at Meta Platforms Inc.

More information:

Allen Z. Ren et al., Leveraging Language for Accelerated Learning of Tool Manipulation (2022)

Provided by

Princeton University

Citation:

Words prove their worth as teaching tools for robots (2022, December 21)