As of April 2022, there have been nearly 1 million confirmed COVID-19 deaths in the U.S. For most people, visualizing what a million of anything looks like is an impossible task. The human brain just isn’t built to comprehend such large numbers.

We are two neuroscientists who study the processes of learning and numerical cognition – how people use and understand numbers. While there is still much to discover about the mathematical abilities of the human brain, one thing is certain: People are terrible at processing large numbers.

During the peak of the omicron wave, more than 3,000 U.S. residents died per day – a higher death rate than in any other large high-income country. A rate of 3,000 deaths per day is already an incomprehensible number; 1 million is unfathomably larger. Modern neuroscience research can shed light on the limits of how the brain deals with large numbers – limits that have likely factored into how the American public perceives and responds to COVID-related deaths.

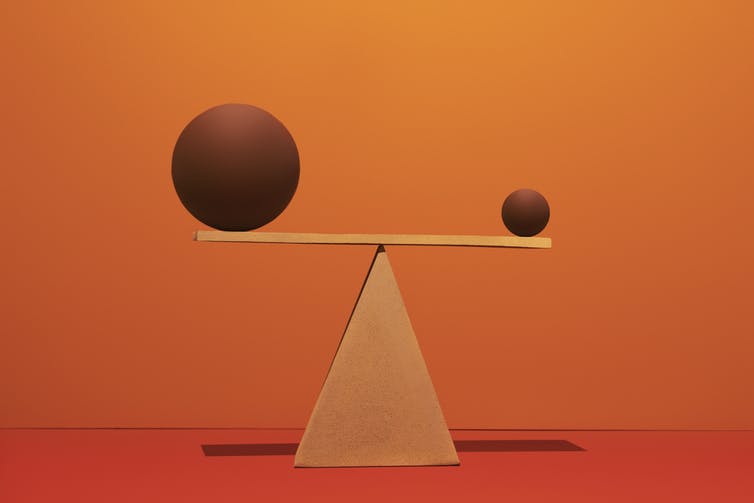

Brains are much better at thinking of large numbers in terms of what is bigger or smaller than in assessing absolute values.

Daniel Grizelj/Digital Vision via Getty Images

The brain is built to compare, not to count

Humans process numbers using networks of interconnected neurons throughout the brain. Many of these pathways involve the parietal cortex – a region of the brain located just above the ears. It is responsible for processing many different kinds of quantities or magnitudes, including time, speed and distance, and it provides a foundation for other numerical abilities.

While the written symbols and spoken words that humans use to represent numbers are a cultural invention, understanding quantities themselves is not. Humans – as well as many animals, including fish, birds and monkeys – show rudimentary numerical abilities shortly after birth. Infants, adults and even rats find it easier to distinguish between relatively small numbers than between larger ones. The difference between 2 and 5 is much easier to visualize than the difference between 62 and 65, even though both pairs differ by exactly 3.

The brain is optimized to recognize small quantities because smaller numbers are what people tend to interact with most on a daily basis. Research has shown that when presented with different numbers of dots, both children and adults can intuitively and rapidly recognize quantities of up to three or four. Beyond that, people have to count, and as the numbers get higher, intuitive understanding gives way to abstract concepts of large, individual numbers.

This bias toward smaller numbers even plays out day to day in the grocery store. When researchers asked shoppers in a checkout line to estimate the total cost of their purchase, people reliably named a lower price than the actual amount. And this distortion increased with price – the more expensive the groceries were, the larger the…