Bandwidth is a simple number that people think they understand. The bigger the number, the faster the storage.

Nope.

Leaving aside that many consumer bandwidth numbers are bogus – link speed is not storage speed – actual performance is rarely dependent on pure bandwidth.

Bandwidth is a convenient metric, easily measured, but not the critical factor in storage performance. What most storage performance tools measure is the bandwidth with large requests. Why? Because small requests don’t use much bandwidth.

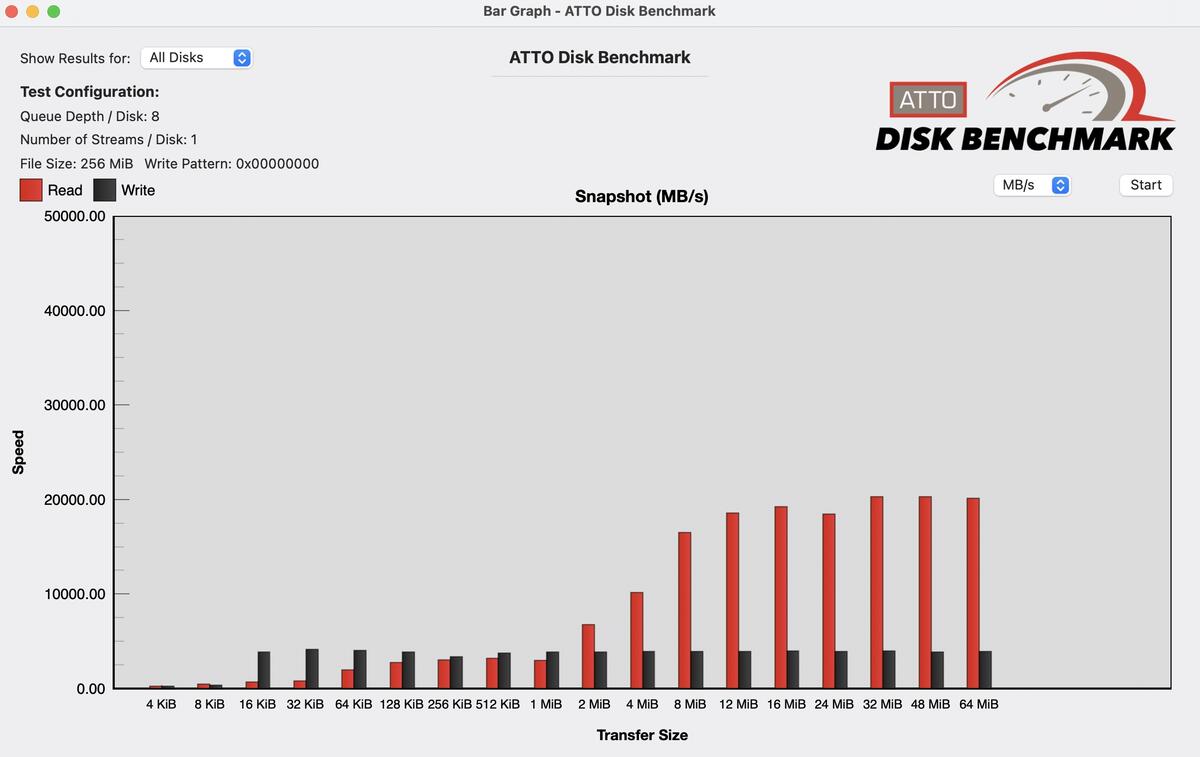

The following graphic, produced with a tool from ATTO storage, illustrates this. On the X axis is the bandwidth and on the Y axis is the access size. The correlation is obvious: small requests don’t use much bandwidth.

Drive benchmark on a fast internal PCIe SSD.

Robin Harris

But why doesn’t the CPU issue more I/O requests to soak up that unused bandwidth? Because every I/O takes time and resources – context switches, memory management and metadata updates, and more – to complete.

There are a lot of small requests even if you’re editing huge video files. That’s because behind the scenes, the operating system, working with the CPU’s Memory Management Unit (MMU), is constantly paging out least-recently-used pages and paging in whatever data or program segments your workload requires.

These pages are fixed in size: 4KB on Windows and 16KB on Macs running macOS on Apple silicon. If you have a lot of physical memory, there’s less paging right after a reboot, but over time, as you run more programs and open more tabs, physical memory fills up and the swapping starts.
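If you want to check the page size on your own machine, Python’s standard `mmap` module exposes it as `mmap.PAGESIZE`. A minimal sketch follows; the helper name `page_size_bytes` is just for illustration. On a typical x86-64 Windows or Linux machine it should report 4KB, and on an Apple silicon Mac, 16KB.

```python
import mmap


def page_size_bytes() -> int:
    # mmap.PAGESIZE is the operating system's memory page size, in bytes
    return mmap.PAGESIZE


if __name__ == "__main__":
    size = page_size_bytes()
    print(f"Page size: {size // 1024} KB ({size} bytes)")
```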

Thus much of the I/O traffic to storage isn’t under your direct control. Nor does it require much bandwidth.

What’s really important?

Latency: how quickly a storage device services a request.

There’s an obvious reason for latency’s importance, and a subtler, but nearly as important, one.

Let’s start with the obvious.

Say you had a storage device with infinite bandwidth, but each access took 10 milliseconds and accesses were serviced one at a time. That device could handle 100 accesses per second (1000ms/10ms = 100). If the average access was 16KB, you would have a total bandwidth of 1,600 KB per second (about 13 Mbits/sec), a small fraction of the nominal 480 Mbits/sec USB 2.0 offers, while wasting an almost infinite amount of bandwidth.
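Here is the same arithmetic in a few lines of Python. This is a sketch under the example’s own assumptions: unlimited link bandwidth, and a device that completes one access at a time. The function name is hypothetical, and KB is treated as 1,000 bytes when converting to megabits.

```python
def serial_throughput_kb_per_s(latency_ms: float, access_kb: float) -> float:
    """Throughput of a device that completes one access at a time.

    With unlimited link bandwidth, each access costs only its latency,
    so accesses per second = 1000 / latency_ms.
    """
    accesses_per_s = 1000.0 / latency_ms
    return accesses_per_s * access_kb


kb_per_s = serial_throughput_kb_per_s(latency_ms=10, access_kb=16)
mbit_per_s = kb_per_s * 1000 * 8 / 1e6  # decimal KB -> bits -> megabits

print(f"{kb_per_s:,.0f} KB/s = {mbit_per_s:.1f} Mbit/s")  # 1,600 KB/s = 12.8 Mbit/s
print(f"{mbit_per_s / 480:.1%} of USB 2.0's nominal 480 Mbit/s")  # 2.7% of ...
```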

A 10 ms access is about the average access time of a hard drive, which is why storage vendors packaged up hundreds, even thousands, of HDDs to maximize accesses per second. But those were the bad old days.

Today’s high-performance SSDs have latencies measured in microseconds rather than milliseconds, meaning they can handle as many I/Os as a million-dollar storage array did 15 years ago. Only with an enormous, sustained stream of 16KB accesses would the bandwidth of the connection become the limit.
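When does the link finally matter? A one-at-a-time stream of accesses saturates a link only when the device answers faster than the link can carry a single access. The sketch below computes that break-even latency. It assumes no protocol overhead and one outstanding request, so treat the result as a rough bound: real links carry overhead, and real workloads often keep several requests in flight. The function name is hypothetical.

```python
def breakeven_latency_us(access_bytes: int, link_bits_per_s: float) -> float:
    """Latency below which a one-at-a-time stream of accesses saturates the link.

    Time to move one access over the link = access_bytes * 8 / link rate.
    If the device responds faster than that, the link becomes the bottleneck.
    """
    return access_bytes * 8 / link_bits_per_s * 1e6


# 16 KiB accesses over USB 2.0's nominal 480 Mbit/s, ignoring protocol overhead
print(round(breakeven_latency_us(16 * 1024, 480e6)))  # 273
# The same accesses over a hypothetical 10 Gbit/s link
print(round(breakeven_latency_us(16 * 1024, 10e9), 1))  # 13.1
```

In other words, on USB 2.0 a device only needs to answer in under roughly 273 microseconds before the link, not the device, becomes the limit. On a much faster link the device has to respond in about 13 microseconds.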

The subtle reason for latency’s importance is more complicated. Let’s say you have 100 storage devices with a 10ms access time, and your CPU is issuing 10,000 I/Os per second (IOPS).

Your 100 storage devices can handle 10,000 IOPS, so no problem, right? Wrong. Since each I/O takes 10 ms, your CPU is juggling 100 uncompleted I/Os. Drop the latency to 1ms and the CPU has only 10 uncompleted I/Os.
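The 100-versus-10 arithmetic is an instance of Little’s Law: in a stable system, the average number of requests in flight equals the request rate times the average time each request spends in the system. A minimal sketch reproducing the example’s numbers follows (the helper name is illustrative). It prints 100 uncompleted I/Os at 10 ms latency and 10 at 1 ms.

```python
def outstanding_ios(iops: float, latency_s: float) -> float:
    """Little's Law: average requests in flight = arrival rate * time in system."""
    return iops * latency_s


for latency_ms in (10, 1):
    n = outstanding_ios(10_000, latency_ms / 1000)
    print(f"{latency_ms} ms latency -> {n:.0f} uncompleted I/Os")
```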

If there’s an I/O burst, the number of uncompleted I/Os can grow until the page map exceeds available on-board memory, forcing the system to start paging. Since paging is already slow, that’s a Bad Thing.

The Take

The problem with latency as a performance metric is twofold: it’s not easy to measure, and few understand its importance. But people have been buying and using lower-latency interfaces for decades, probably without knowing why they were better than cheaper, nominally as fast, interfaces.
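To see why latency is harder to pin down than bandwidth, here is a rough sketch of timing individual small random reads from a file. The function names are hypothetical, and several caveats apply. Most reads will be served from the operating system’s page cache in RAM unless caching is bypassed with platform-specific mechanisms such as `O_DIRECT` on Linux, which this sketch does not attempt. Python’s own overhead also adds to every sample. Purpose-built tools such as fio handle these details properly.

```python
import os
import random
import statistics
import tempfile
import time


def read_latencies(path, block_size=4096, samples=1000, seed=0):
    """Time individual random reads of block_size bytes from path.

    Returns per-read latencies in seconds. Without cache bypass, this
    mostly measures page-cache latency, not the drive itself.
    """
    blocks = os.path.getsize(path) // block_size
    if blocks == 0:
        raise ValueError("file is smaller than one block")
    rng = random.Random(seed)
    latencies = []
    # buffering=0: no Python-level buffer, so each read() goes to the OS
    with open(path, "rb", buffering=0) as f:
        for _ in range(samples):
            f.seek(rng.randrange(blocks) * block_size)
            start = time.perf_counter()
            f.read(block_size)
            latencies.append(time.perf_counter() - start)
    return latencies


def summarize(latencies):
    """Median and 99th-percentile latency, in microseconds."""
    p99 = statistics.quantiles(latencies, n=100)[98]
    return statistics.median(latencies) * 1e6, p99 * 1e6


if __name__ == "__main__":
    # Demo on a scratch 8 MiB file; point it at a large file on the drive under test instead.
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(os.urandom(8 * 1024 * 1024))
        path = tmp.name
    try:
        median_us, p99_us = summarize(read_latencies(path))
        print(f"median {median_us:.1f} us, p99 {p99_us:.1f} us")
    finally:
        os.remove(path)
```

Reporting a high percentile alongside the median matters here. The occasional slow request, not the average, is what makes a system feel sluggish.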

For example, FireWire’s advantage over USB 2 was latency, even though the bandwidth numbers were roughly comparable. USB 2 (480 Mbits/sec nominal, versus 400 Mbits/sec for FireWire 400) used a polling access protocol with higher latency. A FireWire drive would always seem snappier than the same drive over USB because of its lower-latency protocol. You could boot a Mac off a USB 2 drive, but running apps was dead slow.

Similarly, Thunderbolt has always been optimized for latency, which is one reason it costs more.

Comments welcome. This is prep for another piece where I look at USB 3.0 and Thunderbolt drives. Stay tuned.