December 16, 2020


Over the past decade or so, researchers have developed a variety of computational models based on artificial neural networks (ANNs). While many of these models have been found to perform well on specific tasks, they are not always able to identify iterative, sequential or algorithmic strategies that can be applied to new problems.

Past studies have found that adding an external memory component can improve a neural network's ability to acquire these strategies. Even with an external memory, however, these networks can remain prone to errors, are sensitive to changes in the data presented to them and require large amounts of training data to perform well.
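For readers unfamiliar with external memories: in earlier memory-augmented models such as the Neural Turing Machine, a network typically reads from memory by comparing a "key" vector against every memory row and taking a similarity-weighted average of the rows. The sketch below illustrates that generic content-based read in plain Python. It is not the mechanism used by the architecture described in this article, and the function names and the `sharpness` parameter are illustrative assumptions.

```python
import math
from typing import List

Vector = List[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def content_read(memory: List[Vector], key: Vector, sharpness: float) -> Vector:
    """Soft read: weight every memory row by its (sharpened) similarity to the key,
    then return the weighted sum of the rows."""
    weights = softmax([sharpness * cosine_similarity(row, key) for row in memory])
    width = len(memory[0])
    return [sum(w * row[j] for w, row in zip(weights, memory)) for j in range(width)]


# Usage: a key close to the second row retrieves (almost exactly) that row.
memory = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
result = content_read(memory, key=[0.0, 0.9, 0.1], sharpness=50.0)
```

Because every step is a smooth function of the key, a read like this can be trained with gradients; a higher `sharpness` makes the read behave more like a hard lookup of a single row.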

Researchers at Technische Universität Darmstadt have recently introduced a new memory-augmented ANN-based architecture that can learn abstract strategies for solving problems. This architecture, presented in a paper published in Nature Machine Intelligence, separates algorithmic computations from data-dependent manipulations, dividing the flow of information processed by algorithms into two distinct ‘streams’.

“Extending neural networks with external memories has increased their capacities to learn such strategies, but they are still prone to data variations, struggle to learn scalable and transferable solutions, and require massive training data,” the researchers wrote in their paper. “We present the neural Harvard computer, a memory-augmented network-based architecture that employs abstraction by decoupling algorithmic operations from data manipulations, realized by splitting the information flow and separated modules.”

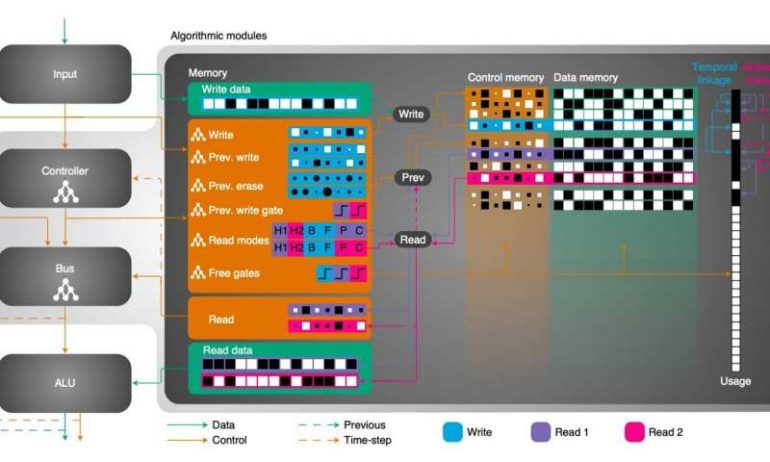

The neural Harvard computer (NHC) divides the flow of information fed to an algorithm into two streams: a data stream, which carries data-specific manipulations, and a control stream, which carries algorithmic operations. This lets the architecture separate data-related modules from algorithmic modules, creating two separate yet coupled memories.
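The idea of decoupling algorithmic operations from data manipulations can be illustrated with a deliberately small, hand-written example, assuming a task of finding the "best" element of a non-empty list. A hypothetical controller (standing in for the control stream) chooses the next operation using only abstract flags, while a data side (standing in for the data stream) stores the values, computes those flags and applies the chosen operations. In the NHC the controller is a trained neural network and the modules are considerably richer; the opcode names and functions here are illustrative assumptions, not the paper's design.

```python
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")

# Hypothetical control-stream opcodes: the controller only ever sees flags and emits these.
ADVANCE, UPDATE_BEST, HALT = "ADVANCE", "UPDATE_BEST", "HALT"


def controller(at_end: bool, current_is_better: bool) -> str:
    """Control stream: picks the next operation from abstract flags only.
    It never sees the actual data values."""
    if at_end:
        return HALT
    if current_is_better:
        return UPDATE_BEST
    return ADVANCE


def run_find_best(data: Sequence[T], better: Callable[[T, T], bool]) -> T:
    """Data stream: holds the values, computes the flags for the controller,
    and carries out whichever operation the controller chooses."""
    if not data:
        raise ValueError("data must be non-empty")
    best = data[0]
    i = 1
    while True:
        at_end = i >= len(data)
        op = controller(at_end, (not at_end) and better(data[i], best))
        if op == HALT:
            return best
        if op == UPDATE_BEST:
            best = data[i]
        i += 1  # both ADVANCE and UPDATE_BEST move on to the next element


# Usage: the same controller handles integers and strings unchanged.
largest = run_find_best([3, 9, 4], lambda a, b: a > b)
longest = run_find_best(["pear", "fig", "banana"], lambda a, b: len(a) > len(b))
```

Because the controller never touches the values, only the comparison supplied to the data side changes between the two calls. This loosely mirrors the article's point that the NHC's learned solutions are independent of the data representation.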

The NHC has three main algorithmic modules, which are referred to as the controller, the memory and the bus. These three components have distinct functions but interact with each other to acquire abstractions that can be applied to future tasks.

“This abstraction mechanism and evolutionary training enable the learning of robust and scalable algorithmic solutions,” the researchers explained in their paper.
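Evolutionary training optimizes a model using only a scalar score (its fitness) for each candidate, without computing gradients. The following is a minimal, generic (1+λ) evolution strategy in plain Python, included only to illustrate the general technique; it is not the specific evolutionary algorithm used in the paper, and the toy fitness function and all parameter values are assumptions chosen for the example.

```python
import random
from typing import Callable, List

Params = List[float]


def evolve(fitness: Callable[[Params], float], dim: int, generations: int = 300,
           offspring: int = 10, sigma: float = 0.1, seed: int = 0) -> Params:
    """(1+lambda) evolution strategy: mutate the current parent with Gaussian noise
    and keep the best offspring only if it is at least as fit as the parent."""
    rng = random.Random(seed)
    parent = [0.0] * dim
    parent_fit = fitness(parent)
    for _ in range(generations):
        children = [[p + rng.gauss(0.0, sigma) for p in parent] for _ in range(offspring)]
        best_child = max(children, key=fitness)
        best_fit = fitness(best_child)
        if best_fit >= parent_fit:  # elitist: never accept a worse solution
            parent, parent_fit = best_child, best_fit
    return parent


# Usage on a hypothetical toy objective: move the parameters toward a fixed target.
target = [1.0, -2.0, 0.5]


def toy_fitness(w: Params) -> float:
    """Negative squared distance to the target (higher is better, maximum 0)."""
    return -sum((wi - ti) ** 2 for wi, ti in zip(w, target))


best = evolve(toy_fitness, dim=3)
```

Since the loop only needs a fitness value per candidate, it also applies when a model emits discrete choices for which gradients are unavailable.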

The team at Technische Universität Darmstadt evaluated the NHC by training it to learn 11 different algorithms and then testing the performance, generalization and abstraction capabilities of the learned solutions. The researchers found that the NHC reliably learned all 11 algorithms and that the resulting solutions also performed well on tasks far more complex than those seen during training.

“On a diverse set of 11 algorithms with varying complexities, we show that the NHC reliably learns algorithmic solutions with strong generalization and abstraction, achieves perfect generalization and scaling to arbitrary task configurations and complexities far beyond those seen during training, and independent of the data representation and the task domain,” the researchers wrote in their paper.

This study highlights the potential of using external memory components to improve the performance and generalizability of neural network-based architectures across tasks of varying complexity. In the future, the NHC architecture could be used to combine and improve the capabilities of different ANNs, aiding the development of models that can identify useful strategies to make accurate predictions based on new data.


More information:

Evolutionary training and abstraction yields algorithmic generalization of neural computers. Nature Machine Intelligence (2020). DOI: 10.1038/s42256-020-00255-1.

© 2020 Science X Network

Citation: A memory-augmented, artificial neural network-based architecture (2020, December 16) retrieved 16 December 2020 from https://techxplore.com/news/2020-12-memory-augmented-artificial-neural-network-based-architecture.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.