Animals are constantly moving and behaving in response to instructions from the brain. But while there are advanced techniques for measuring these instructions in terms of neural activity, there is a paucity of techniques for quantifying the behavior itself in freely moving animals. This inability to measure the key output of the brain limits our understanding of the nervous system and how it changes in disease.

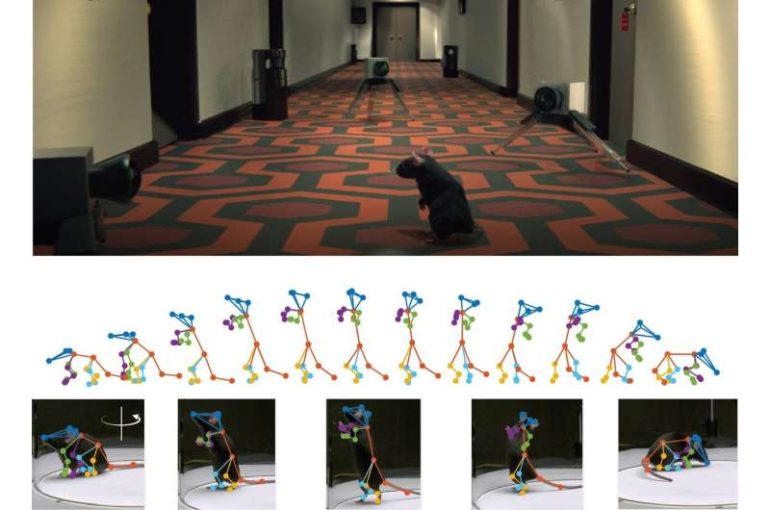

A new study by researchers at Duke University and Harvard University introduces an automated tool that can readily capture the behavior of freely behaving animals and precisely reconstruct their three-dimensional (3D) pose from a single video camera and without markers.

The April 19 study in Nature Methods, led by Timothy W. Dunn, Assistant Professor, Duke University, and Jesse D. Marshall, postdoctoral researcher, Harvard University, describes a new 3D deep neural network, DANNCE (3-Dimensional Aligned Neural Network for Computational Ethology). The study follows the team’s 2020 study in Neuron, which introduced the behavioral monitoring system CAPTURE (Continuous Appendicular and Postural Tracking using Retroreflector Embedding). CAPTURE uses motion capture and deep learning to continuously track the 3D movements of freely behaving animals, and it yielded an unprecedentedly detailed description of how animals behave. However, it required specialized hardware and markers attached to the animals, making it a challenge to use.

“With DANNCE we relieve this requirement,” said Dunn. “DANNCE can learn to track body parts even when they can’t be seen, and this increases the types of environments in which the technique can be used. We need this invariance and flexibility to measure movements in naturalistic environments more likely to elicit the full and complex behavioral repertoire of these animals.”

DANNCE works across a broad range of species and is reproducible across laboratories and environments, which positions it for broad impact on animal, and even human, behavioral studies. It has a specialized neural network tailored to 3D pose tracking from video. A key aspect is that its 3D feature space is in physical units (meters) rather than camera pixels. This allows the tool to generalize more readily across different camera arrangements and laboratories. In contrast, previous approaches to 3D pose tracking used neural networks tailored to pose detection in two dimensions (2D), which struggled to adapt to new 3D viewpoints.
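
To illustrate why a feature space in meters transfers across camera setups, here is a minimal sketch, not the DANNCE code itself. It builds a small cube of voxel centers in world coordinates and projects them into a camera with a standard pinhole model. The function names, grid size, and camera parameters are all hypothetical values chosen for illustration. The grid is defined once in physical space; only the calibration (`K`, `R`, `t`) changes from camera to camera.

```python
import numpy as np

def make_metric_grid(center_m, side_m=0.24, n_vox=4):
    """Return (n_vox**3, 3) voxel centers in meters for a cube around center_m."""
    half = side_m / 2.0
    step = side_m / n_vox
    # Voxel centers along one axis, expressed as offsets from the cube center.
    offsets = np.linspace(-half + step / 2.0, half - step / 2.0, n_vox)
    gx, gy, gz = np.meshgrid(offsets, offsets, offsets, indexing="ij")
    grid = np.stack([gx, gy, gz], axis=-1).reshape(-1, 3)
    return grid + np.asarray(center_m, dtype=float)

def project_points(points_m, K, R, t):
    """Pinhole projection of world points (meters) to pixel coordinates."""
    cam = points_m @ R.T + t          # world frame -> camera frame
    uvw = cam @ K.T                   # camera frame -> homogeneous pixels
    return uvw[:, :2] / uvw[:, 2:3]   # divide by depth

# Hypothetical calibrations for two cameras with different lenses and positions.
K_a = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
K_b = np.array([[800.0, 0.0, 640.0], [0.0, 800.0, 360.0], [0.0, 0.0, 1.0]])
R = np.eye(3)
t_a = np.zeros(3)
t_b = np.array([0.1, 0.0, 0.5])

# One metric grid, centered 1 m in front of camera A (assumed animal position).
grid = make_metric_grid(center_m=[0.0, 0.0, 1.0])
pixels_a = project_points(grid, K_a, R, t_a)
pixels_b = project_points(grid, K_b, R, t_b)
print(grid.shape, pixels_a.shape, pixels_b.shape)
```

The same voxel lands at different pixel coordinates in each camera, but its position in meters is identical, so a network operating on the grid does not need to relearn pixel-level geometry for each new rig.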

“We compared DANNCE to other networks designed to do similar tasks and found DANNCE outperformed them,” said Marshall.

To predict landmarks on an animal’s body, DANNCE required a large training dataset, which at the outset seemed daunting to collect. “Deep neural networks can be incredibly powerful, but they are very data hungry,” said senior author Bence Ölveczky, Professor in the Department of Organismic and Evolutionary Biology, Harvard University. “We realized that CAPTURE generates exactly the kind of rich and high-quality training data these little artificial brains need to do their magic.”

The researchers used CAPTURE to collect seven million examples of images and labeled 3D keypoints in rats from 30 different camera views. “It worked immediately on new rats, even those not wearing the markers,” Marshall said. “We really got excited though when we found that it could also track mice with just a few extra examples.”

Following the discovery, the team collaborated with multiple groups at Duke University, MIT, Rockefeller University and Columbia University to demonstrate the generality of DANNCE in various environments and species including marmosets, chickadees, and rat pups as they grow and develop.

“What’s remarkable is that this little network now has its own secrets and can infer the precise movements of animals it wasn’t trained on, even when large parts of their body are hidden from view,” said Ölveczky.

The study highlights some of the applications of DANNCE that allow researchers to examine the microstructure of animal behavior well beyond what is currently possible with human observation. The researchers show that DANNCE can extract individual ‘fingerprints’ describing the kinematics of different behaviors that mice make. These fingerprints should allow researchers to achieve standardized definitions of behaviors that can be used to improve reproducibility across laboratories. They also demonstrate the ability to carefully trace the emergence of behavior over time, opening new avenues in the study of neurodevelopment.

Measuring movement in animal models of disease is critically important for both basic and clinical research programs, and DANNCE can be readily applied to both domains. Partial funding for CAPTURE and DANNCE was provided by the NIH and the Simons Foundation Autism Research Initiative (SFARI), and the researchers note the value these tools hold for autism-related and motor-related studies, both in animal models and in humans.

“Because we’ve had very poor ability to quantify motion and movement rigorously in humans this has prevented us from separating movement disorders into specialized subtypes that potentially could have different underlying mechanisms and remedies. I think any field in which people have noticed but have been unable to quantify effects across their population will see great benefits from applying this technology,” said Dunn.

The researchers open-sourced the tool, and it is already being put to use in other labs. Going forward, they plan to apply the system to multiple interacting animals. “DANNCE changes the game for studying behavior in free moving animals,” said Marshall. “For the first time we can track actual kinematics in 3D and learn in unprecedented detail what animals do. These approaches are going to be more and more essential in our quest to understand how the brain operates.”

More information: Geometric deep learning enables 3D kinematic profiling across species and environments, Nature Methods (2021). DOI: 10.1038/s41592-021-01106-6

Provided by Harvard University

Citation: 3D deep neural network precisely reconstructs freely-behaving animal’s movements (2021, April 19), retrieved 20 April 2021 from https://techxplore.com/news/2021-04-3d-deep-neural-network-precisely.html
