It’s an everyday scenario: you’re driving down the highway when, out of the corner of your eye, you spot a car merging into your lane without signaling. How fast can your eyes react to that visual stimulus? Would it make a difference if the offending car were blue instead of green? And if the color green shortened the split-second delay between the initial appearance of the stimulus and the moment the eye begins moving toward it (a rapid eye movement known to scientists as a saccade), could drivers benefit from an augmented reality overlay that made every merging vehicle green?

Qi Sun, a joint professor in Tandon’s Department of Computer Science and Engineering and the Center for Urban Science and Progress (CUSP), is collaborating with neuroscientists to find out.

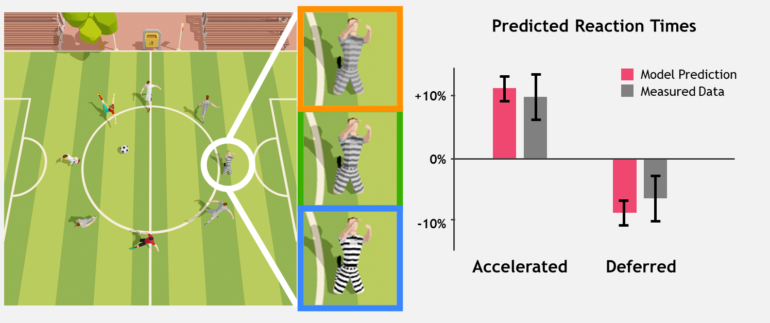

He and his Ph.D. student Budmonde Duinkharjav, along with colleagues from Princeton, the University of North Carolina, and NVIDIA Research, recently authored the paper “Image Features Influence Reaction Time: A Learned Probabilistic Perceptual Model for Saccade Latency.” The paper presents a model that predicts temporal gaze behavior, particularly saccadic latency, as a function of the statistics of a displayed image. Inspired by neuroscience, the model could ultimately have significant implications for highway safety, telemedicine, e-sports, and any other arena in which augmented reality (AR) and virtual reality (VR) are used.

“While AR/VR technology may not transform us into Superhumans right away,” Sun, who heads the Immersive Computing Lab at Tandon, says, “the potential of these new forms of emerging media to boost human performance is very exciting.”

“Image Features Influence Reaction Time” garnered best-paper honors at the Association for Computing Machinery’s 2022 SIGGRAPH (Special Interest Group on Computer Graphics and Interactive Techniques) conference, the field’s premier annual gathering. A second paper Sun co-authored, “Joint Neural Phase Retrieval and Compression for Energy- and Computation-efficient Holography on the Edge,” received an honorable mention.

In that second paper, he and his co-authors delve into the challenges of creating high-fidelity holographic displays, which demand more computation and energy than many devices can provide. As the researchers point out, even running the computation entirely on a cloud server is not an effective solution, since it can result in prohibitively high latency and storage requirements. They instead propose a framework that jointly generates and compresses high-quality holograms by distributing the computation and optimizing the transmission, resulting in an 83% reduction in energy costs and significantly lower average bit rates and decoding times.

In another recent paper, Sun, a former research scientist at software giant Adobe, posited that we can predict and change people’s perception of time, even by a span of several minutes, by altering different visual features seen in VR settings. “Time perception is fluid,” he explains, “and our findings have the potential to have a profound impact in real-world situations. Imagine, for example, that we could reduce how much pain a patient perceives during a medical procedure with the use of VR or help a pilot in training to feel less fatigue. There are even applications within a field like urban planning since perceived waiting times for public transit are a source of dissatisfaction for commuters in many cities.”

As neuroscientists make new discoveries about how the brain works, Sun hopes to bring them to bear in emerging media to unlock real-world benefits. “Think of the brain as a low-powered computer,” he says. “We know new technologies have an effect on our cognition and behavior, and we should be harnessing that for the good of society and helping prevent any negative effects.”

More information: Budmonde Duinkharjav et al., Image features influence reaction time, ACM Transactions on Graphics (2022). DOI: 10.1145/3528223.3530055

Provided by NYU Tandon School of Engineering

Citation: How image features influence reaction times (2022, August 5)