A scientist from the Graduate School of Engineering at Osaka University proposed a numerical scale to quantify the expressiveness of robotic android faces. By focusing on the range of deformation of the face instead of the number of mechanical actuators, the new system can more accurately measure how much robots are able to mimic actual human emotions. This work, published in Advanced Robotics, may help develop more lifelike robots that can rapidly convey information.

Imagine that on your next trip to the mall, you head to the information desk to ask for directions to a new store. But, to your surprise, an android is staffing the desk. As much as it might sound like science fiction, this situation may not be so far in the future. However, one obstacle is the lack of a standard methodology for measuring the expressiveness of android faces. Ideally, such a measure would apply equally to both humans and androids.

Now, a new evaluation method has been proposed at Osaka University to precisely measure the mechanical performance of android robot faces. Facial expressions are important for transmitting information during social interactions, but the degree to which mechanical actuators can reproduce human emotions varies greatly.

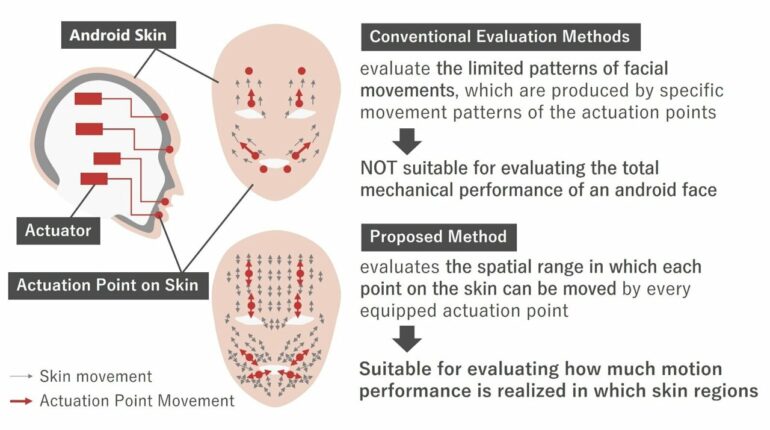

“The goal is to understand how expressive an android face can be compared to humans,” author Hisashi Ishihara says. Whereas previous evaluation methods focused only on specific coordinated facial movements, the new method uses the spatial range over which each part of the skin can move as its numerical indicator of “expressiveness.” That is, instead of counting the number of facial patterns that the mechanical actuators can create, or evaluating the quality of those patterns, the new index considers the total spatial range of motion accessible to every point on the face.
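To make the idea of a per-point range of motion concrete, here is a minimal sketch. It assumes motion capture data arrive as an array of 3D marker positions for every frame, plus a neutral (resting) position for each marker. Taking a cue from the "approximate octahedrons" mentioned in a figure caption, it approximates each point's reachable region by the octahedron spanned by that point's largest displacements along +x, -x, +y, -y, +z and -z. The function name `octahedron_expressiveness` and this exact construction are illustrative assumptions, not necessarily the procedure used in the paper.

```python
import numpy as np


def octahedron_expressiveness(positions: np.ndarray, neutral: np.ndarray) -> np.ndarray:
    """Hypothetical per-point expressiveness score (an assumption, not the paper's code).

    positions: shape (frames, points, 3), captured 3D marker positions.
    neutral:   shape (points, 3), each marker's position with the face at rest.
    Returns one value per point: the volume of the octahedron whose six vertices
    lie at the point's largest observed displacement along +x, -x, +y, -y, +z, -z.
    """
    if positions.ndim != 3 or positions.shape[1:] != neutral.shape:
        raise ValueError("expected positions (frames, points, 3) and neutral (points, 3)")

    # Displacement of every marker in every frame relative to its resting position.
    disp = positions - neutral[np.newaxis, :, :]

    # Farthest reach in the positive and negative direction of each axis.
    # Clipping at zero handles points that never move to one side of neutral.
    pos_reach = np.clip(disp.max(axis=0), 0.0, None)   # (points, 3)
    neg_reach = np.clip(-disp.min(axis=0), 0.0, None)  # (points, 3)

    # Total span along x, y and z for each point.
    span = pos_reach + neg_reach

    # The octahedron splits into eight tetrahedra (one per octant), each with
    # volume a*b*c/6; summing them gives span_x * span_y * span_z / 6.
    return span.prod(axis=1) / 6.0
```

Because the volume is one sixth of the product of the three axis spans, a point that never moves scores zero, and so does a point that moves along only one or two axes; a richer hull (for example, the convex hull of all observed positions) would avoid that limitation at the cost of more computation.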

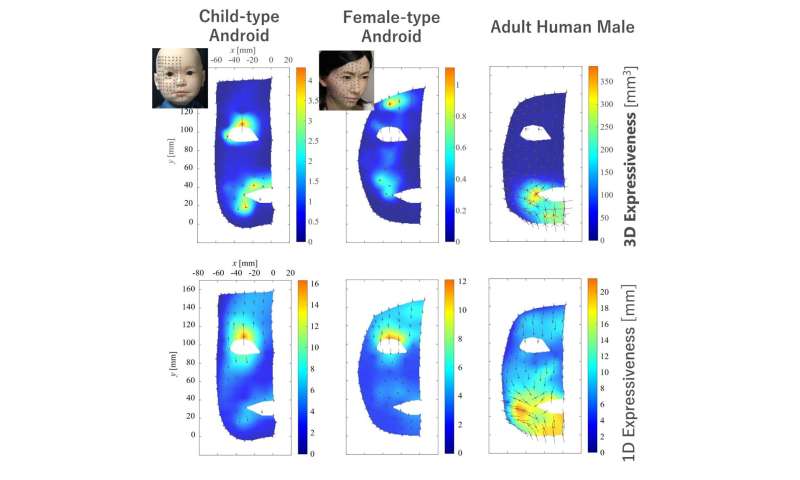

Spatial distribution of expressiveness on the faces of the androids and a human. © Hisashi Ishihara

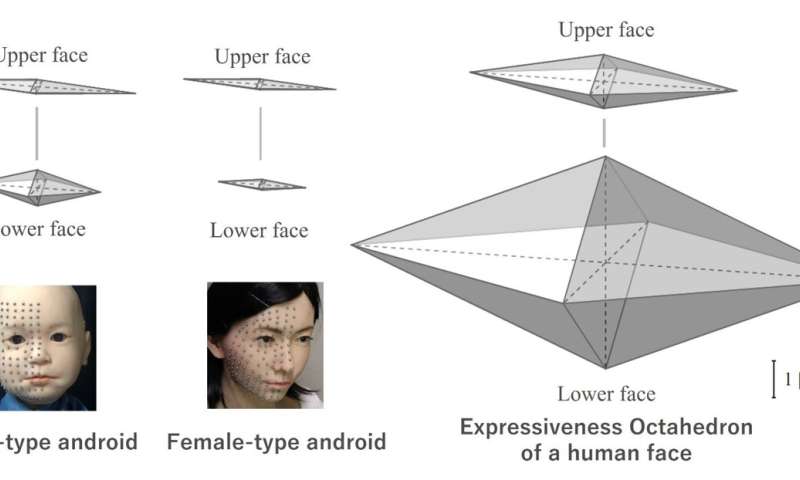

Comparison of the approximate expressiveness octahedrons. © Hisashi Ishihara

For this study, two androids (one representing a child and one representing an adult female) were analyzed, along with three adult human males. The displacements of more than 100 facial points on each subject were measured using an optical motion capture system. The androids proved significantly less expressive than the humans, especially in the lower regions of their faces. In fact, even under the most lenient evaluation, the androids' potential range of motion was only about 20% of the humans'.
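Findings such as "especially in the lower regions" and "about 20% that of humans" are ratios between aggregated per-point values. A minimal sketch of such a comparison, assuming each subject's expressiveness has already been computed for every facial point and each point carries a region label such as "upper" or "lower". The function `regional_ratio` and the labels are hypothetical, and comparing regional means is an assumption rather than the paper's stated aggregation.

```python
import numpy as np


def regional_ratio(android_vals, android_regions, human_vals, human_regions, region):
    """Android-to-human ratio of mean per-point expressiveness within one facial region.

    android_vals / human_vals: per-point expressiveness values (one per marker).
    android_regions / human_regions: a region label for each of those markers.
    The subjects may have different numbers of markers, since only regional
    means are compared (a simplifying assumption for this sketch).
    """
    a_vals = np.asarray(android_vals, dtype=float)
    h_vals = np.asarray(human_vals, dtype=float)

    # Keep only the markers that belong to the requested region.
    a = a_vals[np.asarray(android_regions) == region]
    h = h_vals[np.asarray(human_regions) == region]

    if a.size == 0 or h.size == 0:
        raise ValueError(f"no points labelled {region!r} for one of the subjects")
    if h.mean() == 0.0:
        raise ValueError("human reference expressiveness is zero in this region")

    return float(a.mean() / h.mean())
```

For instance, with made-up android values [1, 1, 4, 4] and human values [10, 10, 8, 8], both labeled lower, lower, upper, upper, the function returns 0.1 for "lower" and 0.5 for "upper". These toy numbers only illustrate the arithmetic and are not data from the study.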

“This method is expected to aid in the development of androids with expressive power that rivals what humans are capable of,” Ishihara says. Future research on this evaluation method may help android developers create robots with increased expressiveness.

More information:

Hisashi Ishihara, Objective evaluation of mechanical expressiveness in android and human faces, Advanced Robotics (2022). DOI: 10.1080/01691864.2022.2103389

Citation:

Objective evaluation of mechanical expressiveness in android and human faces (2022, August 17)