Over the past few decades, roboticists and computer scientists have developed a variety of data-based techniques for teaching robots how to complete different tasks. To achieve satisfactory results, however, these techniques should be trained on reliable and large datasets, preferably labeled with information related to the task they are learning to complete.

For instance, when trying to teach robots to complete tasks that involve the manipulation of objects, these techniques could be trained on videos of humans manipulating objects, which should ideally include information about the types of grasps they are using. This allows the robots to easily identify the strategies they should employ to grasp or manipulate specific objects.

Researchers at the University of Pisa, Istituto Italiano di Tecnologia, Alpen-Adria-Universität Klagenfurt and TU Delft recently developed a new taxonomy to label videos of humans manipulating objects. This grasp classification method, introduced in a paper published in IEEE Robotics and Automation Letters, accounts for movements prior to the grasping of objects, for bimanual grasps and for non-prehensile strategies.

“We have been working for some time now (some of us for a long time) on studying human behavior in grasping and manipulation, and on using the great insights that you get from the human example to build more effective robotic hands and algorithms,” Cosimo Della Santina, one of the researchers who carried out the study, told TechXplore. “In this process, one exercise that we do a lot is finding ways of representing the wide variety of human capabilities.”

In the past, other research teams introduced taxonomies that characterize human grasping strategies and actions. However, these taxonomies were not developed with video labeling in mind, so they have considerable limitations when applied to this task.

“For example, existing taxonomies do not have the right trade-off between granularity and ease of implementation and usually discard important aspects that are present in hand-centered video material, such as bimanual grasps,” Matteo Bianchi, another researcher involved in the study, told TechXplore. “For these reasons, we propose a new taxonomy that was specifically developed for enabling the labeling of human grasping videos. This taxonomy can account for pre-grasp phases, bimanual grasps, nonprehensile manipulation, and environmental exploitation events.”

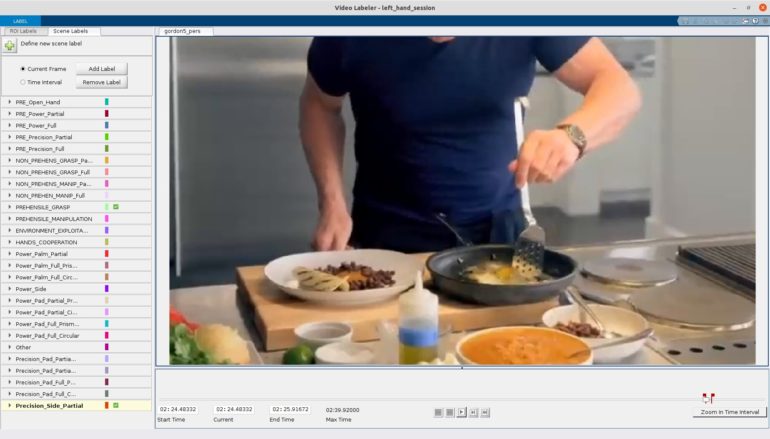

In addition to introducing a new taxonomy, Della Santina, Bianchi and their colleagues used it to create a labeled dataset containing videos of humans performing daily activities that involve object manipulation. In their paper, they also describe a series of MATLAB tools for labeling new videos of humans completing manipulation tasks.
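To make the idea of a labeled video concrete, here is a minimal Python sketch of what one annotation record could look like. It is an illustration only, not the researchers' MATLAB tools or their actual label set: the names `Phase`, `Segment` and `time_per_phase` are hypothetical, and the fields simply mirror the aspects mentioned in the article (pre-grasp phases, bimanual grasps, non-prehensile manipulation and use of the environment).

```python
from dataclasses import dataclass
from enum import Enum


class Phase(Enum):
    # Hypothetical coarse labels, loosely based on the aspects named in the
    # article; the real taxonomy in the paper is more detailed.
    PRE_GRASP = "pre_grasp"
    GRASP = "grasp"
    NON_PREHENSILE = "non_prehensile"


@dataclass(frozen=True)
class Segment:
    """One labeled time span of a video (times in seconds)."""
    start_s: float
    end_s: float
    phase: Phase
    bimanual: bool = False          # both hands involved
    uses_environment: bool = False  # e.g. sliding an object against a table

    def __post_init__(self):
        if self.end_s <= self.start_s:
            raise ValueError("segment must end after it starts")

    @property
    def duration_s(self) -> float:
        return self.end_s - self.start_s


def time_per_phase(segments):
    """Sum the labeled duration for each phase."""
    totals = {}
    for seg in segments:
        totals[seg.phase] = totals.get(seg.phase, 0.0) + seg.duration_s
    return totals


# Made-up annotations for a single short clip.
segments = [
    Segment(0.0, 1.5, Phase.PRE_GRASP),
    Segment(1.5, 4.0, Phase.GRASP, bimanual=True),
    Segment(4.0, 5.0, Phase.NON_PREHENSILE, uses_environment=True),
]
totals = time_per_phase(segments)
```

A flat list of time-stamped segments like this is one simple way to store labels so that they can later be filtered (for example, all bimanual grasps) or fed to a learning algorithm; other layouts are equally plausible.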

“We show that there is a lot to gain in looking for the right tradeoff between capability of explaining complex human behaviors and easing the practical endeavor of labeling videos which are not explicitly produced as output of scientific research,” Della Santina said.

“Our study opens up the possibility of leveraging the large abundance of videos involving human hands that can be easily found on the web (e.g., YouTube) to study human behavior in a precise and scientific way.”

In videos of cooks preparing a meal, for instance, the camera is generally focused on the cook's hands. Until now, however, there has been no well-defined language that allowed engineers to use this kind of footage to train machine learning algorithms. Della Santina, Bianchi and their colleagues introduced such a language and validated it.

In the future, the labeled dataset they compiled could be used to train both existing and new algorithms on image recognition, robotic grasping and robotic manipulation tasks. In addition, the taxonomy introduced in their paper could help to compile other datasets and to label other videos of humans manipulating objects.

“Empowered by this new tool we plan to keep doing what we all like the most: be amazed by the capability of humans during even the most mundane activities involving grasping and manipulation and think of ways of transferring these capabilities to robots,” Della Santina said. “We believe that the new language we developed will multiply our capability of doing these things, by incorporating non-scientific material in our scientific investigations.”


More information:

Understanding human manipulation with the environment: a novel taxonomy for video labelling. IEEE Robotics and Automation Letters (2021). DOI: 10.1109/LRA.2021.3094246.

2021 Science X Network

Citation: A new taxonomy to characterize human grasp types in videos (2021, July 28), retrieved 28 July 2021 from https://techxplore.com/news/2021-07-taxonomy-characterize-human-grasp-videos.html
