Gary Marcus, top, hosted presentations by sixteen AI scholars on what AI needs to “move forward.”

Montreal.ai

A year ago, Gary Marcus, a frequent critic of deep learning forms of AI, and Yoshua Bengio, a leading proponent of deep learning, faced off in a two-hour debate about AI at the headquarters of Bengio’s MILA institute in Montreal.

Wednesday evening, Marcus was back, albeit virtually, to open the second installment of what has become a planned annual debate on AI, under the title “AI Debate 2: Moving AI Forward.” (You can follow the proceedings from 4 pm to 7 pm on Montreal.ai’s Facebook page.)

Vincent Boucher, president of the organization Montreal.AI, who helped organize last year’s debate, opened the proceedings before passing the mic to Marcus as moderator.

Marcus said 3,500 people had pre-registered for the evening, and at the start, 348 people were watching live on Facebook. Last year’s debate had drawn 30,000 viewers by the end of the night, noted Marcus.

Bengio was not in attendance, but the evening featured presentations from sixteen scholars: Ryan Calo, Yejin Choi, Daniel Kahneman, Celeste Kidd, Christof Koch, Luís Lamb, Fei-Fei Li, Adam Marblestone, Margaret Mitchell, Robert Osazuwa Ness, Judea Pearl, Francesca Rossi, Ken Stanley, Rich Sutton, Doris Tsao and Barbara Tversky.

“The point is to represent a diversity of views,” said Marcus, promising three hours that might be like “drinking from a firehose.”

Marcus opened the talk by recalling last year’s debate with Bengio, which he said had been about the question: Are big data and deep learning alone enough to get to general intelligence?

This year, said Marcus, there had been a “stunning convergence,” as Bengio and another deep learning scholar, Facebook’s head of AI, Yann LeCun, seemed to be making the same points as Marcus about

A recent paper by Jürgen Schmidhuber also seemed to be part of that convergence, said Marcus.

Following this convergence, said Marcus, it was time to move to “the debate of the next decade: How can we move AI to the next level?”

Each of the sixteen speakers spoke for roughly five minutes about their focus and what they believe AI needs. Marcus has compiled a reading packet on the scholars’ work that you can check out.

Fei-Fei Li, the Sequoia Professor of computer science at Stanford University, talked about what she called the “north star” of AI.

The north star, she said, was interaction with the environment.

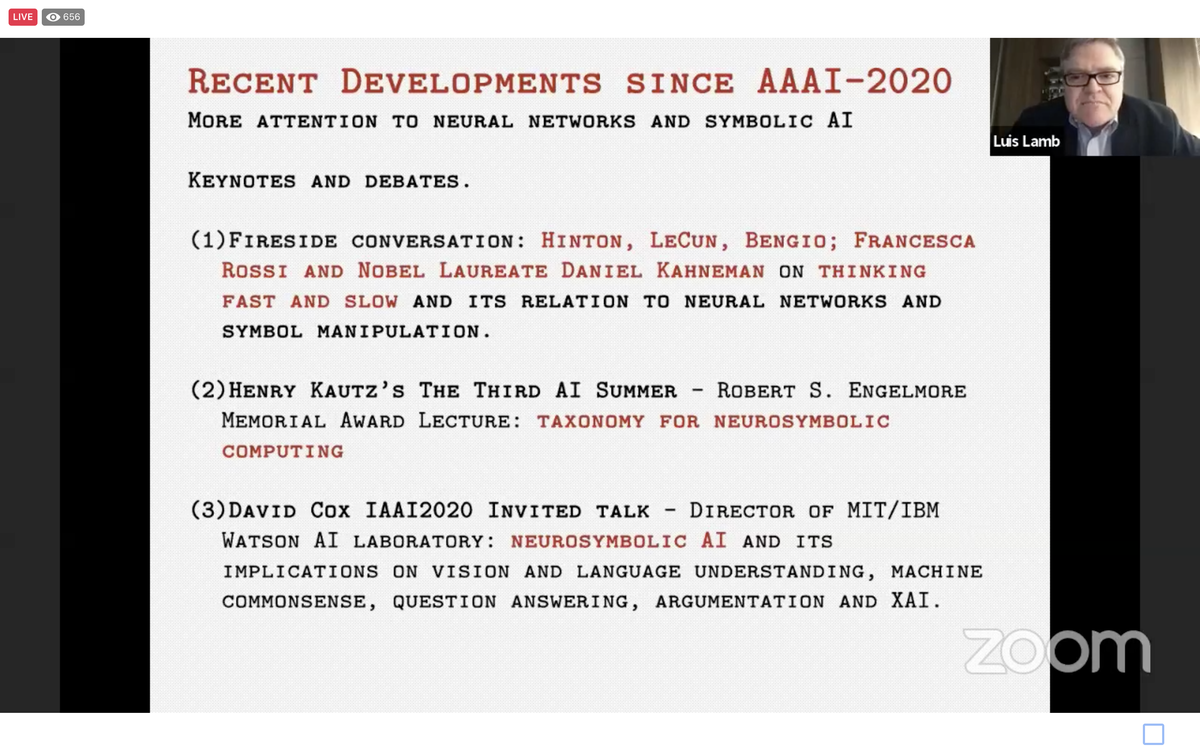

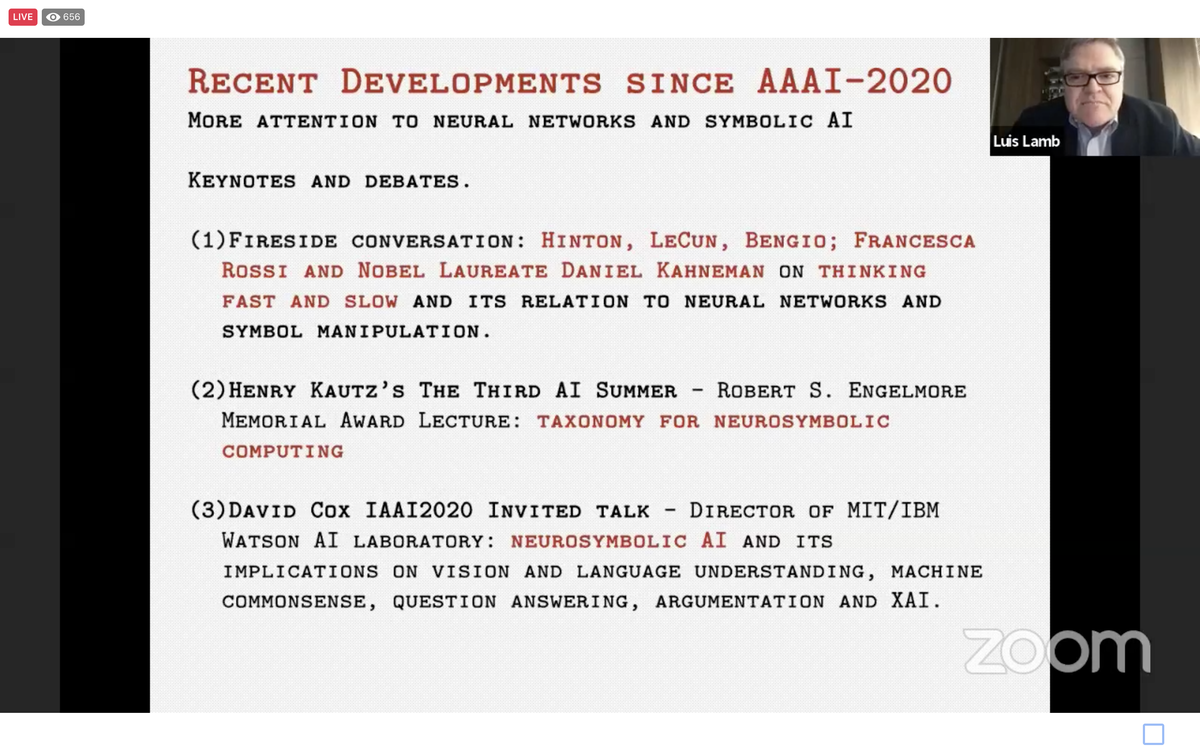

Following Li, Luís Lamb, who is professor of computer science at the Universidade Federal do Rio Grande do Sul in Brazil, talked about “neurosymbolic AI,” a topic he had presented a week prior at the NeurIPS AI conference.

Calling Marcus and Judea Pearl “heroes,” Lamb said neurosymbolic work draws on concepts from Marcus’s book, “The Algebraic Mind,” including the need to manipulate symbols on top of neural networks. “We need a foundational approach based both on logical formalization” such as Pearl’s “and machine learning,” said Lamb.

Lamb was followed by Rich Sutton, distinguished scientist at DeepMind. Sutton recalled neuroscientist David Marr, whom he called one of the great figures of AI. He described Marr’s notion of three levels of analysis: computational theory, representation and algorithm, and hardware implementation. Marr was particularly interested in computational theory, but “there is very little computational theory” today in AI, said Sutton.

Things such as gradient descent are “hows,” said Sutton, not the “what” that computational theory needs.

“Reinforcement learning is the first computational theory of intelligence,” declared Sutton. Other candidates, he said, include predictive coding and Bayesian inference.

“AI needs an agreed-upon computational theory of intelligence,” concluded Sutton, “RL is the stand-out candidate for that.”

Next came Pearl, who has written numerous books about causal reasoning, including the Times best-seller, The Book of Why. His talk was titled, “The domestication of causality.”

“We are sitting on a goldmine,” said Pearl, referring to deep learning. “I am proposing the engine that was constructed in the causal revolution to represent a computational model of a mental state deserving of the title ‘deep understanding’,” said Pearl.

Deep understanding, he said, would be the only kind of system capable of answering the questions “What is?” “What if?” and “If only?”

Next up was Robert Ness, on the topic of “causal reasoning with (deep) probabilistic programming.”

Ness said he thinks of himself as an engineer and is interested in building things. “Probabilistic programming will be key,” he said, to solving causal reasoning. Probabilistic programming could build agents that can reason counterfactually, a key to causal reasoning and a topic Ness described as “near and dear to my heart.” This, he said, could address Pearl’s “If only?” question.

Next was Ken Stanley, who is Charles Millican professor of computer science at the University of Central Florida. Stanley took a sweeping view of evolution and creativity.

“We layer idea upon idea, over millennia, from fire and wheels up to space stations, which we would call an open-ended system.” Evolution, said Stanley, was a parallel: a “phenomenal system” that had produced intelligence. “Once we began to exist, you got all the millennia of creativity,” said Stanley. “We should be trying to understand these phenomena,” he said.

Following Stanley was Yejin Choi, the Brett Helsel Associate Professor of Computer Science at the University of Washington.

She recalled Roger Shepard’s “Monsters in a Tunnel” and examples from “The Enigma of Reason,” which led her to talk about the importance of language, and also about “reasoning as generative tasks.” “We just reason on the fly, and this is going to be one of the key fundamental challenges moving forward.”

Choi said new language models like “GPT-4, 5, 6 will not cut it.”

Marcus took a moment to identify convergence among the speakers: on counterfactuals; on dealing with the unfamiliar, and open-mindedness; on the need to integrate knowledge, per Pearl’s point that data alone is not enough; and on the importance of common sense, per Choi’s point about the “generative.”

Marcus asked the panel, “Okay, we’ve seen six or seven different perspectives. Do we want to put all those together? Do we want to make reinforcement learning compatible with knowledge?”

Lamb noted that neurosymbolic systems are not about “the how.” “It’s important to understand we have the same aims of building something very solid in terms of learning, but representation precedes learning,” he said.

Marcus asked the scholars to reflect on modularity. Sutton replied that he would welcome lots of ways to think about the overall problem, not just representation. “What is it? What is the overall thing we’re trying to achieve” with computational theory, he prompted. “We need multiple computational theories,” said Sutton.

Among audience questions, one individual asked how to reason in a causal framework with unknown, or novel, variables. Ness talked about COVID-19 and studying viruses. Prior viruses helped to develop causal models that could be extended to SARS-CoV-2. He referred to dynamic models such as the ubiquitous “SEIR” epidemiological model, and to modeling them in probabilistic languages. “You encounter it a lot where you hit a problem you’re unfamiliar with, but you can import abstractions from other domains.”

“Humans are capable of believing strange things, and do strange causal reasoning,” said Choi. “Do we want to build a human-like system?” Choi said one thing that is interesting about humans is the ability to communicate so much knowledge in natural language, and to learn through natural language.

Marcus asked Li and Stanley about “neuro-evolution.” “What’s the status of that field?” he asked.

Stanley said the field might use evolutionary algorithms, but it also might just be an attempt to understand open-ended phenomena. He suggested “hybridizing” evolutionary approaches with learning algorithms.

“Evolution is one of the greatest, richest experiments we have seen in intelligence,” said Li. She suggested there will be “a set of unifying principles behind intelligence.” “But I’m not dogmatic about following the biological constraints of evolution, I want to distill the principles,” said Li.

After the question break, Marcus brought on Tversky, emeritus professor of psychology at Stanford. Her focus was encapsulated in a quote on the screen: “All living things must act in space … when motion ceases, life ends.” Tversky talked about how people’s gestures, spatial motor movements, can affect how well or how poorly they think.

“Learning, thinking, communication, cooperation, competing, all rely on actions, and a few words.”

Next was Daniel Kahneman, Nobel laureate and author of Thinking, Fast and Slow, a kind of bible for some who work on reasoning in AI. Kahneman noted he has been identified with the book’s paradigm, “System 1 and System 2 thinking.” One is a form of intuition, the other a form of higher-level reasoning.

Kahneman said System 1 could encompass anything that is non-symbolic, but it was not true to say System 1 is a non-symbolic system. “It’s much too rich for that,” he said. “It holds a representation of the world, the representation that allows a simulation of the world,” which humans live with, said Kahneman. “Most of the time, we are in the valley of the normal, events that we expect.” The model accepts many events as “normal,” said Kahneman, even if they are unexpected. It rejects others. Picking up on Pearl’s theme, Kahneman said a lot of counterfactual reasoning happens in System 1, where an innate sense of what is normal would govern such reasoning.

Following Kahneman was Doris Tsao, a professor of biology at Caltech. She focused on feedback systems, recalling McCulloch and Pitts’s early work on neurons. Feedback is essential, she said, citing back-propagation in multilayer neural nets. Understanding feedback may allow one to build more robust vision systems, said Tsao, and feedback systems might help to explain phenomena such as hallucinations. Tsao said she is “very excited about the interaction between machine learning and systems neuroscience.”

Next, Marblestone of MIT, previously a research scientist at DeepMind, continued the theme of neuroscience. He said making observations of the brain and trying to abstract them into a theory of its functioning was “right now at a very primitive level of doing this.” Marblestone said examples of neural nets, such as convolutional neural networks, were copying human behavior.

Marblestone spoke about moving beyond just brain structure to brain activity, measured with fMRI, for example. “What if we could model not just how someone is categorizing an object, but making the system predict the full activity in the brain?”

Next came Koch, a researcher with the Allen Institute for Brain Science in Seattle. He started with the assertion, “Don’t look to neuroscience for help with AI.”

The Institute’s large-scale experiments reveal complexity in the brain that is far beyond anything seen in deep learning, he said. “The connectome reveals the brains are not just made out of millions and billions of cells, and a thousand different neuronal cell types, that differentiate by the genes expressed, the addresses they send the information to, the differences in synaptic architecture in dendritic trees,” said Koch. “We have highly heterogeneous components,” he said. “Very different from our current VLSI hardware.”

“This really exceeds anything that science has ever studied, with these highly heterogeneous components on the order of 1,000 with connectivity on the order of 10,000.” Current deep neural networks are “impoverished,” said Koch, with gain-saturation activation units. “The vast majority of them are feed forward,” said Koch, whereas the brain has “massive feedback.”

“Understanding brains is going to take us a century or two,” he advised. “It’s a mistake to look for inspiration in the mechanistic substrate of the brain to speed up AI,” said Koch, contrasting his views with those of Marblestone. “It’s just radically different from the properties of a manufactured object.”

Following Koch, Marcus posed more questions. He asked about “diversity”: what could individual pieces of the cortex tell us about other parts of the cortex?

Tsao responded by pointing out “what strikes you is the similarity,” which might indicate really deep general principles of brain operation, she said. Predictive coding is a “normative model” that could “explain a lot of things.” “Seeking this general principle is going to be very powerful.”

Koch jumped on that point, saying that cell types are “quite different,” with visual neurons being very different from, for example, pre-frontal cortex neurons.

Marblestone responded “we need to get better data” to “understand empirically the computational significance that Christof is talking about.”

Marcus asked about innateness. He asked Kahneman about his concept of “object files.” “An index card in your head for each of the things that you’re tracking. Is this an innate architecture?”

Kahneman replied that object files embody the idea of object permanence as the brain tracks objects. He likened an object file to a police file whose contents change over time as evidence is gathered. It is “surely innate,” he said.

Marcus suggested that there is no equivalent of object files in deep learning.

Marcus asked Tversky if she is a Kantian, meaning an adherent of Immanuel Kant’s notion that time and space are “innate.” She replied that she has “trouble with innate,” even with object files being “innate.” Object permanence, noted Tversky, “takes a while to learn.” The sharp dichotomy between what is innate and what is acquired made her uncomfortable, she said. She cited Li’s notion of acting in the world as important.

Celeste Kidd echoed Tversky in “having a problem saying what it means to be innate.” However, she pointed out “we are limited in knowing what an infant knows,” and there is still progress being made to understand what infants know.

Next was Kidd’s turn to present. Kidd is a professor at UC Berkeley whose lab studies how humans form beliefs. Kidd noted algorithmic bias is dangerous for the ways it can “drive human belief sometimes in destructive ways.” She cited AI systems for content recommendation, such as on social networks. Such systems can push people toward “stronger, inaccurate beliefs that despite our best efforts are very difficult to correct.” She cited the examples of Amazon and LinkedIn using AI for hiring, which had a tendency to bias against female job candidates.

“Biases in AI systems reinforce and strengthen bias in the people that use them,” said Kidd. “Right now is a terrifying time in AI,” said Kidd, citing the termination of Timnit Gebru from Google. “What Timnit experienced at Google is the norm, hearing about it is what’s unusual.” “You should listen to Timnit and countless others about what the environment at Google was like,” said Kidd. “Jeff Dean should be ashamed.”

Next up was Mitchell, who was co-lead with Gebru at Google. “The typical approach to developing machine learning collects training data, training the model, output is filtered, and people can see the output.” But, said Mitchell, human bias is upfront in the collection of data, the annotation, and “throughout the rest of development. It further affects model training.” Things such as the loss function put in a “value statement,” said Mitchell. There are also biases in post-processing.

“People see the output, and it becomes a feedback loop,” she said, one that homes in on existing beliefs. “We were trying to break this system, which we call bias laundering.” There is no such thing as neutrality in development, said Mitchell: “development is value laden.” Among the things that need to be operationalized, she said, is to break down what is packed into development, so that people can reflect on it, and work toward “foreseeable benefits” and away from “foreseeable harms and risks.” Tech, said Mitchell, is usually good at extolling the benefits of tech, but not at weighing the risks or pondering the long-term effects.

“You wouldn’t think it would be that radical to put foresight into a development process, but I think we’re seeing now just how little value people are placing on the expertise and value of their workers,” said Mitchell. She said she and Gebru continue to strive to bring the element of foresight to more areas of AI.

Next up was Rossi, an IBM fellow. She discussed the task of creating “an ecosystem of trust” for AI. “Of course we want it to be accurate, but beyond that, we want a lot of properties,” including “value alignment,” and fairness, but also explainability. “Explainability is very important especially in the context of machines that work with human beings.”

Rossi echoed Mitchell about the need for “transparency,” as she called it, about the ways bias is injected into the pipeline. Principles, said Rossi, are not enough: “it needs a lot of consultation, a lot of work in helping developers understand how to change the way they’re doing things,” and some kind of “umbrella structure” within organizations. Rossi said efforts such as neurosymbolic AI and Kahneman’s System 1 and System 2 would be important for modeling values in the machine.

The last presenter was Ryan Calo, a professor of law at the University of Washington. “I have a few problems with principles,” said Calo. “Principles are not self-enforcing, there are no penalties attached to violating them,” he said.

“My view is principles are largely meaningless because in practice they are designed to make claims no one disputes. Does anyone think AI should be unsafe? I don’t think we need principles. What we need to do is roll up our sleeves and assess how AI affects human affordances. And then adapt our system of laws to this change. Just because AI can’t be regulated as such, doesn’t mean we can’t change law in response.”

Marcus replied, saying the “Pandora’s Box is open now,” and that the world is in “the worst period of AI.” “We have these systems that are slavish to data without knowledge,” said Marcus.

Marcus asked about benchmarks. Is there some “reliable way to measure our progress toward common sense?” Choi replied that training machines through examples, or through self-supervised learning, is a pitfall.

“The truth about the current deep learning paradigm, I think it’s just wrong, so the benchmark will have to be set up in more of a generative way. There’s something about this generative ability humans have that, if AI is going to get closer to it, we really have to test the generative ability.”

Marcus asked about the literature on human biases: what can we do about them? “Should we regulate our social media companies? What about interventions?” he asked Kahneman, who has done a lifetime of work on human biases.

Kahneman was not optimistic, he said. Efforts at changing the way people think intuitively have not been very successful, noted Kahneman. “Two colleagues and I have just finished a book where we talk about decision hygiene,” he said. “That would be some descriptive principles you apply in thinking about how to think, without knowing what are the biases you are trying to eliminate. It’s like washing your hands: you don’t know what you are preventing.”

Tversky followed, saying “there are many things about AI initiatives that mystify me, one is the reliance on words when so much understanding comes in other ways, not in words.”

Mitchell noted that cognitive biases can lead to discrimination. Discrimination and biases are “closely tied” in model development, said Mitchell. Pearl offered in response that some biases might be corrigible via algorithms if they can be understood. “Some biases can be repaired if they can be modeled.” He referred to this as bias “laundering.”

Kidd noted work in her lab on reflecting upon misconceptions. Everyone reveals biases that are not justified by evidence in the world. Ness noted that cognitive biases can be useful inductive biases. That, he said, means that problems in AI are not just problems of philosophy but “very interesting technical problems.”

Marcus asked about robots. His startup, Robust.AI, is working on such systems. Most robots that exist these days “do only modest amounts of physical reasoning, just how to navigate a room,” observed Marcus. He asked Li, “How are we going to solve this question of physical reasoning that is very effective for humans, and that enters into language?”

Li replied that “embodiment and interaction is a fundamental part of intelligence,” citing the pre-verbal activity of babies. “I cannot agree more that physical reasoning is part of the larger principles of developing intelligence,” said Li. Building physical robots was not necessarily required, she said; learning agents could serve some of that need.

Marcus followed up asking about the simulation work in Li’s lab. He mentioned “affordances.” “When it comes to embodiment,” said Marcus, “a lot of it is about physical affordances. Holding this pencil, holding this phone. How close are we to that stuff?”

“We’re very close,” said Li, citing, for example, chip giant Nvidia’s work on physical modeling. “We have to have that in the next chapter of AI development,” said Li of physical interactions.

Sutton said “I try not to think about the particular contents of knowledge,” and so he is inclined to think about space the same way he thinks about other things: as states and transitions from one state to another. “I resist this question, I don’t want to think about physical space as being a special case.”

Tversky offered that people learn a lot from watching other people. “But I’m also thinking of social interactions, imitation. In small children, it is not of the exact movements, it’s often of the goals.”

Marcus replied to Tversky that “Goals are incredibly important.” He picked up the example of infants. “A fourteen-month-old does an imitation — either the exact action, or they will realize the person was doing something in a crazy way, and instead of copying, they will do what the person was trying to do,” he said, explaining experiments with children. “Innateness is not equal to birth, but early in life, representations of space and of goals are pretty rich, and we don’t have good systems for that yet” in AI, said Marcus.

Choi replied to Marcus by reiterating the importance of language. “Do we want to limit common sense research to babies, or do we want to build a system that can capture adult common sense as well?” she prompted. “Language is so convenient for us” to represent adult common sense, said Choi.

Marcus at this point changed topics, asking about “curiosity.” A huge part of humans’ ability, he said, “is setting ourselves an agenda that in some way advances our cognitive capabilities.”

Koch chimed in, “This is not restricted to humans. Look at a young chimp, young dog, cat… They totally want to explore the world.”

Stanley replied that “this is a big puzzle because it is clear that curiosity is fundamental to early development and learning and becoming intelligent as a human,” but, he said, “It’s not really clear how to formalize such behavior.”

“If we want to have curious systems at all,” said Stanley, “we have to grapple with this very subjective notion of what is interesting, and then we have to grapple with subjectivity, which we don’t like as scientists.”

Pearl proposed an incomplete theory of curiosity: mammals are trying to find their way into a system where they feel comfortable and in control. What drives curiosity, he said, is having holes in such systems. “As long as you have certain holes, you feel irritated, or discomfort, and that drives curiosity. When you fill those holes, you feel in control.”

Sutton noted that in reinforcement learning, curiosity has a “low-level role,” to drive exploration. “In recent years, people have begun to look at a larger role for what we are referring to, which I like to refer to as ‘play.’ We set goals that are not necessarily useful, but may be useful later. I set a task and say, Hey, what am I able to do? What affordances?” Sutton said play might be among the “big things” people do. “Play is a big thing,” he said.