In recent years, computer scientists have developed artificial intelligence-based techniques that can complete a wide variety of tasks. Some of these techniques are designed to artificially replicate the human senses, particularly vision, audition and touch.

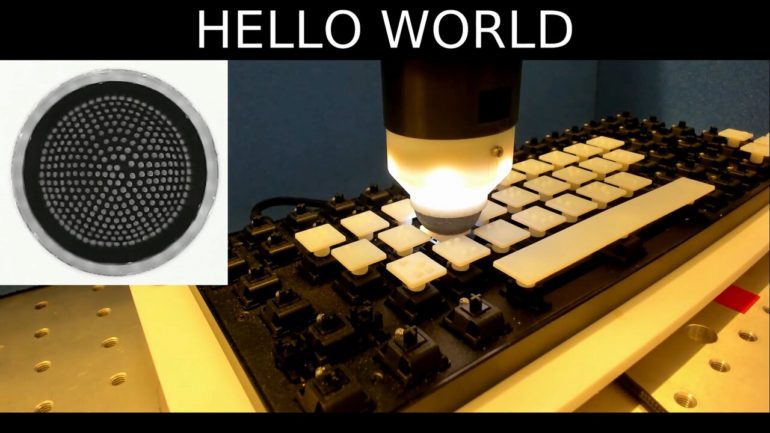

Researchers at the University of Bristol have recently carried out a study that could inform the development of new tactile reinforcement learning approaches. More specifically, they introduced an experimental environment and a set of tasks designed to teach AI agents to type on a Braille keyboard via reinforcement learning.

Braille keyboards are devices that allow people to type instructions for computers in Braille. Braille is a well-known system of reading and writing based on the sense of touch, and it is widely used by blind and visually impaired people. In Braille, unique patterns of raised dots represent letters of the alphabet or punctuation marks.

“The overall idea behind our paper was to have a robot learn to do a difficult task that humans can also learn with their hands,” Nathan F. Lepora, one of the researchers who carried out the study, told Tech Xplore. “We also wanted to show the capabilities of deep reinforcement learning, which is famous for learning to play games such as Go or Atari simulations, but also has a huge amount of potential in robotics.”

Lepora and his colleagues set out to teach an AI agent to complete a complex interactive task based on the sense of touch within a real, physical environment. They focused on Braille keyboard typing, a skill that humans typically take some time to acquire and that had never been reported in AI agents before.

“Alex, Raia and I spent a lot of time thinking up this demonstration—learning to type on a Braille keyboard,” Lepora explained. “We wanted a task that you can see would be difficult to learn as a human, but that can be done with a single tactile fingertip on a robot arm. Part of the problem is that we as humans are experts at using our hands, so most tasks using our sense of touch seem easy to us, even though they are actually very difficult for robots.”

Lepora and his colleagues devised four tasks that involve typing on a Braille keyboard. The tasks increase in difficulty, progressing from typing arrow keys to typing alphabet keys, and from discrete to continuous actions.

The researchers created both a simulated and a real environment in which AI agents can learn to type in Braille. They then trained state-of-the-art deep reinforcement learning algorithms to complete the four tasks in both environments (i.e., in simulation and on a physical robot).

The algorithms achieved strong results, learning to complete all four tasks in simulation and three of the four on the real robot. Only one task, which required agents to type letters of the alphabet using continuous actions, proved difficult to transfer to the real robot.

“The most notable achievement of our study is that the robot learned to type in the real world simply by interacting with the braille keyboard,” Lepora said. “Previously, deep reinforcement learning has been used in simulation, as in games, or by viewing a scene from afar with vision, as in the OpenAI demo of solving a Rubik’s Cube with a robot hand. It still took our robot a long time to learn—about a day—but now we can investigate ways to improve techniques for solving more difficult problems using robot touch.”

Lepora and his colleagues were the first to successfully train AI agents to type on a Braille keyboard both in simulation and in the real world. The environments, tasks and other code they created during their study have been released and are now publicly available online. In the future, this work could inspire further studies aimed at developing AI agents or deep learning techniques that perform well in complex, touch-based tasks.

“We’d really like to see what deep reinforcement learning is capable of achieving with robots that can interact physically with their environment,” Lepora said. “As a lab, we’re now looking into how you can feel around or move everyday objects using robots with a sense of touch. If you had a good enough tactile robot hand and sufficient artificial intelligence to learn how to control that hand, then in principle, the robot could learn to do anything that humans can do with their hands.”

More information:

Alex Church et al. Deep Reinforcement Learning for Tactile Robotics: Learning to Type on a Braille Keyboard, IEEE Robotics and Automation Letters (2020). DOI: 10.1109/LRA.2020.3010461

© 2021 Science X Network

Citation:

Teaching AI agents to type on a Braille keyboard (2021, January 6) retrieved 7 January 2021 from https://techxplore.com/news/2021-01-ai-agents-braille-keyboard.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.