C3.ai’s platform lets developers glue AI programs together quickly. “What would otherwise have taken months of programming by a data scientist can actually be done literally in days using the C3.ai platform.”

One of the most intriguing artificial intelligence firms to grab the public’s attention in the past year is C3.ai, the creation of serial entrepreneur Tom Siebel, who sold his last company to Oracle for $6 billion.

C3.ai went public in early December under the ticker symbol “AI,” carrying the hopes of many investors looking to cash in on the artificial intelligence buzz.

ZDNet caught up recently, via Zoom, with C3.ai’s chief technology officer, Edward Y. Abbo, to discuss what goes into making C3.ai’s artificial intelligence capabilities.

Abbo has a long history with Siebel himself, having worked as CTO for Siebel Systems from its founding till its acquisition, and then serving as CEO in C3.ai’s early years.

Just before the initial public offering of C3.ai’s stock, ZDNet examined patent documents that describe what C3.ai calls its “secret sauce,” the artificial intelligence suite that it says speeds development of customer relationship management functions.

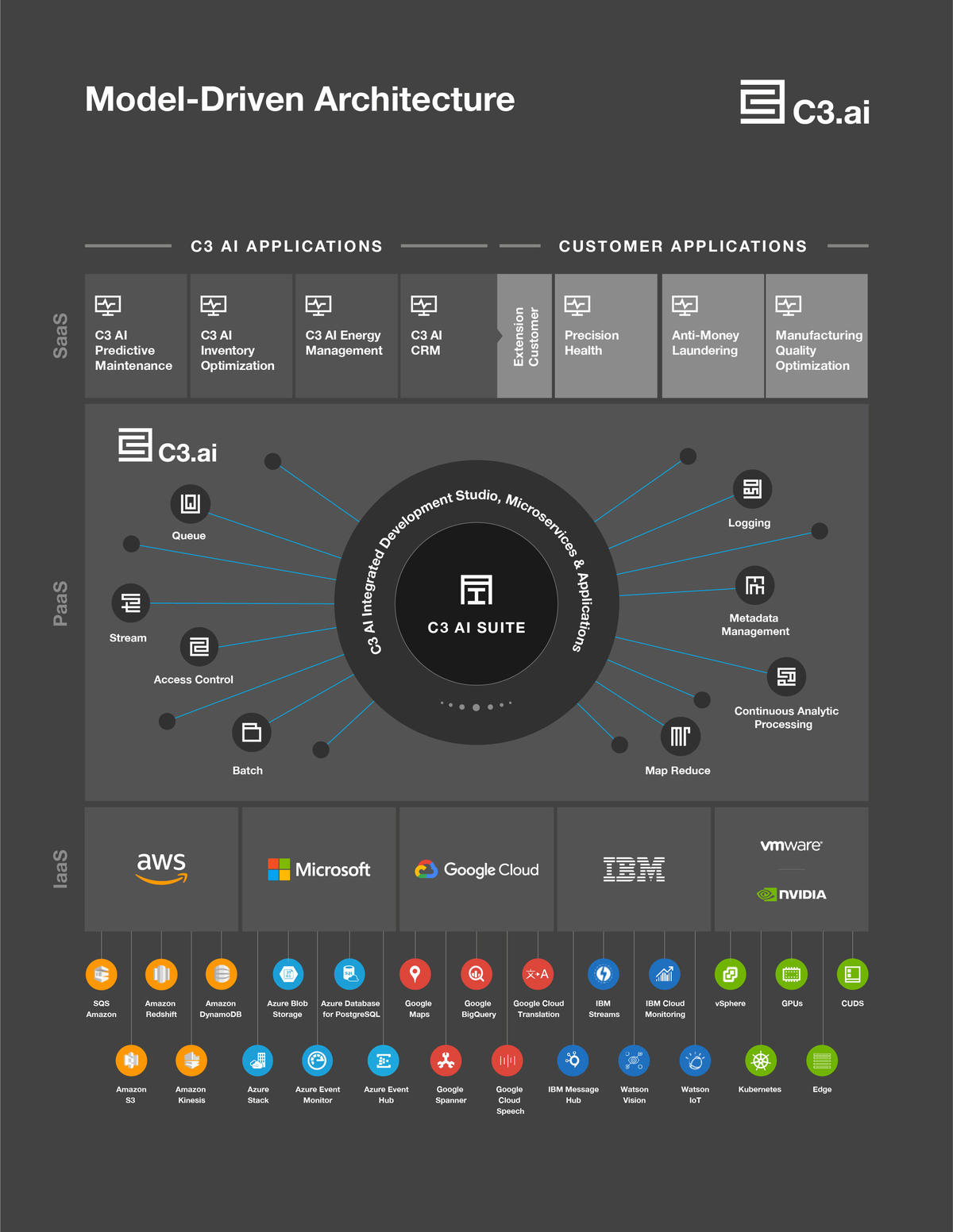

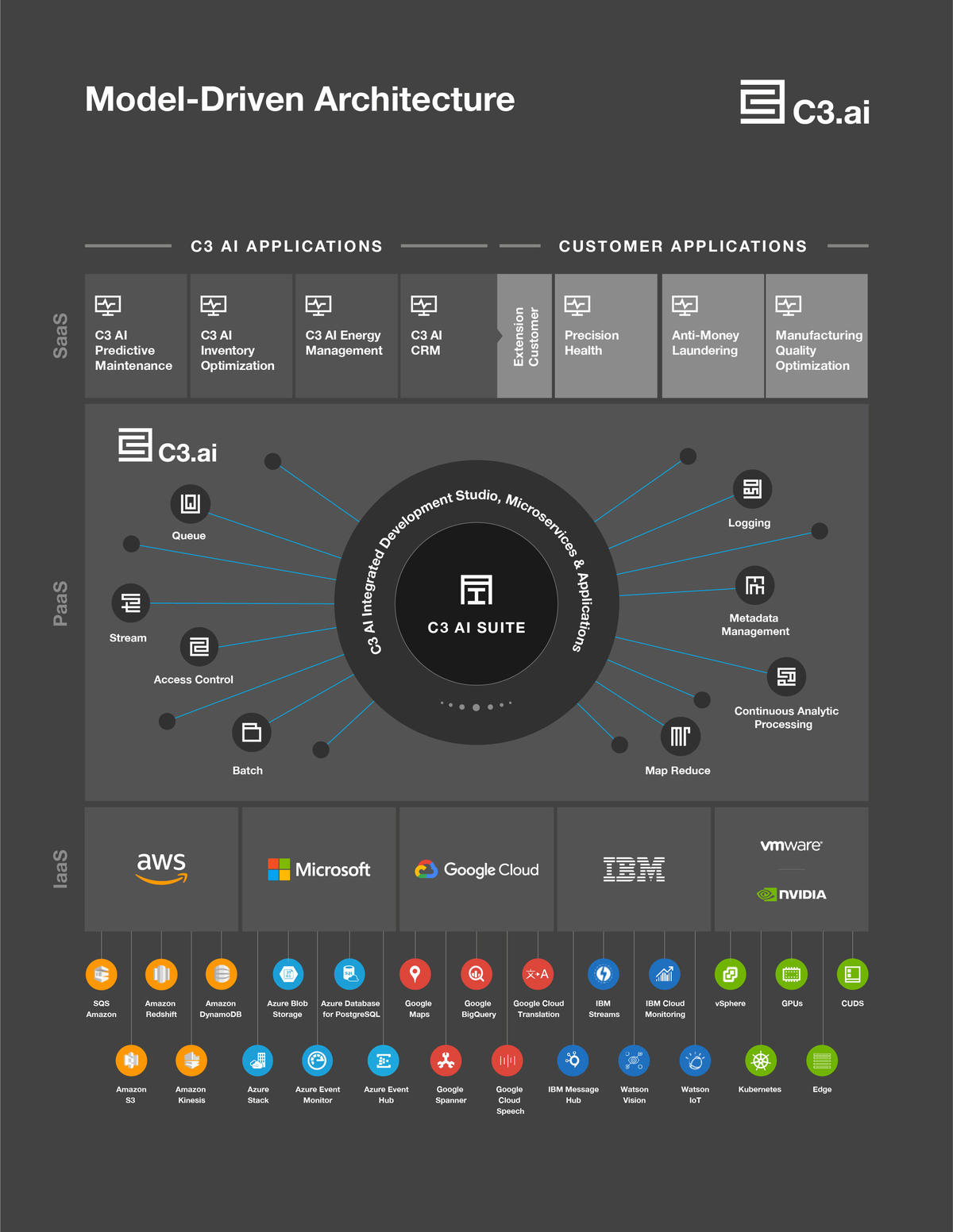

The view expressed in that article was that the secret sauce is really more about platform-as-a-service, or PaaS, than about AI per se. That is, the company has built a system to apply machine learning models at scale, and the innovation lies in the engineering of those large-scale systems more than in the refinement of any particular artificial intelligence approach.

Also: Dissecting C3.ai’s secret sauce: less about AI, more about fixing Hadoop

Abbo, while not necessarily refuting that notion, suggested there was more than meets the eye. C3.ai has the ability to “make data scientists dramatically more productive,” both through automation of many tasks and through the integration of machine learning approaches in AI, he said.

The ostensible occasion for Abbo’s conversation with ZDNet was last month’s announcement by C3.ai of what’s called the Open AI Energy Initiative, a partnership with Microsoft, Shell and Baker Hughes to bring automation and machine learning to some of the most challenging problems in the energy industry.

Consider, if you will, the example of a hard machine learning problem. An oil refinery is built, and there are schematics on paper of its construction. Over time, what’s on paper falls out of step with the actual state of the refinery as newer processes are introduced. And so, to create a living, accurate representation of the refinery in its current layout and function, its owners need what’s called a digital twin, a modern computer simulation of the layout of the refinery.

Re-creating the schematics of the plant involves optical character recognition to read documents, natural language processing, and multiple machine learning programming frameworks, all of which must operate in succession. C3.ai’s software simplifies the assembly of a pipeline of those functions, said Abbo.

“The innovation there is a model representation of an AI pipeline that we refer to as the C3.ai ML pipeline,” said Abbo, “which allows you to actually declare without having to program the steps involved in solving that problem, and use different AI frameworks in each step, and potentially even different programming languages, and allow you to basically very rapidly assemble this into a machine learning pipeline.”

The result, according to Abbo, is “what would otherwise have taken months of programming by a data scientist can actually be done literally in days using the C3.ai platform.”
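To make the idea of declaring steps rather than programming them concrete, here is a minimal Python sketch. It is not C3.ai's API: the registry, the step names and the `run_pipeline` helper are all hypothetical, and the "OCR" and "model" steps are trivial stand-ins. The point is only that the pipeline itself is plain data, so steps can be reordered or swapped without rewriting control flow.

```python
from typing import Any, Callable, Dict, List

# Hypothetical registry mapping a declared step name to its implementation.
STEP_REGISTRY: Dict[str, Callable[[Any], Any]] = {}


def register(name: str) -> Callable[[Callable[[Any], Any]], Callable[[Any], Any]]:
    """Decorator that makes a function available to pipeline declarations."""
    def wrap(fn: Callable[[Any], Any]) -> Callable[[Any], Any]:
        STEP_REGISTRY[name] = fn
        return fn
    return wrap


@register("ocr")
def fake_ocr(scan: str) -> str:
    # Stand-in for an OCR engine: the "scan" here is already text.
    return scan.strip()


@register("tokenize")
def tokenize(text: str) -> List[str]:
    # Stand-in for a natural language processing step.
    return text.lower().split()


@register("tag_equipment")
def tag_equipment(tokens: List[str]) -> List[str]:
    # Stand-in for a trained model: a simple keyword match.
    known = {"valve", "pump", "compressor"}
    return [t for t in tokens if t in known]


# The pipeline is declared as data, not written as procedural code.
PIPELINE_SPEC = [{"step": "ocr"}, {"step": "tokenize"}, {"step": "tag_equipment"}]


def run_pipeline(spec: List[Dict[str, str]], data: Any) -> Any:
    """Feed the output of each declared step into the next one."""
    for entry in spec:
        data = STEP_REGISTRY[entry["step"]](data)
    return data


result = run_pipeline(PIPELINE_SPEC, "  Pump feeds VALVE near tank  ")
print(result)
```

In a real system each registered step could wrap a different framework or even a different language runtime; this sketch keeps everything in one process for brevity.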

In another instance, Abbo said, a customer had two million machine learning models responsible for control valves. That was a model management problem: each model had to be deployed into production, then observed for variance, and revised.
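The observe-and-revise loop described here can be illustrated with a small Python sketch. It is an assumption-laden toy, not a description of C3.ai's tooling: the model IDs, the error figures and the 1.5x tolerance are invented for illustration, and "variance" is simplified to recent average error drifting above a baseline recorded at deployment.

```python
from statistics import mean
from typing import Dict, List


def needs_revision(baseline_error: float,
                   recent_errors: List[float],
                   tolerance: float = 1.5) -> bool:
    """Flag a model whose recent mean error exceeds tolerance x baseline."""
    return mean(recent_errors) > tolerance * baseline_error


def models_to_revise(baselines: Dict[str, float],
                     recent: Dict[str, List[float]],
                     tolerance: float = 1.5) -> List[str]:
    """Return the sorted IDs of models that have drifted past tolerance."""
    return sorted(
        model_id
        for model_id, base in baselines.items()
        # Skip models with no recent observations yet.
        if recent.get(model_id)
        and needs_revision(base, recent[model_id], tolerance)
    )


# Hypothetical per-valve models: error at deployment and recent observed errors.
baselines = {"valve-001": 0.10, "valve-002": 0.10, "valve-003": 0.20}
recent = {
    "valve-001": [0.09, 0.11, 0.10],
    "valve-002": [0.20, 0.25, 0.30],
    "valve-003": [0.21, 0.19],
}

flagged = models_to_revise(baselines, recent)
print(flagged)
```

At the scale Abbo describes, a check like this would have to run automatically across every model, which is why he frames it as a management problem rather than a modeling one.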

“This whole area of scaling up AI is another area where we’ve made huge investments, which provides a huge differentiator between us and others in the marketplace,” said Abbo.

“This is why our customers have huge deployments, and others are stuck in the prototype phase,” he said.

Regarding last month’s Open AI Energy Initiative, Abbo said the domain-specific aspect of the project is addressing “inefficiencies in the value chain” that are created by silos within organizations.

“Look at any medium or large-sized organizations,” said Abbo. “They have their data fragmented across hundreds if not thousands of systems.” Add in the increasing telemetry with sensors being placed in the field, and the complexity only increases. Much of the data collected with telemetry is “not activated, or not activated to the benefit of anyone,” said Abbo.

The second dimension of the Open AI Energy Initiative, or OAI, is to provide what Abbo called an application platform, akin to consumer app stores. The goal, said Abbo, is to have others outside the initial collection of partners participate in the ecosystem of applications.

All of that, said Abbo, is aimed at speeding up the so-called energy transition, the industry’s move toward what has been referred to as net zero emissions.

“The development of the ecosystem is critically important,” said Abbo. The announcement of the OAI took place at Baker Hughes’s annual meeting last month, where the company hosts representatives of the most prominent participants in oil and gas.

“The objective is to get a complete ecosystem of software providers,” said Abbo, including researchers, equipment providers, and energy providers themselves.